Ijraset Journal For Research in Applied Science and Engineering Technology


Systematic Study of Prompt Engineering

Authors: Jay Dinesh Rathod, Dr. Geetanjali V. Kale

DOI Link: https://doi.org/10.22214/ijraset.2024.63182


Abstract

Generative artificial intelligence (AI) is currently one of the most discussed topics in science and technology. It applies AI models to generate many different types of content and, to an extent, makes everyday human life easier. Prompt engineering, the craft of writing instructions that guide large language models (LLMs), has emerged as a critical technique in natural language processing (NLP). This systematic study examines prompt engineering in detail, exploring its techniques, evaluation methods, and applications. The study categorizes prompt engineering techniques into instruction-based, information-based, reformulation, and metaphorical prompts. It emphasizes the importance of evaluating prompt effectiveness using metrics such as accuracy, fluency, and relevance. The study also investigates the factors that influence prompt effectiveness, including prompt length, complexity, specificity, phrasing, vocabulary choice, framing, and context. It highlights how prompt engineering improves LLM performance on NLP tasks such as machine translation, question answering, summarization, and text generation. It also underscores the role of prompt engineering in developing domain-specific LLM applications, where it enables knowledge extraction and creative content generation and helps address domain-specific challenges. The study concludes by discussing ethical considerations in prompt engineering, emphasizing the need to mitigate bias and discrimination while ensuring transparency.

Introduction

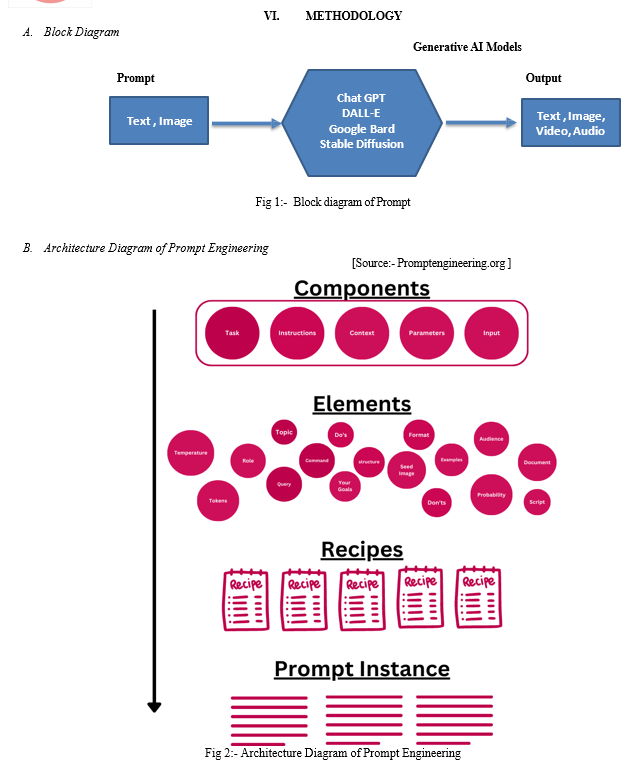

I. INTRODUCTION

Today we rely on technology to simplify much of our everyday work. Artificial intelligence (AI) has made significant strides in recent years, with advances in machine learning and deep learning enabling machines to perform tasks that were once exclusive to human intelligence. One branch of AI that has attracted immense attention and has the potential to transform many industries is generative AI. Generative AI is the subset of AI models and algorithms that learn patterns from vast amounts of data and generate original content autonomously. Prompt engineering can be regarded as a backbone of generative AI, because a prompt that supplies the right context is far more likely to produce the expected output. Within AI, large language models (LLMs) have emerged as powerful tools capable of generating human-quality text, translating languages, writing different kinds of creative content, and answering questions informatively. However, harnessing the full potential of LLMs requires a careful approach to communication, one that effectively bridges the gap between human intentions and machine capabilities. This is where prompt engineering comes in, offering a systematic methodology for crafting prompts, or instructions, that guide LLMs toward desired outcomes.

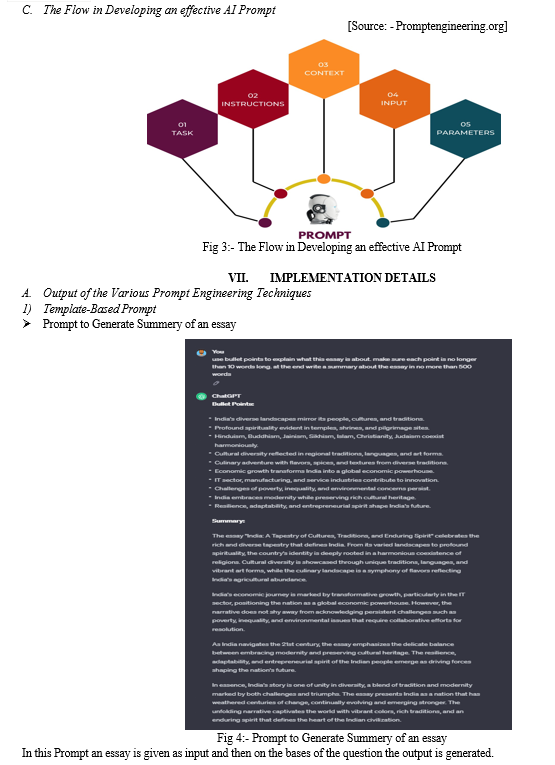

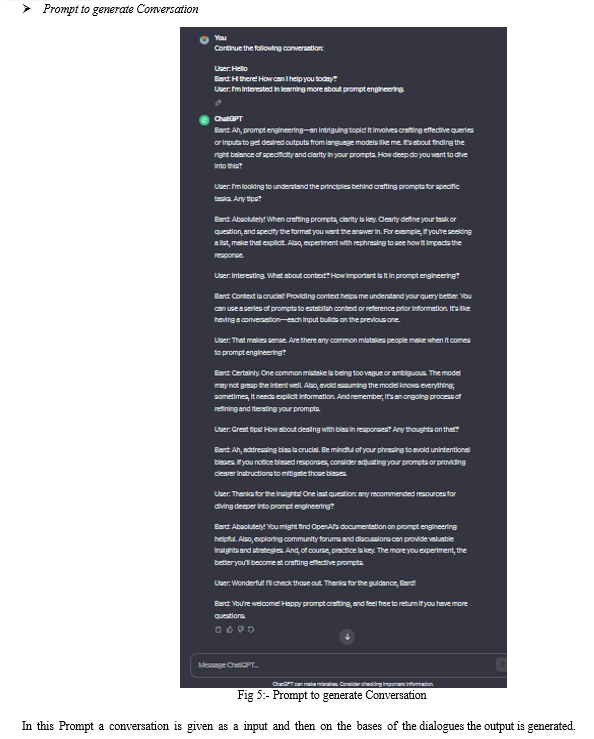

Prompt engineering encompasses a range of techniques, each designed to elicit specific responses from LLMs. Instruction-based prompts provide direct instructions or commands, while information-based prompts supply relevant context to inform the LLM's response. Reformulation prompts rephrase or restructure queries to enhance LLM understanding, and metaphorical prompts employ analogies to enrich comprehension.

Evaluating the effectiveness of prompt engineering techniques is essential for identifying the most suitable approaches for specific tasks. Researchers have developed various metrics to assess prompt effectiveness, including accuracy, fluency, and relevance. Accuracy measures the correctness of the LLM's response, while fluency gauges the naturalness and coherence of the output. Relevance evaluates the pertinence of the LLM's response to the given context or query.

Beyond the evaluation of individual techniques, a systematic study of prompt engineering entails a comprehensive analysis of the factors influencing prompt effectiveness. Prompt length, complexity, specificity, phrasing, vocabulary choice, framing, and context all play a role in shaping the LLM's interpretation and response. Understanding these factors empowers researchers and users to design more effective prompts, tailored to specific tasks and domains.

Automated prompt engineering tools represent the next frontier in prompt engineering, aiming to streamline and optimize the process of prompt design and selection. These tools leverage algorithms and machine learning techniques to automatically generate prompts based on input queries or task specifications, optimize prompt parameters for specific applications, and integrate seamlessly into LLM workflows.
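When reference answers are available, the accuracy and relevance metrics described above can be approximated very simply. The following is a minimal sketch that makes two assumptions. Exact string match, after lower-casing and stripping punctuation, serves as a proxy for accuracy. Word-set (Jaccard) overlap with the query serves as a crude proxy for relevance. The function names are hypothetical. Fluency is not scored, because it usually needs human judgement or a model-based scorer.

```python
import re


def normalize(text: str) -> list[str]:
    """Lower-case the text and keep only its alphanumeric word tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def exact_match_accuracy(predictions: list[str], references: list[str]) -> float:
    """Share of predictions whose normalized tokens equal those of the reference."""
    if len(predictions) != len(references):
        raise ValueError("predictions and references must have the same length")
    if not predictions:
        return 0.0
    hits = sum(normalize(p) == normalize(r) for p, r in zip(predictions, references))
    return hits / len(predictions)


def token_overlap_relevance(response: str, query: str) -> float:
    """Jaccard overlap of the word sets of response and query, from 0.0 to 1.0."""
    resp, q = set(normalize(response)), set(normalize(query))
    if not resp or not q:
        return 0.0
    return len(resp & q) / len(resp | q)


if __name__ == "__main__":
    # Outputs produced by one candidate prompt, compared with reference answers.
    preds = ["Paris", "Berlin.", "Madrid"]
    refs = ["paris", "Berlin", "Rome"]
    print(exact_match_accuracy(preds, refs))  # 2 of 3 answers match
    print(token_overlap_relevance("The capital of France is Paris", "capital of France"))  # 0.5
```

Real evaluations usually replace these proxies with task-specific metrics or human ratings. What matters here is that each candidate prompt's outputs are scored against the same references, so the prompts can be compared directly.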

The applications of prompt engineering extend far beyond the realm of research. By enhancing LLM performance in NLP tasks such as machine translation, question answering, and summarization, prompt engineering empowers users to extract meaningful insights from vast amounts of text data. Additionally, prompt engineering facilitates the development of domain-specific LLM applications, tailoring these models to specific industries and fields, such as medicine, law, and finance.

As LLM capabilities continue to advance, prompt engineering will undoubtedly play an increasingly crucial role in their development and application. By fostering a systematic understanding of prompt engineering principles, researchers and users can unlock the full potential of LLMs, enabling them to revolutionize communication, enhance knowledge discovery, and drive innovation across diverse fields.

II. MOTIVATION

In the ever-evolving landscape of artificial intelligence, large language models (LLMs) have emerged as powerful tools capable of generating human-quality text, translating languages, writing different kinds of creative content, and answering questions informatively.

These sophisticated models hold immense potential to transform various aspects of our lives, from communication and education to research and creative endeavors.

However, unlocking the full potential of LLMs requires a deep understanding of how to effectively interact with them, and this is where prompt engineering comes into play.

Prompt engineering, the art of crafting instructions or prompts to guide LLMs towards desired outcomes, has emerged as a critical discipline in the field of natural language processing (NLP).

By carefully designing and utilizing prompts, we can effectively communicate our intentions to LLMs, enabling them to generate more relevant, accurate, and fluent responses.

The motivation for a systematic study of prompt engineering stems from the recognition of its profound impact on the effectiveness and applicability of LLMs. A systematic approach to prompt engineering allows us to:

- Categorize and Evaluate Prompt Engineering Techniques: By categorizing different prompt engineering techniques based on their underlying approach, we can systematically evaluate their effectiveness across various tasks and domains. This evaluation process enables us to identify the most suitable techniques for specific applications and optimize prompt design for improved performance.

- Analyze Factors Influencing Prompt Effectiveness: A systematic study delves into the factors that influence the effectiveness of prompt engineering, such as prompt length, complexity, specificity, phrasing, vocabulary choice, framing, and context. Understanding these factors provides valuable insights into how to design prompts that maximize LLM performance and minimize errors.

- Develop Automated Prompt Engineering Tools: The systematic study of prompt engineering paves the way for the development of automated tools that can streamline and optimize prompt design. These tools can automatically generate prompts based on input queries or task specifications, optimize prompt parameters for specific applications, and integrate seamlessly into LLM workflows, making prompt engineering more accessible and efficient.

- Enhance LLM Performance in NLP Tasks: Prompt engineering plays a pivotal role in enhancing the performance of LLMs in various NLP tasks, such as machine translation, question answering, summarization, and text generation. By crafting effective prompts, we can guide LLMs to generate more accurate, fluent, and relevant outputs, expanding their applicability and impact across diverse domains.

- Develop Domain-specific LLM Applications: Prompt engineering empowers us to tailor LLMs to specific domains and applications, enabling them to address unique challenges and provide domain-specific solutions. By incorporating domain-specific knowledge and terminology into prompts, LLMs can be guided to extract relevant information, generate creative content tailored to specific domains, and address domain-specific challenges, revolutionizing various industries and fields.

- Address Ethical Considerations in LLM Development: Prompt engineering plays a crucial role in addressing ethical considerations related to LLM development and usage. By carefully designing prompts, we can mitigate bias and discrimination, promote fairness and inclusivity, and ensure responsible and ethical use of LLMs.
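In its simplest form, the automated prompt engineering described in the list above is a search over candidate prompts, each scored on a small labeled set. The following is a minimal sketch under stated assumptions. `fake_model` is a hypothetical stand-in for a real LLM call. Each template is assumed to contain a `{question}` placeholder. Exact-match accuracy is the selection criterion.

```python
from typing import Callable


def select_best_prompt(
    templates: list[str],
    examples: list[tuple[str, str]],
    model: Callable[[str], str],
) -> tuple[str, float]:
    """Return (template, accuracy) for the template with the highest exact-match accuracy.

    Each template must contain a "{question}" placeholder; ties keep the earliest template.
    """
    if not templates or not examples:
        raise ValueError("need at least one template and one example")
    best_template, best_score = templates[0], -1.0
    for template in templates:
        correct = 0
        for question, answer in examples:
            output = model(template.format(question=question))
            correct += output.strip().lower() == answer.strip().lower()
        score = correct / len(examples)
        if score > best_score:
            best_template, best_score = template, score
    return best_template, best_score


def fake_model(prompt: str) -> str:
    """Hypothetical LLM stand-in: it answers tersely only when asked for one word."""
    if "one word" not in prompt:
        return "I think the answer might be something like that."
    facts = {"capital of France": "Paris", "capital of Japan": "Tokyo"}
    for key, value in facts.items():
        if key in prompt:
            return value
    return "unknown"


if __name__ == "__main__":
    candidates = [
        "{question}",
        "Answer in one word. Question: {question}",
    ]
    dev_set = [
        ("What is the capital of France?", "Paris"),
        ("What is the capital of Japan?", "Tokyo"),
    ]
    # The more specific second template wins with accuracy 1.0.
    print(select_best_prompt(candidates, dev_set, fake_model))
```

Replacing `fake_model` with a real API client would leave the selection loop unchanged. More sophisticated tools also generate new candidate prompts rather than only ranking a fixed list.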

III. LITERATURE SURVEY

Table 1: Literature survey

| Sr no. | Paper Title | Author | Summary | Gap |
|---|---|---|---|---|
| 1 | Pre-train, Prompt, and Predict: A Systematic Survey of Prompting Methods in Natural Language Processing | Pengfei Liu, Weizhe Yuan, Jinlan Fu, Zhengbao Jiang, Hiroaki Hayashi, Graham Neubig (2023) | This paper provides a comprehensive overview of prompting methods in NLP, covering different prompting techniques, evaluation methods, and applications. | |
| 2 | Few-shot Fine-tuning vs. In-context Learning: A Fair Comparison and Evaluation | Marius Mosbach, Tiago Pimentel, Shauli Ravfogel, Dietrich Klakow, Yanai Elazar (2023) | This paper compares few-shot fine-tuning and in-context learning for NLP tasks, demonstrating the effectiveness of prompt engineering in in-context learning. | This paper focuses on a specific application of prompt engineering, in-context learning, while the base paper provides a more general overview of prompt engineering techniques and applications. |
| 3 | Jailbreaking ChatGPT via Prompt Engineering: An Empirical Study | Yi Liu, Gelei Deng, Zhengzi Xu, Yuekang Li, Yaowen Zheng, Yin Zhang, Lida Zhao, Tianwei Zhang, Yang Liu (2023) | This paper investigates the use of prompt engineering to bypass safety filters in ChatGPT, highlighting potential security concerns related to prompt engineering. | This paper focuses on the potential negative impacts of prompt engineering, while the base paper focuses on its positive applications. |
| 4 | Tool Learning with Foundation Models | Yujia Qin, Shengding Hu, Yankai Lin, Weize Chen, Ning Ding, Ganqu Cui (2023) | This paper introduces the concept of tool learning, where LLMs are trained to learn and use tools to perform tasks, demonstrating the potential of prompt engineering in tool development. | This paper focuses on a specific application of prompt engineering, tool learning, while the base paper provides a broader overview of prompt engineering techniques and applications. |
| 5 | One Small Step for Generative AI, One Giant Leap for AGI: A Complete Survey on ChatGPT in AIGC Era | Chaoning Zhang, Chenshuang Zhang, Chenghao Li, Yu Qiao, Sheng Zheng, Sumit Kumar Dam, Mengchun Zhang (2023) | This paper provides a comprehensive survey of ChatGPT and its applications in AIGC (AI-generated content), highlighting the role of prompt engineering in creative text generation. | This paper focuses on the use of prompt engineering in creative text generation, while the base paper provides a broader overview of prompt engineering techniques and applications. |
| 6 | A Survey of Large Language Models | Wayne Xin Zhao, Kun Zhou, Junyi Li, Tianyi Tang, Xiaolei Wang, Yupeng Hou, Yingqian Min, Beichen (2023) | This paper surveys the capabilities and applications of LLMs, highlighting the role of prompt engineering in their development and usage. | This paper provides a general overview of LLMs and does not delve into the specifics of prompt engineering techniques. |
| 7 | Augmented Language Models: a Survey | Grégoire Mialon, Roberto Dessì, Maria Lomeli, Christoforos Nalmpantis, Ram Pasunuru, Roberta Raileanu (2023) | This paper surveys augmented language models, which enhance LLMs with additional capabilities, highlighting the role of prompt engineering in augmenting LLMs. | This paper focuses on augmented language models and does not extensively explore prompt engineering techniques for standard LLMs. |
| 8 | A Survey for In-context Learning | Qingxiu Dong, Lei Li, Damai Dai, Ce Zheng, Zhiyong Wu (2023) | This paper surveys in-context learning, a learning paradigm for NLP tasks, demonstrating the role of prompt engineering in enabling in-context learning. | This paper focuses on in-context learning and does not extensively explore other prompt engineering applications. |
| 9 | Towards Reasoning in Large Language Models: A Survey | Jie Huang, Kevin Chen-Chuan Chang (2023) | This paper surveys reasoning in large language models (LLMs) and identifies challenges and opportunities for future research. | This paper focuses on reasoning in LLMs and does not extensively explore prompt engineering as a tool for enhancing reasoning capabilities. |
| 10 | Prompting Large Language Model for Machine Translation: A Case Study | Biao Zhang, Barry Haddow, Alexandra Birch (2023) | This paper presents a systematic study of prompting for MT, exploring topics ranging from prompting strategy and the use of unlabeled monolingual data to transfer learning. | This paper finds that prompting for MT requires retaining the source-target mapping signals in the demonstrations, and that directly applying monolingual data for prompting, though appealing, does not work. |
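Several of the surveyed papers (rows 2, 8, and 10) concern in-context learning, in which worked examples are placed directly in the prompt. Row 10 notes that for machine translation the demonstrations should keep each source sentence paired with its translation. A minimal sketch of such a few-shot prompt builder follows. The `German:`/`English:` labels and blank-line separators are illustrative assumptions, not the template used in the cited study.

```python
def build_few_shot_mt_prompt(
    demos: list[tuple[str, str]],
    source: str,
    src_lang: str = "German",
    tgt_lang: str = "English",
) -> str:
    """Build an in-context translation prompt.

    Each demonstration keeps its source sentence next to its translation, so the
    source-target mapping is visible to the model; the new source sentence is then
    appended with an empty target slot for the model to complete.
    """
    lines = []
    for src, tgt in demos:
        lines.append(f"{src_lang}: {src}")
        lines.append(f"{tgt_lang}: {tgt}")
        lines.append("")  # blank line between demonstrations
    lines.append(f"{src_lang}: {source}")
    lines.append(f"{tgt_lang}:")
    return "\n".join(lines)


if __name__ == "__main__":
    demos = [("Guten Morgen.", "Good morning."), ("Danke schön.", "Thank you very much.")]
    print(build_few_shot_mt_prompt(demos, "Wie geht es dir?"))
```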

IV. PROBLEM DEFINITION AND SCOPE

A. Problem Definition

To study the uses and applications of prompt engineering across various domains, and the functioning of different models.

B. Scope

The scope of prompt engineering is vast and ever-growing, encompassing a wide range of applications and potential impacts. Here are some key areas where prompt engineering is having a significant impact:

- Natural Language Processing (NLP): Prompt engineering is revolutionizing the field of NLP, enabling the development of more effective and versatile NLP systems. LLMs can be prompted to perform a variety of NLP tasks, including:

  - Text Summarization: Generating concise summaries of lengthy texts, capturing the main points and essential information.

  - Machine Translation: Translating text from one language to another, preserving the meaning and context of the original text.

  - Question Answering: Answering questions about specific topics or knowledge domains, drawing from large amounts of text data.

  - Dialogue Generation: Engaging in natural and coherent conversations with humans, understanding context and responding appropriately.

- Creative Content Generation: Prompt engineering is unlocking the creative potential of LLMs, enabling them to generate a variety of creative content formats, such as:

  - Poems: Crafting poems in various styles, mimicking the works of renowned poets and exploring different genres.

  - Stories: Writing creative narratives, developing engaging plotlines, and creating memorable characters.

  - Scripts: Generating screenplays for movies, TV shows, or plays, incorporating dialogue, scene descriptions, and character interactions.

  - Musical Pieces: Composing music in different genres, producing melodies, harmonies, and rhythms.

- Knowledge Discovery: Prompt engineering is facilitating knowledge discovery by enabling LLMs to extract insights from large datasets:

  - Identifying Patterns: Uncovering hidden patterns and relationships within complex data, providing insights into underlying trends and structures.

  - Making Predictions: Forecasting future outcomes or events based on historical data and patterns, informing decision-making and risk assessment.

  - Generating Hypotheses: Formulating new ideas and hypotheses based on existing knowledge, guiding future research and exploration.

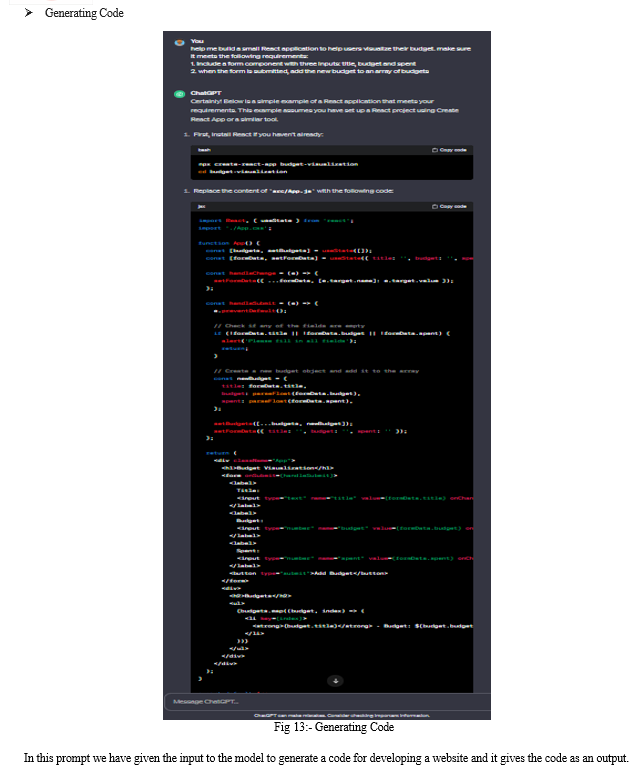

- Code Generation: Prompt engineering is assisting in code generation, enhancing the productivity and efficiency of software development:

  - Generating Code Snippets: Prompting LLMs to generate code snippets in various programming languages, automating routine tasks and reducing development time.

  - Refactoring Code: Assisting in code refactoring, improving code quality, readability, and maintainability.

  - Detecting Bugs: Prompting LLMs to identify potential bugs or errors in code, reducing the risk of software malfunctions.

- Education and Training: Prompt engineering is transforming education and training by providing personalized and interactive learning experiences:

  - Generating Customized Study Materials: Prompting LLMs to create personalized study guides, practice problems, and adaptive learning exercises.

  - Providing Interactive Tutorials: Prompting LLMs to guide users through complex concepts and procedures, providing real-time feedback and explanations.

  - Generating Personalized Feedback: Prompting LLMs to analyze student work and provide constructive feedback, identifying strengths and areas for improvement.
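The scope list above includes text summarization. A text longer than a model's input limit is often summarized in two stages: each chunk is summarized separately, and the partial summaries are then merged. The sketch below only builds the prompts for both stages. The word-based chunking, the 500-word default, and the prompt wording are all assumptions, and real systems usually count model tokens rather than words.

```python
def chunk_words(text: str, max_words: int) -> list[str]:
    """Split text into consecutive chunks of at most max_words whitespace-separated words."""
    if max_words <= 0:
        raise ValueError("max_words must be positive")
    words = text.split()
    return [" ".join(words[i:i + max_words]) for i in range(0, len(words), max_words)]


def chunk_prompts(text: str, max_words: int = 500) -> list[str]:
    """First stage: one summarization prompt per chunk."""
    return [
        f"Summarize the following passage in 2-3 sentences, keeping key facts:\n\n{chunk}"
        for chunk in chunk_words(text, max_words)
    ]


def combine_prompt(partial_summaries: list[str]) -> str:
    """Second stage: a single prompt that merges the partial summaries."""
    bullets = "\n".join(f"- {s}" for s in partial_summaries)
    return (
        "Combine these partial summaries into one concise summary "
        f"without repeating points:\n\n{bullets}"
    )


if __name__ == "__main__":
    document = " ".join(f"word{i}" for i in range(1200))
    prompts = chunk_prompts(document, max_words=500)
    print(len(prompts))  # 3 chunks: 500 + 500 + 200 words
    print(combine_prompt(["Sales rose in the first quarter.", "Costs fell in the second quarter."]))
```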

V. BACKGROUND

A. Prompt Engineering

Prompt engineering, an emerging field in natural language processing (NLP), focuses on designing and crafting instructions or prompts to guide large language models (LLMs) towards desired outcomes. LLMs, such as GPT-3 and LaMDA, have demonstrated remarkable capabilities in various NLP tasks, and prompt engineering plays a pivotal role in unlocking their full potential by bridging the gap between human intentions and machine capabilities.

LLMs are trained on massive datasets of text and code, and without careful prompting their responses simply follow the patterns they observed in that data. This can lead to repetitive, unoriginal, or even harmful outputs. Prompt engineering allows more precise control over the LLM's outputs at inference time, enabling users to guide the model toward specific goals or outcomes without retraining it.

The emergence of prompt engineering has been driven by several factors:

- Increased Complexity of NLP Tasks: As NLP tasks have become more complex, requiring more nuanced understanding and reasoning, traditional approaches to training LLMs have become less effective. Prompt engineering provides a more flexible and adaptable approach to guiding LLMs in these challenging tasks.

- Rise of Domain-specific Applications: The demand for LLMs in various domains, such as medicine, law, and finance, has created a need for tailoring these models to specific industries and fields.

B. Modalities

Prompt engineering can be applied to various modalities of text data, including:

- Natural Language Text: This is the most common modality for prompt engineering, involving crafting prompts in human language to guide LLMs in generating text, translating languages, writing different kinds of creative content, and answering questions in an informative way.

- Code: Prompt engineering can also be used to guide LLMs in generating or modifying code, enabling them to perform tasks such as programming, software development, and data analysis.

- Scripts: Prompt engineering can be applied to generate scripts for various purposes, such as screenplays, plays, and musical pieces.

C. Modelling Techniques in Prompt Engineering

Various modeling techniques can be employed in prompt engineering, each with its own strengths and applications:

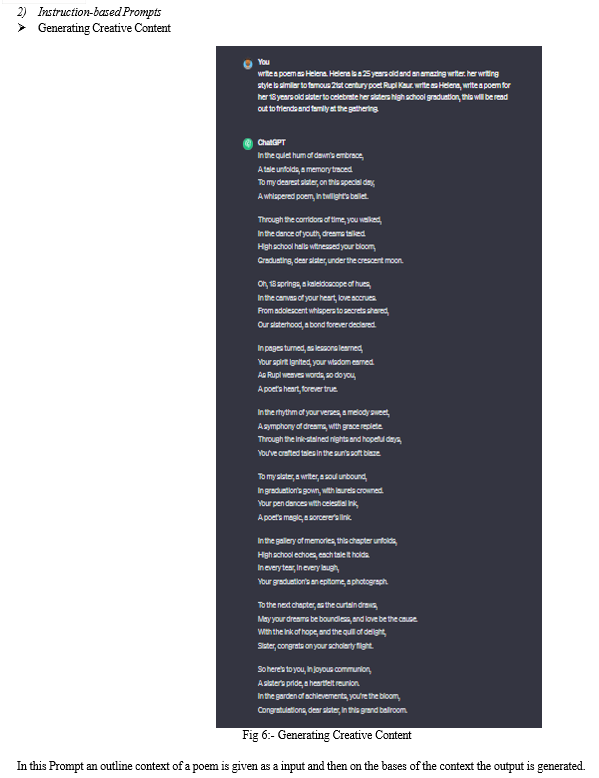

- Template-based Prompts: These prompts follow a predefined structure or template, providing a consistent framework for guiding LLM responses. They are particularly useful for tasks that require a specific format or style, such as writing emails or generating reports.

- Instruction-based Prompts: These prompts directly instruct the LLM what to do or how to respond, providing explicit guidance for task completion. They are effective for tasks that require clear, step-by-step instructions, such as explaining how to follow a recipe or assemble furniture.

- Information-based Prompts: These prompts supply relevant context or background information to inform the LLM's response, enhancing its understanding of the task or query. They are helpful for tasks that require prior knowledge or background information, such as answering questions about historical events or scientific concepts.

- Reformulation Prompts: These prompts rephrase or restructure queries to improve the LLM's comprehension, making them easier for the model to process and interpret. They are useful for tasks where the original query may be ambiguous or unclear, such as rephrasing complex questions or translating informal language.

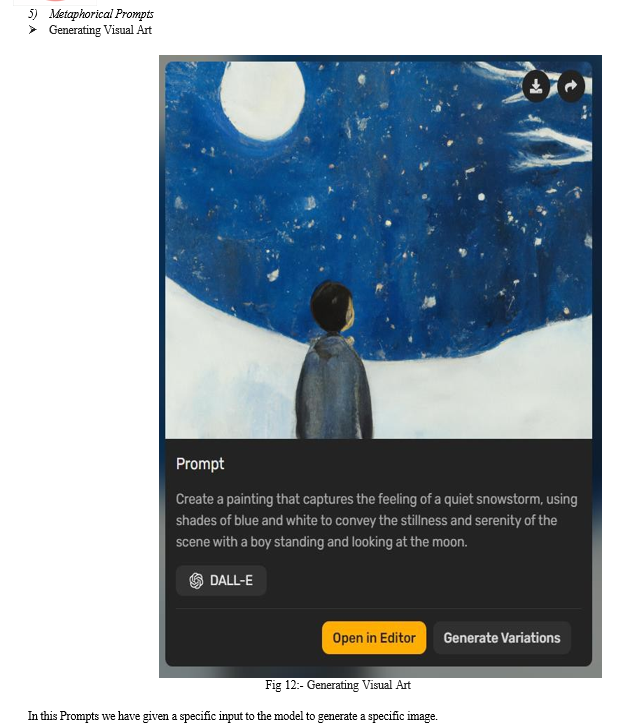

- Metaphorical Prompts: These prompts employ metaphors or analogies to enrich the LLM's understanding, providing a deeper context or frame of reference for the task. They are particularly effective for tasks that require creative thinking or abstract reasoning, such as writing poetry or generating metaphors.
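The sketch below makes the five techniques above concrete, with one hypothetical prompt per technique written as a small Python function. The wording of every prompt is an illustrative assumption, not a recommended template. The reformulation example asks the model to restate the query before answering, which is only one of several ways to apply that technique.

```python
def template_prompt(recipient: str, topic: str) -> str:
    # Template-based: a fixed structure whose slots are filled in per request.
    template = "Write a short, polite email to {recipient} about {topic}. Sign off as 'The Team'."
    return template.format(recipient=recipient, topic=topic)


def instruction_prompt(task: str) -> str:
    # Instruction-based: state exactly what to do and how to format the answer.
    return f"{task}\nRespond with a numbered list of steps, one step per line."


def information_prompt(context: str, question: str) -> str:
    # Information-based: supply background context before the question.
    return f"Context:\n{context}\n\nUsing only the context above, answer: {question}"


def reformulation_prompt(raw_query: str) -> str:
    # Reformulation: have an informal or ambiguous query restated precisely first.
    return (
        f'The user wrote: "{raw_query}"\n'
        "First restate this as one precise question, then answer the restated question."
    )


def metaphorical_prompt(concept: str, analogy: str) -> str:
    # Metaphorical: frame the task through an analogy.
    return f"Explain {concept} as if it were {analogy}, keeping the analogy consistent throughout."


if __name__ == "__main__":
    print(template_prompt("the finance department", "the quarterly report deadline"))
    print(instruction_prompt("Explain how to assemble a bookshelf."))
    print(information_prompt("The Eiffel Tower was completed in 1889.", "When was it completed?"))
    print(reformulation_prompt("why sky blue??"))
    print(metaphorical_prompt("a computer network", "a postal system"))
```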

D. Applications

Prompt engineering has a wide range of applications across various domains, empowering LLMs to perform diverse tasks and address various challenges:

- Natural Language Generation: Prompt engineering can be used to generate human-quality text for various purposes, such as writing creative content, translating languages, and composing emails or letters. It supports crafting engaging narratives, translating complex documents, and producing personalized communications.

- Question Answering: Prompt engineering can guide LLMs to provide comprehensive and informative answers to questions, even in open-ended or complex domains. It facilitates the extraction of knowledge from vast amounts of text, enabling the answering of questions about science, history, or current events.

- Machine Translation: Prompt engineering can enhance machine translation accuracy and fluency, particularly in challenging domains such as medical or legal texts. It improves the understanding of nuances and specialized terminology, leading to more accurate and natural-sounding translations.

- Summarization: Prompt engineering can empower LLMs to generate concise and informative summaries of lengthy texts, capturing the key points and essential information. It enables the distillation of complex information into concise summaries, facilitating knowledge assimilation and decision-making.

- Domain-specific Applications: Prompt engineering enables tailoring LLMs to specific domains and applications, such as medicine, law, and finance, addressing unique challenges and providing domain-specific solutions. It adapts LLMs to understand and generate domain-specific text.
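For question answering over domain documents like those mentioned above, a common approach is to put the most relevant passages into an information-based prompt. The sketch below picks passages by plain word overlap, a deliberately simple stand-in for a real retrieval system such as embedding search. The function names, the passage numbering, and the instruction wording are all assumptions.

```python
import re


def words(text: str) -> set[str]:
    """Lower-cased alphanumeric word set of a text."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def top_passages(question: str, passages: list[str], k: int = 1) -> list[str]:
    """Return the k passages sharing the most words with the question.

    sorted() is stable, so passages with equal overlap keep their original order.
    """
    q = words(question)
    ranked = sorted(passages, key=lambda p: len(q & words(p)), reverse=True)
    return ranked[:k]


def qa_prompt(question: str, passages: list[str], k: int = 1) -> str:
    """Information-based QA prompt built from the retrieved passages."""
    selected = top_passages(question, passages, k)
    context = "\n".join(f"[{i + 1}] {p}" for i, p in enumerate(selected))
    return (
        "Answer the question using only the passages below. "
        "If they do not contain the answer, say so.\n\n"
        f"{context}\n\nQuestion: {question}\nAnswer:"
    )


if __name__ == "__main__":
    library = [
        "Aspirin is used to reduce fever and relieve mild pain.",
        "The statute of limitations for contract claims varies by jurisdiction.",
        "Compound interest is interest calculated on both principal and accumulated interest.",
    ]
    print(qa_prompt("What is aspirin used to reduce?", library))
```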


Conclusion

Generative artificial intelligence (AI) has emerged as a transformative field, empowering machines to learn from data and generate original content autonomously. This report has provided a systematic study of prompt engineering, covering its modeling techniques, applications, and the related literature. Prompt engineering has emerged as a powerful tool for leveraging the capabilities of LLMs, enabling users to accomplish a wide range of tasks and applications. By understanding the principles, techniques, and applications of prompt engineering, individuals and organizations can effectively harness the power of LLMs for their specific needs and contribute to the advancement of this transformative technology.

References

[1] Liu, P., Yuan, W., Fu, J., Jiang, Z., Hayashi, H., & Neubig, G. (2023). Pre-train, prompt, and predict: A systematic survey of prompting methods in natural language processing. ACM Computing Surveys, 55(9), 1-35.

[2] Mosbach, M., Pimentel, T., Ravfogel, S., Klakow, D., & Elazar, Y. (2023). Few-shot fine-tuning vs. in-context learning: A fair comparison and evaluation. arXiv preprint arXiv:2305.16938.

[3] Liu, Y., Deng, G., Xu, Z., Li, Y., Zheng, Y., Zhang, Y., ... & Liu, Y. (2023). Jailbreaking ChatGPT via prompt engineering: An empirical study. arXiv preprint arXiv:2305.13860.

[4] Qin, Y., Hu, S., Lin, Y., Chen, W., Ding, N., Cui, G., ... & Sun, M. (2023). Tool learning with foundation models. arXiv preprint arXiv:2304.08354.

[5] Zhang, C., Zhang, C., Li, C., Qiao, Y., Zheng, S., Dam, S. K., ... & Hong, C. S. (2023). One small step for generative AI, one giant leap for AGI: A complete survey on ChatGPT in AIGC era. arXiv preprint arXiv:2304.06488.

[6] Zhao, W. X., Zhou, K., Li, J., Tang, T., Wang, X., Hou, Y., ... & Wen, J. R. (2023). A survey of large language models. arXiv preprint arXiv:2303.18223.

[7] Mialon, G., Dessì, R., Lomeli, M., Nalmpantis, C., Pasunuru, R., Raileanu, R., ... & Scialom, T. (2023). Augmented language models: a survey. arXiv preprint arXiv:2302.07842.

[8] Dong, Q., Li, L., Dai, D., Zheng, C., Wu, Z., Chang, B., ... & Sui, Z. (2022). A survey on in-context learning. arXiv preprint arXiv:2301.00234.

[9] Huang, J., & Chang, K. C. C. (2022). Towards reasoning in large language models: A survey. arXiv preprint arXiv:2212.10403.

[10] Zhang, B., Haddow, B., & Birch, A. (2023, July). Prompting large language model for machine translation: A case study. In International Conference on Machine Learning (pp. 41092-41110). PMLR.

[11] https://chat.openai.com/

[12] https://bard.google.com/

[13] https://app.simplified.com/

[14] Promptengineering.org

Copyright

Copyright © 2024 Jay Dinesh Rathod, Dr. Geetanjali V. Kale. This is an open access article distributed under the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.


Paper Id : IJRASET63182

Publish Date : 2024-06-07

ISSN : 2321-9653

Publisher Name : IJRASET
