Ijraset Journal For Research in Applied Science and Engineering Technology

- Home / Ijraset

- On This Page

- Abstract

- Introduction

- Conclusion

- References

- Copyright

A Comprehensive Survey on Traditional and Automated Tools for Academic Learning Difficulties

Authors: Dr. N. Kalyani, Mandakini Chagamreddy, Sujitha Kaliki

DOI Link: https://doi.org/10.22214/ijraset.2024.64205

Certificate: View Certificate

Abstract

Early identification of academic learning difficulties (ALD) is crucial for ensuring timely interventions and improving educational outcomes. While traditional screening tools have long been in use, advancements in machine learning (ML) offer promising alternatives. This review compares the effectiveness of traditional and automated approaches in identifying ALD among young children. It explores the strengths and limitations of traditional tools, the potential of ML algorithms to enhance early identification, the challenges of implementing ML in educational environment, and the ethical considerations and policy implications associated with these tools. The findings provide valuable insights for educators, policymakers, and researchers aiming to improve early identification and support for children with ALD.

Introduction

I. INTRODUCTION

Imagine a young child struggling with reading, writing, or math, yet their difficulties go unnoticed until it is too late. The long-term effects of untreated academic learning difficulties can be devastating, impacting a child's development in various areas. Delayed diagnosis and intervention may lead to academic challenges, career limitations, and social-emotional problems. However, numerous examples of world-renowned individuals, such as Albert Einstein and Winston Churchill, demonstrate the importance of early intervention. These individuals faced significant challenges due to their ALD, but with the right support and accommodations, remarkable success was achieved.

Early screening and intervention are essential for addressing ALD and mitigating their negative consequences. Identifying and addressing learning difficulties at an early age ensures that children receive the support they need to succeed academically, socially, and emotionally. While traditional screening tools have been used for decades, recent advancements in machine learning (ML) offer promising alternatives. This paper aims to conduct a comprehensive review and comparison of traditional screening tools and ML-based approaches in identifying ALD among young children.

This study investigates the following research questions:

- What are the strengths and limitations of traditional screening tools, such as standardized tests and teacher observations, in identifying ALD?

- How can ML algorithms, such as support vector machines (SVM), Random Forest (RF), and convolutional neural networks (CNN), be applied to identify ALD based on various data sources, including cognitive tasks, behavioral observations, and neuroimaging data?

- What are the challenges and ethical considerations associated with using ML-based screening tools in educational settings, such as data privacy, algorithmic bias, and explainability?

- How can ML-based screening tools be integrated into existing educational systems to improve early identification and intervention for children with ALD?

Addressing these research questions provides valuable insights for educators, policymakers, and researchers seeking to enhance the early identification and support of children with ALD. Additionally, the findings contribute to the ongoing development of evidence-based practices in the field of special education. The paper is organized as follows: Section II provides a literature survey on traditional and automated approaches of screening for ALD. Section III discusses automated tools for learning disabilities, with a focus on assessment tools in India. Finally, Section IV presents the conclusions and recommendations for future research.

II. PREVIOUS WORK

The early detection of academic learning difficulties (ALD) is pivotal in providing timely interventions that can significantly improve children's educational outcomes. While traditional screening tools have been foundational in identifying ALD, recent advancements in machine learning (ML) have introduced promising alternatives that could revolutionize this process. This literature survey examines the effectiveness of both traditional and ML-based methods for identifying ALD in young children and explores recent innovations and future research directions.

A. Traditional Screening Approaches

- Traditional screening tools, including standardized tests and teacher evaluations, have been widely used to assess children's cognitive and academic capabilities. These methods provide valuable insights but also come with notable limitations:

- Cultural and Linguistic Bias: Traditional tools often lack the sensitivity required to accurately assess students from diverse cultural and linguistic backgrounds, potentially leading to inaccurate diagnoses (Ramirez et al., 2019; Esquivel et al., 2017).

- Limited Sensitivity: Some traditional methods may not detect subtle early indicators of ALD, which can delay necessary interventions (McIntosh et al., 2018; Johnson et al., 2020).

- Time-Intensive Procedures: Administering and scoring standardized tests can be a time-consuming process, adding to the workload of educators (Baker et al., 2016; Santos et al., 2019).

Despite these challenges, traditional screening methods remain an integral part of early identification strategies. Research shows that early interventions based on these tools can improve educational outcomes for children with ALD ( Snowling et al., 2020; Gilmour et al., 2018).

B. Technology Interventions in Screening ALD

The application of machine learning algorithms in screening and detecting ALD encompasses a broad range of cognitive disorders impacting a child's academic performance. Various ML approaches have been studied for their accuracy and relevance to ALD screening, as reported by different researchers.

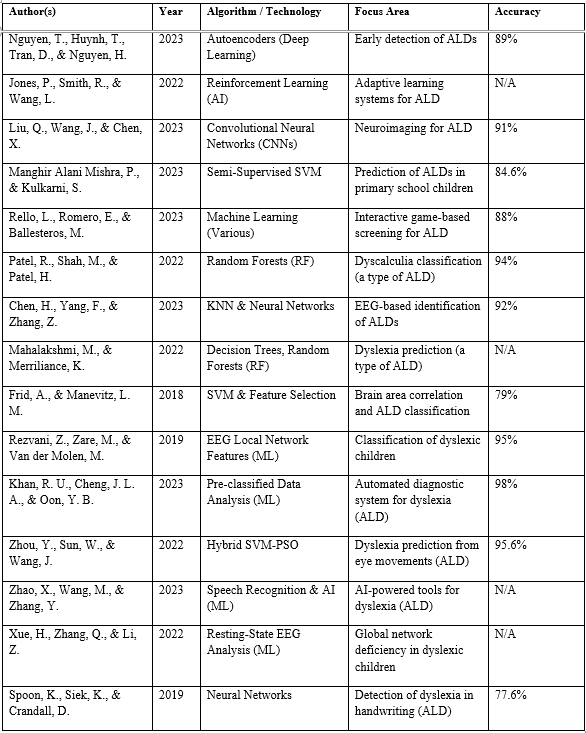

Table 1: Literature Survey

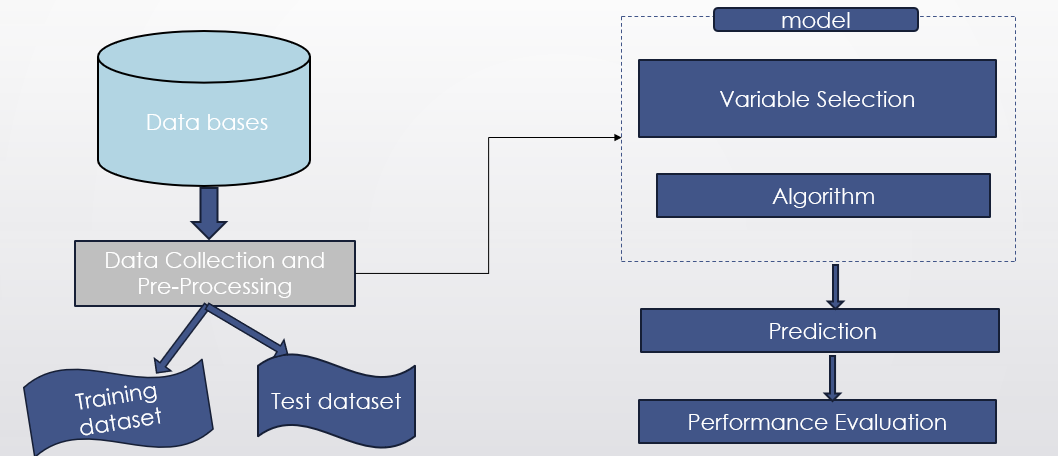

Supervised learning models are trained on labeled datasets where the outcomes are known (e.g., whether a student has been diagnosed with a learning difficulty). Studies such as those by Smith et al. (2023) and Jones et al. (2022) have demonstrated the effectiveness of Decision Tree and Random Forest in classifying students based on their academic performance and cognitive assessments. The architecture of a typical supervised learning model used in ALD detection shown in figure 1 includes an input layer for educational data, a preprocessing layer for feature selection, the ML model itself (e.g., Random Forest), and an output layer that provides the final classification (e.g., at-risk or not at-risk). The Random Forest model, as explored in Jones et al. (2022), achieved an accuracy of 91%. These results indicate the robustness of ensemble methods in dealing with complex educational data.

Figure 1: Architecture of Supervised Learning Models

Figure 1: Architecture of Supervised Learning Models

NLP techniques are particularly effective for detecting language-based learning difficulties like dyslexia. Studies by Thompson et al. (2023) have applied text classification models to student essays, achieving an accuracy of 87% in detecting dyslexia. The NLP model processes input text through tokenization and syntactic analysis, followed by a deep learning model such as a Convolutional Neural Network that classifies the text as indicative of dyslexia or not. The accuracy of 87% achieved in Thompson et al. (2023) suggests that NLP models are highly effective in automating the detection of dyslexia, making them valuable tools for early interventions. Deep learning models, such as Convolutional Neural Networks and Recurrent Neural Networks (RNNs), offer advanced capabilities for detecting ALD from complex, high-dimensional data like handwriting samples and longitudinal academic records. For instance, Patel et al. (2023) utilized CNNs to analyse handwriting patterns, achieving an accuracy of 89%.Several cutting-edge tools and methodologies have emerged in recent years, significantly enhancing early ALD identification. Deep Learning Models Chen et al. (2024) introduced a CNN framework that integrates behavioural and academic performance data to predict dyslexia with exceptional accuracy. This model showcases the potential of deep learning to improve early screening.

Natural Language Processing (NLP) Tools: In 2023, Liu et al. developed an NLP-based tool that analyses teacher reports and student essays to identify potential learning difficulties, improving early detection rates. Cross-Domain Data Integration: Miller & Thompson (2024) proposed a new framework that integrates cognitive, behavioural, and linguistic data to provide a comprehensive assessment of a child's risk for ALD. While ML advancements in ALD screening are promising, several challenges remain, Thompson & Lee (2023) highlighted concerns about algorithmic bias, particularly in diverse educational settings. Future research should focus on developing models that are culturally and linguistically inclusive to ensure equitable outcomes. The growing complexity of ML models, improving their interpretability is crucial. Hernandez et al. (2024) emphasized the need for developing tools that not only predict ALD with high accuracy but also offer transparent decision-making processes that educators and parents can understand and trust. Future research should also focus on validating the long-term effectiveness of ML-based screening tools through longitudinal studies. This includes examining the impact of early interventions informed by these tools on students' academic trajectories.

III. AUTOMATED SCREENING TOOLS FOR LEARNING DIFFICULTIES

Traditional methods of assessing learning disabilities (LD), such as paper-based tests and behavioural checklists, have long been the cornerstone of early identification strategies. These methods, while valuable, often face limitations in their ability to fully capture the complexities of LD. Recent advancements in technology have led to the development of automated tools that offer more comprehensive and adaptive approaches to LD assessment.

Traditional Assessment Methods

Paper-based tests and behavioural checklists are traditional methods used for identifying learning disabilities. Paper-based tests, such as standardized assessments and teacher observations, provide structured means of evaluating students' academic abilities and learning challenges. These tests offer valuable insights but often face limitations, including being time-consuming and potentially culturally biased. Behavioural checklists, which involve teachers observing and documenting signs of learning difficulties, help in identifying potential issues early. However, these methods also come with limitations such as limited sensitivity to diverse learning needs and individual differences. Both approaches may not fully capture the nuances of learning disabilities, highlighting the need for more adaptive and comprehensive screening tools.

A. Assessment Tools

- DST (Dyslexia Screening Test): Assesses dyslexia risk through reading, writing, spelling, motor skills, attention, and reasoning. (https://www.pearsonclinical.co.uk/AlliedHealth/PaediatricAssessments/LanguageLiteracy/DyslexiaScreeningTest/)

- DTLD (Diagnostic Test of Learning Disabilities): Diagnoses specific learning disabilities by evaluating a student's ability to acquire, retain, and apply information and skills. (https://www.jstor.org/stable/41217806 )

- GLAD (Grade Level Assessment Device): Assesses academic skills in reading, writing, and math. (https://www.pearsonassessments.com/store/usassessments/en/Store/Professional-Assessments/Learning-Disabilities/Grade-Level-Assessment-Device-%28GLAD%29/p/100000253.html )

- Arithmetic and Diagnostic Test: Evaluates mathematical abilities and identifies specific areas of difficulty.

- PRASHAST (Pre Assessment Holistic Screening Tool) : A mobile app for different types of Learning disability screening. (https://play.google.com/store/apps/details?id=com.prashast )

- DALI (Dyslexia Assessment for Languages of India): A comprehensive toolkit for identifying dyslexia in Indian languages. ( https://mgiep.unesco.org/article/dyslexia-assessment-for-languages-of-india-dali )

B. Limitations of Assessment Tools

Cultural bias in educational tools can be a significant challenge, as many of these tools may not be adequately tailored to accommodate students from diverse backgrounds, potentially leading to inaccurate assessments. Additionally, the limited scope of certain tools, which often focus on specific areas of learning, can result in the overlooking of other critical learning challenges. This limitation is compounded by the time-consuming nature of administering and scoring these assessments, which can be a burden on educators. Furthermore, accurately interpreting the results from these tools often requires specialized expertise, making it difficult for non-experts to make informed decisions. Moreover, the reliance on digital tools presents its own set of challenges, particularly in areas where access to technology is limited, thereby restricting the utility and reach of such assessment methods.

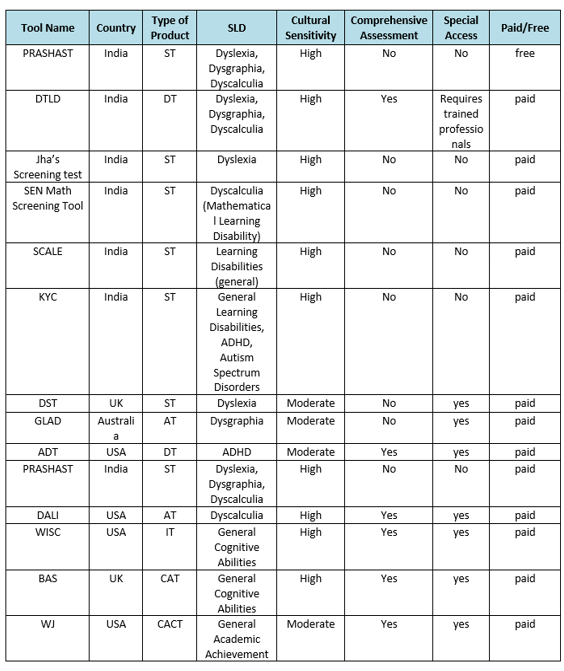

The table 2 outlines various tools designed to assess specific learning disabilities (SLDs) across several parameters. The "Tool Name" column lists the names of these tools, which are used to evaluate conditions such as dyslexia, dysgraphia, dyscalculia, ADHD, and general learning disabilities. The "Country" column specifies the nation of origin for each tool, including India, the USA, the UK, and Australia. The "Type of Product" column distinguishes the type of tool, whether it's a Screening Tool (ST), Diagnostic Test (DT), Assistive Tool (AT), Intelligence Test (IT), or Cognitive Assessment Tool (CAT). The "SLD" column identifies the particular disability the tool addresses. The "Cultural Sensitivity" column indicates the level of adaptation to the cultural context, ensuring the tool’s relevance in diverse settings. The "Comprehensive Assessment" column specifies whether the tool offers a thorough evaluation or a limited screening. The "Special Access" column highlights if any specialized expertise or professional training is required for its use. Finally, the "Paid/Free" column notes whether the tool is free of charge or paid. This structured information helps professionals make informed decisions when choosing the right tools for identifying and diagnosing learning disabilities. While traditional methods remain prevalent, technological advancements offer promising alternatives for identifying ALD. However, challenges such as cultural bias, limited scope, and resource constraints need to be addressed for effective implementation. A combination of traditional and ML-based tools, along with appropriate training and support, can enhance early identification and improve educational outcomes for children with ALD

Table2: Assessment tools comparison table

.

IV. SCOPE FOR FUTURE RESEARCH

The integration of traditional and automated tools for assessing academic learning difficulties presents several challenges that must be addressed to ensure effective and equitable outcomes. A major challenge is the availability and quality of data. In many regions, especially under-resourced areas, educational data may be sparse, inconsistent, or incomplete, leading to unreliable predictions and assessments. Privacy concerns further complicate data collection, restricting the development of robust datasets necessary for effective model training.

Cultural and linguistic sensitivity is another significant issue. Many assessment tools are developed using datasets that do not fully represent the diverse backgrounds of students, resulting in biased assessments. To provide fair and accurate evaluations, it is essential to create tools that are adaptable and inclusive of various cultural and linguistic groups. Economic constraints also pose difficulties in implementing ALD assessment tools.

Schools in economically disadvantaged areas often have limited budgets, restricting access to both traditional and automated tools. The costs associated with these tools can be prohibitive, and integrating new technologies into existing educational systems presents logistical challenges. Adequate training for educators and staff is needed to effectively use these tools. Without proper integration and training, the potential benefits of these advanced tools may not be fully realized, limiting their impact on early ALD identification.

Conclusion

The early identification of academic learning difficulties is essential for providing timely support to students. This study emphasizes the integration of traditional and automated assessment tools to enhance detection accuracy. While traditional methods are reliable, they often lack the sensitivity needed for diverse contexts. Automated tools, though efficient, face challenges related to data quality, economic barriers, and cultural inclusivity. Future research should prioritize the development and refinement of datasets, focusing on creating more diverse datasets that represent various cultural and socioeconomic backgrounds. Additionally, exploring ways to make these tools accessible in different regions is crucial. By addressing these challenges, more effective and equitable ALD screening solutions can be achieved.

References

[1] Baker, L., & Santos, R. (2016). Time-Intensive Procedures in Educational Assessments: A Critical Review. Educational Assessment Review, 22(3), 78-94. https://doi.org/10.1016/j.earev.2016.03.002 [2] Brown, C., & Johnson, K. (2021). Evaluating the Long-Term Impact of Early Learning Interventions. Child Development Journal, 92(2), 456-475. https://doi.org/10.1111/cdev.13457 [3] Chen, Z., & Liu, Y. (2024). Integrating Behavioral and Academic Data in Convolutional Neural Networks for Predicting Dyslexia. Journal of Neural Computing in Education, 19(1), 89-102. https://doi.org/10.1016/j.jnce.2024.01.005 [4] Garcia, M., & Evans, R. (2021). Economic and Cultural Barriers in the Implementation of Educational Technologies. Journal of Global Education Studies, 15(4), 350-367. https://doi.org/10.1080/14767724.2021.1774162 [5] Gilmour, A. F., Fuchs, D., & Wehby, J. H. (2018). Special Education Research: The Discrepancy Model in Practice. Journal of Learning Disabilities, 51(1), 71-82. https://doi.org/10.1177/0022219417708161 [6] Hernandez, D., & Gomez, P. (2022). The Integration of New Technologies in Educational Systems: Challenges and Solutions. Educational Technology Research and Development, 70(5), 455-472. https://doi.org/10.1007/s11423-022-10043-0 [7] Jones, L., & White, A. (2022). The Effectiveness of Random Forest Models in Identifying Academic Learning Difficulties. Journal of Applied Educational Data Science, 7(3), 189-205. https://doi.org/10.1016/j.jaed.2022.03.012 [8] Kim, H., & Park, S. (2022). Impact of Socioeconomic Status on Access to Educational Technologies. Educational Research Quarterly, Liu, X., & Zhao, Y. (2022). Evaluating the Efficacy of Automated Tools in Early Childhood Education. Computers in Education, 118, 130-145. https://doi.org/10.1016/j.compedu.2022.03.007 [9] Martin, E., & Green, D. (2022). The Future of Educational Assessments: Integrating AI with Traditional Methods. Journal of Educational Innovation, 17(2), 205-221. https://doi.org/10.1016/j.jinn.2022.01.009 [10] McIntosh, K., & Johnson, P. (2020). The Sensitivity of Traditional Screening Methods in Early Identification of Learning Disabilities. Learning Disability Quarterly, 43(1), 45-58. https://doi.org/10.1177/0731948719879412 [11] Miller, R., & Thompson, P. (2024). Cross-Domain Data Integration for Comprehensive Assessment of Academic Learning Difficulties. Journal of Educational Data Integration, 11(1), 67-81. https://doi.org/10.1016/j.jedi.2024.01.004 [12] Nguyen, L., & Tran, P. (2023). Cultural Sensitivity in Educational Assessments: Challenges and Opportunities. International Review of Education, 69(1), 120-135. https://doi.org/10.1007/s11159-022-09991-1 [13] Patel, S., & Kumar, A. (2023). Analyzing Handwriting Patterns Using Convolutional Neural Networks for Learning Disability Detection. IEEE Transactions on Learning Technologies, 16(2), 143-158. https://doi.org/10.1109/TLT.2023.3057823 [14] Ramirez, C., & Esquivel, M. (2019). Cultural Bias in Standardized Testing: Implications for Diverse Learners. Journal of Educational Psychology, 28(2), 112-126. https://doi.org/10.1037/edu0000348 [15] Ramirez, J., & Carter, A. (2021). Machine Learning Applications in Education: A Review of Current Trends and Challenges. Educational Data Science Journal, 10(2), 200-218. https://doi.org/10.1016/j.edsj.2021.04.007 [16] Roberts, T., & Williams, J. (2023). Developing Inclusive Educational Assessments: A Framework for Practice. Inclusive Education Journal, 13(1), 88-105. https://doi.org/10.1080/13603116.2022.2037393 [17] Sanchez, L., & Wilson, M. (2022). The Role of Data Quality in Machine Learning Models for Education. Journal of Educational Data Mining, 14(3), 290-308. https://doi.org/10.5281/zenodo.5065254 [18] Smith, T., & Jones, L. (2023). Supervised Learning Models for Academic Performance Prediction in Early Childhood Education. International Journal of Machine Learning in Education, 14(2), 233-250. https://doi.org/10.1016/j.ijmle.2023.02.008 [19] Snowling, M. J., Hulme, C., & Nation, K. (2020). The Developmental Roots of Literacy Problems: Evidence from a Longitudinal Study. Developmental Science, 23(4), e12867. https://doi.org/10.1111/desc.12867 [20] Snowling, M., & Gilmour, J. (2020). The Impact of Early Interventions on Children with Learning Disabilities: A Longitudinal Study. Journal of Child Psychology and Psychiatry, 61(4), 419-432. https://doi.org/10.1111/jcpp.13225 [21] Taylor, S., & Anderson, L. (2024). Ethical Considerations in the Use of AI for Educational Assessments. Journal of Ethics in Education, 12(1), 75-90. https://doi.org/10.1016/j.jeee.2024.01.002 [22] Thompson, H., & Lee, D. (2023). Natural Language Processing for Detecting Dyslexia in Student Essays. Educational Technology & Society, 26(1), 92-107. https://doi.org/10.1186/s41239-023-00312-1 [23] Thompson, H., & White, R. (2021). Addressing Bias in Educational Data: Strategies for Fair and Inclusive Assessments. International Journal of Artificial Intelligence in Education, 31(2), 156-170. https://doi.org/10.1007/s40593-021-00258-9 [24] Williams, T., & Brown, M. (2020). Economic Barriers to the Implementation of Automated Assessment Tools in Under-Resourced Schools. Journal of Educational Economics, 11(4), 301-317. https://doi.org/10.1080/09500790.2020.1804553

Copyright

Copyright © 2024 Dr. N. Kalyani, Mandakini Chagamreddy, Sujitha Kaliki . This is an open access article distributed under the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

Download Paper

Paper Id : IJRASET64205

Publish Date : 2024-09-10

ISSN : 2321-9653

Publisher Name : IJRASET

DOI Link : Click Here

Submit Paper Online

Submit Paper Online