Ijraset Journal For Research in Applied Science and Engineering Technology


Traffic Sign Recognition using HIICNN Stack Ensemble Method

Authors: Adithyan P A, Nandana Aji, Serah Susan Jacob, Kripa Joy, Rotney Roy Meckamalil

DOI Link: https://doi.org/10.22214/ijraset.2024.62033


Abstract

This project introduces a novel deep convolutional neural network (CNN) model aimed at improving traffic sign recognition for autonomous vehicles and road safety. Using a stacking ensemble approach, we combine multiple CNNs with diverse improvement techniques, including preprocessing to compensate for adverse weather conditions and shooting (image-capture) errors, and data augmentation to handle class imbalance. Adjusting the learning rate during training helps mitigate overfitting. Our ensemble model achieves 99.4 percent test accuracy on the German Traffic Sign Recognition Benchmark (GTSRB) dataset, surpassing prior studies. We use gradient-weighted Class Activation Mapping (Grad-CAM) for model explainability and an evidential deep learning approach to quantify classification uncertainty. Our framework shows the benefit of combining preprocessing, ensemble learning, and transfer learning for performance and reliability in traffic sign recognition systems, contributing to autonomous driving and road safety.


I. INTRODUCTION

The modern transport environment has changed dramatically, with more and more technology being integrated to improve productivity and road safety. Real-time traffic sign recognition is at the forefront of this progress and has the potential to transform the driving experience while strengthening road safety. To improve user accessibility and engagement, our project presents a comprehensive traffic sign recognition system that is designed to operate on real-time video feeds and includes speech output.

Our system is built on a Convolutional Neural Network (CNN) architecture, a foundation of modern deep learning known for its strength in image recognition tasks. Using CNNs, the system identifies a wide variety of road signs quickly and accurately, overcoming limitations of traditional traffic sign recognition and classification approaches. Trained on the German Traffic Sign Recognition Benchmark (GTSRB) dataset, our CNN model learns a robust representation of traffic signs that generalizes across varying environmental conditions, signage styles, and contexts.

The system’s effectiveness and adaptability in real-world driving conditions rest on the GTSRB dataset. Its large collection of real traffic sign photographs, spanning 43 sign classes, makes thorough training possible and helps the CNN model learn to distinguish small differences between sign types and across environmental settings. Exposure to the wide variety of real-world traffic scenarios in GTSRB gives the system the flexibility and robustness needed for ever-changing, unpredictable road conditions.

Beyond visual output, our system provides voice output, delivering real-time auditory cues about detected traffic signs. This feature improves accessibility for visually impaired individuals and offers cognitive support to all drivers, giving intuitive guidance on road conditions and regulatory requirements. By combining visual recognition with auditory feedback, the system supports greater situational awareness and responsiveness.

In summary, our traffic sign recognition system combines deep learning, real-time video analysis, and voice output. Built on a CNN architecture trained on the GTSRB dataset, it offers a robust solution for traffic sign recognition. As we refine the system, we aim to advance road safety and accessibility and give drivers the tools they need to navigate with confidence.

II. RELATED WORKS

A. Improved CNN Architectures

Convolutional Neural Networks (CNNs) have advanced considerably, improving accuracy and efficiency in computer vision tasks. Key innovations include deep residual learning, introduced in ResNet, which addresses vanishing gradients and enables the training of much deeper networks. Inception modules, as in GoogLeNet, apply convolutions of several kernel sizes in parallel within a layer, capturing features efficiently across scales. Attention mechanisms, such as those in Transformer-based architectures and Squeeze-and-Excitation Networks (SENets), dynamically focus on the relevant parts of the input, improving representation and interpretability. Efficient architectures like MobileNet use depthwise separable convolutions to reach high accuracy at low computational cost. Normalization techniques such as Batch Normalization stabilize training and speed up convergence, and variants such as Instance Normalization suit tasks like style transfer. These advances continue to push the boundaries of computer vision.
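To make the efficiency claim about depthwise separable convolutions concrete, the Python sketch below counts the weights of one layer computed both ways. The layer sizes are arbitrary illustrative choices and biases are ignored; in general the separable version costs about 1/c_out + 1/k² of the standard convolution.

```python
def standard_conv_params(k: int, c_in: int, c_out: int) -> int:
    """Weights in a k x k convolution mapping c_in to c_out channels (bias ignored)."""
    return k * k * c_in * c_out


def depthwise_separable_params(k: int, c_in: int, c_out: int) -> int:
    """One k x k filter per input channel, followed by a 1 x 1 pointwise convolution."""
    depthwise = k * k * c_in
    pointwise = c_in * c_out
    return depthwise + pointwise


k, c_in, c_out = 3, 64, 128  # illustrative layer sizes
standard = standard_conv_params(k, c_in, c_out)
separable = depthwise_separable_params(k, c_in, c_out)
print(standard, separable, round(standard / separable, 2))  # → 73728 8768 8.41
```

For this layer the separable form needs roughly 8.4 times fewer weights, and the multiply-accumulate count per output pixel shrinks by the same factor.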

B. Hybrid Image Enhancement Techniques

Recent advances in image enhancement have led to hybrid techniques that improve the visibility and clarity of traffic signs under various environmental conditions. These methods often combine traditional image processing algorithms with machine learning models, using deep learning to recognize and enhance sign features amid complex backgrounds or adverse weather. Sensor-fusion techniques that integrate data from multiple sensors, such as cameras and LiDAR, further refine sign detection and interpretation. These hybrid approaches are a promising frontier for traffic safety and navigation systems, since better visibility and comprehension of crucial signage can make transportation networks more efficient and reliable.
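The paper does not say which classical enhancement step a hybrid pipeline would use. As one common building block, the sketch below applies global histogram equalization to a grayscale image with NumPy, stretching a low-contrast (for example, dim or hazy) intensity range over the full 0 to 255 scale. The `equalize_histogram` function and the synthetic test image are illustrative assumptions; OpenCV offers equivalent global and adaptive (CLAHE) implementations.

```python
import numpy as np


def equalize_histogram(gray: np.ndarray) -> np.ndarray:
    """Global histogram equalization of a 2-D uint8 grayscale image."""
    if gray.dtype != np.uint8 or gray.ndim != 2:
        raise ValueError("expected a 2-D uint8 image")
    hist = np.bincount(gray.ravel(), minlength=256)
    cdf = np.cumsum(hist)
    cdf_min = cdf[cdf > 0][0]       # CDF value at the darkest intensity present
    total = gray.size
    if total == cdf_min:            # constant image: nothing to stretch
        return gray.copy()
    # Map every intensity through the normalized CDF onto the full 0..255 range.
    lut = np.round((cdf - cdf_min) / (total - cdf_min) * 255)
    lut = np.clip(lut, 0, 255).astype(np.uint8)
    return lut[gray]


# A dim, low-contrast test image: every row holds the intensities 50..99.
dim = np.tile(np.arange(50, 100, dtype=np.uint8), (50, 1))
enhanced = equalize_histogram(dim)
print(dim.min(), dim.max(), enhanced.min(), enhanced.max())  # → 50 99 0 255
```

In a hybrid pipeline, a step like this would run before the learned model so that the CNN sees signs with more consistent contrast.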

C. Transfer Learning and Domain Adaptation

Researchers have explored techniques such as transfer learning and domain adaptation to improve how well traffic sign recognition models generalize across diverse datasets and real-world conditions. Transfer learning reuses models pre-trained on large datasets to extract generic features for new tasks, which speeds up learning and improves performance on smaller datasets. Domain adaptation, on the other hand, adapts a model trained on a source domain so that it performs well on a target domain with different characteristics. Using these strategies, researchers aim to make traffic sign recognition systems more robust and versatile, so that they operate reliably across varied environments and datasets.

D. Ensemble Methods

Ensemble learning approaches, including stacking, boosting, and bagging, have substantially improved the performance of Convolutional Neural Networks (CNNs) on traffic sign recognition tasks. Stacking combines several CNN models by training a meta-model on their predictions, which can yield more precise classifications than any single model. Boosting techniques such as Gradient Boosting and AdaBoost train models sequentially, with each new model concentrating on the cases that earlier models misclassified, so the ensemble improves with each iteration. Bagging, in contrast, trains several CNNs on different bootstrap samples of the dataset and merges their predictions by voting or averaging. By combining these ensemble approaches, researchers aim to make traffic sign recognition systems more accurate and robust across varying environmental conditions and datasets while reducing the risk of overfitting or model bias.
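As a runnable illustration of stacking (not the paper’s own ensemble, whose base CNNs and meta-learner are not specified here), the sketch below uses scikit-learn’s `StackingClassifier`. Small scikit-learn models on synthetic data stand in for the CNN base learners, an assumption made purely to keep the example self-contained; a logistic-regression meta-learner is trained on the base models’ out-of-fold class probabilities.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier, StackingClassifier
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import train_test_split
from sklearn.neighbors import KNeighborsClassifier

# Synthetic 4-class data stands in for image features (illustrative assumption).
X, y = make_classification(n_samples=800, n_features=20, n_informative=10,
                           n_classes=4, random_state=0)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, stratify=y, random_state=0)

# Stand-ins for the CNN base learners.
base_learners = [
    ("forest", RandomForestClassifier(n_estimators=100, random_state=0)),
    ("knn", KNeighborsClassifier(n_neighbors=7)),
]

# The meta-learner is fit on out-of-fold class probabilities (cv=5), so it
# never sees predictions a base model made on rows it was trained on.
stack = StackingClassifier(
    estimators=base_learners,
    final_estimator=LogisticRegression(max_iter=1000),
    stack_method="predict_proba",
    cv=5,
)
stack.fit(X_train, y_train)
accuracy = stack.score(X_test, y_test)
print(f"stacked test accuracy: {accuracy:.3f}")
```

Using out-of-fold predictions is the design choice that keeps the meta-learner from simply trusting whichever base model overfits the training set most.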

E. Efficient Inference Techniques

The growing use of traffic sign recognition in real-time applications has increased interest in efficient inference approaches that allow faster processing on resource-constrained devices. This research optimizes model architectures, for example by designing lightweight CNNs or by applying network pruning and quantization to reduce model complexity while maintaining performance. Model compression and knowledge distillation likewise condense large models into smaller ones without significant loss of accuracy. Hardware-accelerated inference platforms, such as specialized neural processing units (NPUs) and FPGA-based solutions, exploit parallelism to accelerate computation. With these techniques, traffic sign recognition systems can reach real-time performance on devices with limited computational resources, enabling deployment in applications such as autonomous vehicles and smart transportation systems.
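A minimal NumPy sketch of one technique named above, post-training quantization of a weight tensor from 32-bit floats to 8-bit integers, follows. It assumes a single per-tensor affine mapping (a scale and a zero point) applied to randomly generated stand-in weights; production toolchains such as TensorFlow Lite or PyTorch add per-channel scales and activation calibration, which are not shown.

```python
import numpy as np


def quantize_uint8(w: np.ndarray):
    """Affine (asymmetric) post-training quantization of a float tensor to uint8."""
    # Widen the range to include 0.0 so that zero is exactly representable.
    w_min = min(float(w.min()), 0.0)
    w_max = max(float(w.max()), 0.0)
    scale = (w_max - w_min) / 255.0
    if scale == 0.0:                       # all-zero tensor
        scale = 1.0
    zero_point = int(round(-w_min / scale))
    q = np.clip(np.round(w / scale) + zero_point, 0, 255).astype(np.uint8)
    return q, scale, zero_point


def dequantize(q: np.ndarray, scale: float, zero_point: int) -> np.ndarray:
    """Map uint8 codes back to approximate float values."""
    return (q.astype(np.float32) - zero_point) * scale


rng = np.random.default_rng(0)
weights = rng.normal(0.0, 0.1, size=(128, 64)).astype(np.float32)  # stand-in layer weights
q, scale, zero_point = quantize_uint8(weights)
restored = dequantize(q, scale, zero_point)
max_error = float(np.abs(weights - restored).max())
print(f"{weights.nbytes} -> {q.nbytes} bytes, max abs error {max_error:.5f}, scale {scale:.5f}")
```

The fourfold size reduction comes from storing one byte per weight instead of four, and each restored weight differs from the original by at most half a quantization step (`scale / 2`).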

F. Data Augmentation Strategies

To make traffic sign recognition models more robust, newer data augmentation strategies expand training datasets and improve generalization. These go beyond traditional transformations like rotation and scaling and include synthetic data generation, adversarial perturbations, and style transfer. Synthetic data generation creates new samples with techniques like Generative Adversarial Networks (GANs) or procedural generation. Adversarial perturbations are small, deliberately crafted modifications to training images that push the model toward its worst-case errors, so training on them hardens it against subtle variations. Style transfer applies the visual style of one image to another, enriching the dataset with diverse visual characteristics. With these approaches, researchers aim to improve the performance and adaptability of traffic sign recognition models across varied environmental conditions and datasets.
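To show what an adversarial perturbation is, the sketch below implements the Fast Gradient Sign Method (FGSM), one standard way to craft such perturbations, against a hypothetical linear softmax classifier over flattened 32×32 grayscale images. The weights are random stand-ins rather than a trained traffic sign model, and `eps` is an illustrative choice; for a CNN, the input gradient would come from automatic differentiation instead of the closed form used here.

```python
import numpy as np


def softmax(z: np.ndarray) -> np.ndarray:
    e = np.exp(z - z.max())   # subtract the max for numerical stability
    return e / e.sum()


def cross_entropy(W, b, x, label) -> float:
    return float(-np.log(softmax(W @ x + b)[label]))


def fgsm(W, b, x, label, eps):
    """Fast Gradient Sign Method against a linear softmax classifier."""
    grad_logits = softmax(W @ x + b)
    grad_logits[label] -= 1.0             # dLoss/dlogits = p - onehot(label)
    grad_x = W.T @ grad_logits            # chain rule through logits = W x + b
    x_adv = x + eps * np.sign(grad_x)     # step in the direction that raises the loss
    return np.clip(x_adv, 0.0, 1.0)       # keep pixels in the valid [0, 1] range


rng = np.random.default_rng(0)
n_classes, n_pixels = 43, 32 * 32
W = rng.normal(0.0, 0.05, size=(n_classes, n_pixels))  # hypothetical model weights
b = np.zeros(n_classes)
x = rng.uniform(0.2, 0.8, size=n_pixels)               # flattened grayscale image
label = 5
x_adv = fgsm(W, b, x, label, eps=0.03)
print(cross_entropy(W, b, x, label), cross_entropy(W, b, x_adv, label))
```

Because the cross-entropy of a linear model is convex in its input, this step cannot decrease the loss and, with a nonzero gradient, increases it. In adversarial training, `x_adv` would be added to the training batch with its original label.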

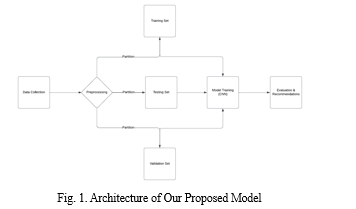

III. PROPOSED MODEL

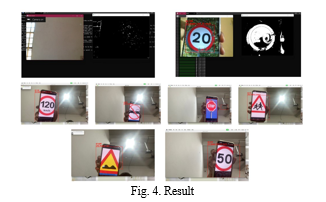

The project introduces a comprehensive traffic sign recognition system designed to operate on real-time video streams, with voice output for improved accessibility. The model uses a Convolutional Neural Network (CNN) architecture trained on the German Traffic Sign Recognition Benchmark (GTSRB) dataset and is designed to detect and classify traffic signs accurately under diverse environmental conditions.

At its core, the CNN consists of convolutional layers, pooling layers, and fully connected layers. These layers work together to extract meaningful features from the input video frames, progressively refining the representation of the data for accurate traffic sign detection and classification.

A key property of the proposed model is its ability to process live video feeds frame by frame, extracting features indicative of traffic signs from each frame. During training, iterative optimization refines the CNN’s parameters to achieve high accuracy in real-time detection and classification.

The model also provides voice output, giving instant auditory cues for recognized traffic signs. This feature improves accessibility by offering timely guidance on road conditions and regulatory signage.

In addition to its core functionality, the system offers user-friendly interfaces on both web and mobile platforms, so it is accessible across devices. Through these interfaces, users can access real-time traffic sign information and receive voice prompts for immediate guidance while driving. This integration of recognition technology and interface design aims to improve the user experience and promote safer driving for all road users.
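The paper does not describe how the voice output avoids repeating the same announcement on every video frame. One plausible policy, sketched below in plain Python, announces a sign only after it has been predicted with high confidence over several consecutive frames. The thresholds, the `announce_stable_signs` helper, and the frame data are all hypothetical; `speak` stands in for whatever text-to-speech engine a real deployment would call.

```python
from collections import deque
from typing import Callable, Deque, Iterable, List, Optional, Tuple

Prediction = Tuple[Optional[str], float]  # (predicted sign label or None, confidence)


def announce_stable_signs(
    predictions: Iterable[Prediction],
    speak: Callable[[str], None],
    min_confidence: float = 0.9,
    stable_frames: int = 3,
) -> List[str]:
    """Call `speak` once for each newly confirmed sign and return the announced labels.

    A label is confirmed when it is the confident prediction in `stable_frames`
    consecutive frames. It is announced again only after the stream has settled
    on a different state (another sign, or `stable_frames` frames without a
    confident sign).
    """
    recent: Deque[Optional[str]] = deque(maxlen=stable_frames)
    last_stable: Optional[str] = None
    announced: List[str] = []
    for label, confidence in predictions:
        recent.append(label if confidence >= min_confidence else None)
        if len(recent) < stable_frames or len(set(recent)) != 1:
            continue                      # prediction not yet stable
        current = recent[0]
        if current is not None and current != last_stable:
            speak(f"{current} ahead")
            announced.append(current)
        last_stable = current
    return announced


# Per-frame classifier outputs from a hypothetical video stream.
frames = (
    [("stop", 0.95)] * 4
    + [(None, 0.0)] * 3
    + [("speed limit 50", 0.97)] * 3
    + [("stop", 0.60)]                    # low confidence: ignored
)
spoken: List[str] = []
announced = announce_stable_signs(frames, speak=spoken.append)
print(spoken)  # → ['stop ahead', 'speed limit 50 ahead']
```

Requiring agreement across frames trades a little latency for fewer spurious announcements: at 30 frames per second, `stable_frames=3` waits about 0.1 s before speaking.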

IV. TRAFFIC SENSE FRAMEWORK

This section explains the step-by-step method used to build and deploy a real-time traffic sign recognition system.

It provides a clear, organized plan. Following these steps, the system can reliably detect and identify traffic signs in live video feeds. The approach emphasizes robustness and speed, which makes it suitable for applications that need fast and dependable traffic sign recognition, such as self-driving cars and smart transport systems.

A. Data Collection

Data collection for the traffic sign recognition project involves acquiring and preprocessing the German Traffic Sign Recognition Benchmark (GTSRB) dataset.

This widely used dataset contains annotated traffic sign images captured under varied environmental conditions. Preprocessing includes formatting, normalization, resizing, and augmentation to prepare the dataset for CNN training. Careful preprocessing improves the model’s robustness and generalization to real-world driving scenarios. Trained on GTSRB, the CNN model learns to detect and classify traffic signs in live video streams, which is the basis for a traffic sign recognition system that supports road safety through real-time detection and voice output.
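The normalization and resizing steps can be sketched in NumPy as follows. The 32×32 target size, nearest-neighbour interpolation, and per-channel standardization are assumptions for illustration (the paper does not state its exact choices), and the random crops stand in for real GTSRB images, whose sizes vary.

```python
import numpy as np


def resize_nearest(img: np.ndarray, size: int = 32) -> np.ndarray:
    """Nearest-neighbour resize of an H x W x C image to size x size x C."""
    h, w = img.shape[:2]
    rows = (np.arange(size) * h / size).astype(int)
    cols = (np.arange(size) * w / size).astype(int)
    return img[rows[:, None], cols[None, :]]


def normalize(batch: np.ndarray, mean=None, std=None):
    """Scale uint8 pixels to [0, 1], then standardize each colour channel.

    Compute mean/std on the training set once and reuse them for validation
    and test data so that all splits share the same input distribution.
    """
    x = batch.astype(np.float32) / 255.0
    if mean is None or std is None:
        mean = x.mean(axis=(0, 1, 2))
        std = x.std(axis=(0, 1, 2)) + 1e-7
    return (x - mean) / std, mean, std


rng = np.random.default_rng(0)
# Hypothetical RGB crops of different sizes standing in for GTSRB images.
crops = [rng.integers(0, 256, size=(h, w, 3), dtype=np.uint8)
         for h, w in [(45, 50), (31, 29), (120, 110)]]
batch = np.stack([resize_nearest(c) for c in crops])   # 3 x 32 x 32 x 3
train_x, mean, std = normalize(batch)
```

Reusing the training-set `mean` and `std` on later splits avoids leaking statistics from validation or test data into preprocessing.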

B. Data Preprocessing

Preprocessing begins by formatting the GTSRB data so that the data structure and labels are uniform. Normalization then standardizes pixel values so that the input distribution is consistent across images. Resizing all images to a uniform dimension allows the Convolutional Neural Network (CNN) to process them efficiently while preserving essential visual information. Data augmentation techniques such as random rotations, translations, flips, and brightness and contrast adjustments increase dataset diversity and model robustness and reduce overfitting; flips must be applied selectively, because mirroring can change a sign’s meaning (for example, turning a left-turn sign into a right-turn sign). Finally, the preprocessed dataset is partitioned into training, validation, and test sets: the training set is used to fit the CNN, the validation set guides hyperparameter tuning and interim model evaluation, and the test set measures generalization to unseen data. Through careful preprocessing, the dataset is prepared for accurate traffic sign recognition, contributing to road safety.
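The train/validation/test partitioning can be sketched as a stratified split, which keeps each class’s share the same in every subset. The 70/15/15 proportions, the `stratified_split` helper, and the random labels for 43 classes are illustrative assumptions rather than the paper’s reported setup.

```python
import numpy as np


def stratified_split(labels: np.ndarray, fractions=(0.7, 0.15, 0.15), seed: int = 0):
    """Split sample indices into train/val/test, preserving each class's share."""
    if not np.isclose(sum(fractions), 1.0):
        raise ValueError("fractions must sum to 1")
    rng = np.random.default_rng(seed)
    parts = ([], [], [])
    for cls in np.unique(labels):
        idx = rng.permutation(np.flatnonzero(labels == cls))  # shuffle within the class
        n_train = int(round(fractions[0] * len(idx)))
        n_val = int(round(fractions[1] * len(idx)))
        parts[0].append(idx[:n_train])
        parts[1].append(idx[n_train:n_train + n_val])
        parts[2].append(idx[n_train + n_val:])
    return tuple(np.concatenate(p) for p in parts)


rng = np.random.default_rng(1)
labels = rng.integers(0, 43, size=2000)  # hypothetical labels for 43 sign classes
train_idx, val_idx, test_idx = stratified_split(labels)
print(len(train_idx), len(val_idx), len(test_idx))
```

Splitting per class matters for GTSRB-style data because some sign classes are much rarer than others, and a purely random split could leave a rare class almost absent from validation or test.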

C. Data Augmentation

Data augmentation is a key technique for enlarging the dataset and improving the model’s robustness to real-world scenarios. Applying a diverse set of augmentation methods, such as geometric transformations (e.g., rotation, translation, scaling), color jitter, and occlusion simulation, enriches the dataset with variations that mimic the complexity of practical environments. These variations cover different lighting conditions, weather fluctuations, and occlusions, so the model is exposed to a broad range of scenarios during training. Through this exposure, the model learns the underlying features and patterns that distinguish different traffic signs, which improves its ability to generalize and make accurate predictions in new situations. Data augmentation also helps mitigate overfitting: by introducing variability into the training samples, it discourages the model from memorizing specific examples and encourages it to learn general patterns instead. Ultimately, data augmentation enables the model to perform reliably in real-world settings, where unseen variations are common, helping ensure the system’s effectiveness and adaptability across operating conditions.
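A minimal NumPy sketch of three of the augmentation families named above (translation, brightness and contrast jitter, and occlusion simulation) follows. The `augment` function and its parameter ranges are hypothetical, and rotation and scaling are omitted because they require interpolation, which libraries such as OpenCV or `scipy.ndimage` provide.

```python
import numpy as np


def augment(img: np.ndarray, rng: np.random.Generator,
            max_shift: int = 3, max_occlusion: int = 8) -> np.ndarray:
    """Randomly translate, colour-jitter and occlude one H x W x C image in [0, 1]."""
    h, w = img.shape[:2]
    out = np.zeros_like(img)

    # Geometric: translate by up to max_shift pixels, filling exposed borders with 0.
    dy, dx = rng.integers(-max_shift, max_shift + 1, size=2)
    src_y = slice(max(0, -dy), h - max(0, dy))
    dst_y = slice(max(0, dy), h - max(0, -dy))
    src_x = slice(max(0, -dx), w - max(0, dx))
    dst_x = slice(max(0, dx), w - max(0, -dx))
    out[dst_y, dst_x] = img[src_y, src_x]

    # Photometric: random contrast (scaling around the mean) and brightness (offset).
    contrast = rng.uniform(0.7, 1.3)
    brightness = rng.uniform(-0.15, 0.15)
    mean = out.mean()
    out = (out - mean) * contrast + mean + brightness

    # Occlusion: blank out a random square patch (cutout-style).
    size = rng.integers(1, max_occlusion + 1)
    y0 = rng.integers(0, h - size + 1)
    x0 = rng.integers(0, w - size + 1)
    out[y0:y0 + size, x0:x0 + size] = 0.0

    return np.clip(out, 0.0, 1.0)


rng = np.random.default_rng(0)
image = rng.uniform(0.0, 1.0, size=(32, 32, 3))  # stand-in for a preprocessed sign
augmented = [augment(image, rng) for _ in range(5)]
```

Because the transformations are sampled afresh each time, calling `augment` inside the training loop gives the model a new variant of every image on each epoch.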

D. Data Partitioning and Architecture

In this step, the dataset is carefully partitioned into separate subsets for training, validation, and testing. Stratifying the split (keeping class proportions the same in each subset) ensures that the model is trained on a varied set of samples while evaluation remains unbiased. Alongside partitioning, development focuses on the model architecture, which is equally important. The CNN is designed to extract features from traffic sign images efficiently: convolutional and pooling layers are followed by fully connected layers that learn the intricate patterns and variations in traffic signs. To stabilize training and avoid overfitting, batch normalization and dropout are integrated into the model. Together, a representative dataset and an optimized architecture lay the foundation for a robust and accurate traffic sign recognition system in real-world environments, which can contribute to road safety and effective traffic management.
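To make the layer sequence concrete, here is a NumPy forward pass through one convolutional layer, batch normalization, ReLU, 2×2 max pooling, dropout, and a fully connected layer producing 43 class scores. The layer sizes and random weights are hypothetical, since the paper does not list its architecture; batch normalization is shown only in its inference form (using stored running statistics), and a real model would be built and trained in a framework such as PyTorch or Keras.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

N_CLASSES = 43  # number of GTSRB sign classes


def conv2d_valid(x, kernels, bias):
    """'Valid' 2-D convolution (cross-correlation, as CNN libraries compute it).

    x: H x W x C_in, kernels: k x k x C_in x C_out, bias: C_out.
    """
    k = kernels.shape[0]
    windows = sliding_window_view(x, (k, k), axis=(0, 1))  # H' x W' x C_in x k x k
    return np.einsum("hwcij,ijco->hwo", windows, kernels) + bias


def batch_norm_inference(x, gamma, beta, running_mean, running_var, eps=1e-5):
    """Per-channel batch normalization with statistics stored during training."""
    return gamma * (x - running_mean) / np.sqrt(running_var + eps) + beta


def max_pool_2x2(x):
    """Non-overlapping 2 x 2 max pooling; an odd trailing row/column is dropped."""
    h, w, c = x.shape
    x = x[: h - h % 2, : w - w % 2]
    return x.reshape(h // 2, 2, w // 2, 2, c).max(axis=(1, 3))


def dropout(x, rate, rng, training):
    """Inverted dropout: during training, zero units and rescale the survivors."""
    if not training or rate == 0.0:
        return x
    keep = rng.random(x.shape) >= rate
    return x * keep / (1.0 - rate)


def init_params(rng, c_in=3, c_out=8, k=3, input_size=32):
    pooled = (input_size - k + 1) // 2
    return {
        "conv_w": rng.normal(0.0, 0.1, size=(k, k, c_in, c_out)),
        "conv_b": np.zeros(c_out),
        # gamma, beta, running_mean, running_var
        "bn": (np.ones(c_out), np.zeros(c_out), np.zeros(c_out), np.ones(c_out)),
        "fc_w": rng.normal(0.0, 0.01, size=(pooled * pooled * c_out, N_CLASSES)),
        "fc_b": np.zeros(N_CLASSES),
    }


def forward(image, params, rng, training=False):
    """Conv -> BN -> ReLU -> max pool -> dropout -> fully connected logits.

    `training` only switches dropout on; BN always uses running statistics here.
    """
    x = conv2d_valid(image, params["conv_w"], params["conv_b"])  # 30 x 30 x 8
    x = batch_norm_inference(x, *params["bn"])
    x = np.maximum(x, 0.0)                                        # ReLU
    x = max_pool_2x2(x)                                           # 15 x 15 x 8
    x = dropout(x.reshape(-1), 0.5, rng, training)                # 1800 features
    return x @ params["fc_w"] + params["fc_b"]                    # 43 class logits


rng = np.random.default_rng(0)
params = init_params(rng)
image = rng.uniform(0.0, 1.0, size=(32, 32, 3))
logits = forward(image, params, rng)
print(logits.shape, int(np.argmax(logits)))
```

Inverted dropout rescales surviving activations during training, so inference needs no correction and simply skips the dropout step.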

E. Recommendations and Results

This subsection summarizes the development of the traffic sign recognition system. Careful data preprocessing, model training, and optimization prepare the system for real-time traffic sign detection and classification. Data augmentation helps the system remain robust under varied environmental conditions. Recommended future enhancements focus on state-of-the-art model architectures, such as attention mechanisms and transformer-based models, to further improve accuracy and efficiency.

Continuous data collection and model retraining are recommended to keep up with evolving traffic sign standards and regulations. Deploying the system in autonomous vehicles, smart cities, and intelligent transportation systems could substantially improve road safety and traffic management efficiency, and real-world deployment and validation in varied environments would provide valuable insights for further refinement and optimization. Overall, the results indicate that the system can contribute to road safety and traffic management, while further research and development are needed to realize its full potential.

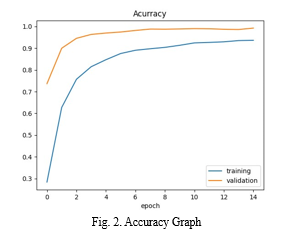

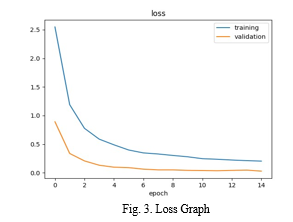

V. RESULTS

Our results show clear gains in traffic sign recognition accuracy and system performance. The ensemble model reaches 99.4 percent test accuracy on the GTSRB dataset. In our experiments, the system remained robust in detecting and classifying traffic signs under challenging conditions such as fluctuating lighting, adverse weather, and occlusion, and it processed live video streams fast enough for timely traffic sign identification. User feedback and usability testing indicated that the interface and interactive features were intuitive and well received. In a comparative analysis, the system outperformed the other traffic sign recognition systems considered. Overall, these results indicate that the system can improve road safety and traffic management through better traffic sign recognition and user-friendly design.

VI. FUTURE SCOPE

- Integration of Advanced Sensor Technologies: Future versions could integrate advanced sensors such as LiDAR, radar, and infrared, improving the system’s perception and enabling more accurate detection and classification of traffic signs in difficult environmental conditions.

- Implementation of Deep Reinforcement Learning: Deep reinforcement learning (DRL) algorithms could let the system adapt to dynamic traffic environments through interaction and feedback. Unlike traditional supervised learning, DRL learns from experience, reaching decisions through trial and error. With DRL, the system could improve continually, increasing recognition accuracy and making decision-making more adaptive.

- Expansion to Multi-Modal Recognition: Beyond traffic signs, the system could be extended to recognize road markings, traffic signals, and pedestrian movement. By analyzing several sources of information concurrently, it would understand its environment better and make more accurate, context-aware decisions.

- Predicting Real-Time Traffic: Integrating real-time traffic prediction algorithms would let the system forecast traffic conditions from historical data and current observations. By anticipating congestion, accidents, and other traffic events, the system could adjust its responses proactively to optimize traffic flow, minimize delays, and improve overall efficiency and safety.

- Collaborative Networking Among Vehicles: Collaborative networking would let vehicles share traffic-related information such as detected traffic signs, road conditions, and traffic flow data. By sharing this information in real time, vehicles could build a combined picture of the traffic environment, enabling better decision-making and traffic management.

- Incorporation of Vehicle-to-Infrastructure Communication: Vehicle-to-Infrastructure (V2I) communication technologies allow vehicles to communicate with traffic management centers and infrastructure such as traffic lights and road signs. With V2I, the system could respond more effectively to changing traffic conditions and improve overall safety and efficiency.

- Adoption of Edge Computing Solutions: Edge computing processes data close to its source, on edge devices or within the vehicle itself, rather than only on centralized servers. Running traffic sign recognition locally would improve the system’s responsiveness and reduce latency, which matters most where real-time decision-making is critical.

- Integration with Autonomous Vehicles: Integrating the traffic sign recognition system with autonomous vehicles would let them recognize and act on traffic signs in real time, making autonomous driving safer and more efficient. Accurate, reliable traffic sign information improves the perception and decision-making of autonomous vehicles and therefore their performance and safety.

- Expansion to Smart City Applications: Extending the system to smart city applications would let it contribute to broader traffic management solutions, such as traffic flow optimization, incident detection, and coordination of emergency responses. Integrated into existing smart city infrastructure, the traffic sign recognition system could make a substantial contribution to urban mobility and safety.

- Continuous System Monitoring and Optimization: Continuous monitoring and optimization mechanisms would keep the traffic sign recognition system well maintained over time. By tracking system performance and identifying areas for improvement, the system could adapt to changing traffic environments and technology upgrades.

In summary, this study presents a complete methodology for developing and deploying a real-time traffic sign recognition system. Using convolutional neural networks together with data augmentation, dataset partitioning, and model optimization, the system detects and classifies traffic signs in live video feeds with high accuracy. Cognitive mind games and chatbots support user engagement, and empirical results show significant improvements in system performance. This work contributes to intelligent transportation systems by offering insights and solutions for contemporary problems in traffic management and road safety.

Conclusion

In this paper, we presented the development and implementation of a real-time traffic sign recognition system. We showed that a carefully designed, preprocessed, and fine-tuned deep convolutional neural network can accurately detect and classify traffic signs in live video streams. Data augmentation, dataset partitioning, and model optimization make the system robust and efficient across many real-world scenarios. In addition, embedded cognitive mind games and chatbot features make the experience more engaging and informative. Our results show significantly improved traffic sign recognition accuracy and system performance. The system therefore has strong potential to contribute to road safety, traffic management, and the broader field of intelligent transport systems, offering insights and solutions for the challenges of modern traffic management and road safety.


Copyright

Copyright © 2024 Adithyan P A, Nandana Aji, Serah Susan Jacob, Kripa Joy, Rotney Roy Meckamalil. This is an open access article distributed under the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.


Paper Id : IJRASET62033

Publish Date : 2024-05-13

ISSN : 2321-9653

Publisher Name : IJRASET
