Ijraset Journal For Research in Applied Science and Engineering Technology

Understanding Artificial Neural Networks and its formation

Authors: Shravani Meshram, Prathmesh Tambakhe, Prof. N. G. Gupta

DOI Link: https://doi.org/10.22214/ijraset.2024.59660

Abstract

A neural network is an information processing system consisting of a large number of simple, highly interconnected processing elements in an architecture inspired by the structure of the cerebral cortex of the brain. As a result, neural networks are often capable of doing things that humans or animals do well but that conventional computers often do poorly. Neural networks have emerged in the past few years as an area of unusual opportunity for research, development and application to a variety of real-world problems. Indeed, neural networks exhibit characteristics and capabilities not provided by any other technology. Examples include reading Japanese Kanji characters and human handwriting, reading typewritten text, compensating for alignment errors in robots, interpreting very "noisy" signals (e.g., electrocardiograms), modelling complex systems that cannot be modelled mathematically, and predicting whether proposed loans will succeed or fail. This report presents a brief tutorial on neural networks and briefly describes several applications.

I. INTRODUCTION

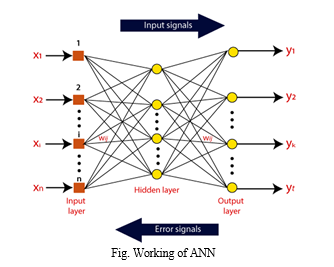

Artificial neural networks (ANNs) are modelled after the human brain: nodes play the role of neurons, and the weighted connections between them play the role of synapses. These networks can be trained to recognize patterns, make predictions, and even generate new content, which makes them applicable to a wide range of problems. The term "artificial neural network" refers to a biologically inspired sub-field of artificial intelligence. An artificial neural network is a computational network based on the biological neural networks that make up the structure of the human brain. Just as the human brain has neurons interconnected with one another, an artificial neural network has neurons, known as nodes, that are linked to each other across the layers of the network. This paper introduces the basic concepts of ANNs: their building blocks, how they are trained (including supervised and unsupervised learning), the main types of network, and their applications. An Artificial Neural Network (ANN) is a computational model inspired by the human brain’s neural structure.

It consists of interconnected nodes (neurons) organized into layers. Information flows through these nodes, and the network adjusts the connection strengths (weights) during training to learn from data, enabling it to recognize patterns, make predictions, and solve various tasks in machine learning and artificial intelligence.

II. WHAT ARE ARTIFICIAL NEURAL NETWORKS?

Artificial neural networks are a type of machine learning algorithm designed to mimic the structure and function of the human brain. Like the brain, these networks are made up of interconnected nodes that process and transmit information. Each node receives input from other nodes and uses this information to produce an output. The term "artificial neural network" is derived from the biological neural networks that make up the structure of the human brain. Just as the human brain has neurons interconnected with one another, artificial neural networks have neurons, known as nodes, that are interconnected across the various layers of the network.

One of the key features of artificial neural networks is their ability to learn and adapt to new data. This is achieved through a process known as training, in which the network is fed large amounts of data and adjusts the weights of its connections to improve its accuracy. As a result, artificial neural networks are capable of performing complex tasks such as image recognition, natural language processing, and financial forecasting.
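
As a minimal sketch of what a single node does, the Python snippet below computes a weighted sum of its inputs and passes it through a sigmoid activation. The function names and the numeric values are hypothetical, chosen only for illustration; the paper does not prescribe a particular activation function at this point.

```python
import math


def sigmoid(z):
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def neuron_output(inputs, weights, bias):
    """Weighted sum of the inputs plus a bias, passed through a sigmoid."""
    z = sum(x * w for x, w in zip(inputs, weights)) + bias
    return sigmoid(z)


# Hypothetical inputs and connection strengths, for illustration only.
inputs = [0.5, -1.0, 2.0]
weights = [0.4, 0.3, -0.2]
bias = 0.1

print(neuron_output(inputs, weights, bias))
```

Training, described in the later sections, amounts to changing `weights` and `bias` so that the node's output moves closer to the desired value.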

III. HOW DO ARTIFICIAL NEURAL NETWORKS WORK?

Artificial neural networks consist of nodes, layers, and weights. The nodes represent neurons, which receive inputs and produce outputs according to their activation function. The layers organize the nodes into a hierarchy, with each successive layer processing increasingly complex features. The weights determine the strength of the connections between nodes and are adjusted during training to optimize the network's performance.

To use an analogy, think of a neural network as a team of detectives trying to solve a mystery. Each detective (node) receives clues (inputs) and uses their own expertise (activation function) to make deductions (outputs). As they work together and share information (layers), they build a more complete picture of the case. The weight given to each detective's opinion (connection strength) is influenced by their past successes and failures (training data) and is updated as new evidence comes to light.

To understand the architecture of an artificial neural network, we have to understand what a neural network consists of. A neural network is made up of a large number of artificial neurons, termed units, arranged in a sequence of layers. Let us look at the types of layers in an artificial neural network.

- Input Layer: As the name suggests, it accepts inputs in several different formats provided by the programmer.

- Hidden Layer: The hidden layer lies between the input and output layers. It performs the calculations that find hidden features and patterns.

- Output Layer: The input goes through a series of transformations using the hidden layer, which finally results in output that is conveyed using this layer.
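
The flow of data through the three kinds of layer can be sketched as follows. This is an illustrative forward pass only, assuming sigmoid activations and a hypothetical network with 2 inputs, 3 hidden nodes, and 1 output; the weights are made-up values rather than trained ones.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def dense_layer(inputs, weights, biases):
    """Compute one layer; each row of `weights` feeds one node of the layer."""
    return [
        sigmoid(sum(x * w for x, w in zip(inputs, row)) + b)
        for row, b in zip(weights, biases)
    ]


def forward(inputs, layers):
    """Pass the inputs through every layer in order and return the final output."""
    activations = inputs
    for weights, biases in layers:
        activations = dense_layer(activations, weights, biases)
    return activations


# Hypothetical 2-3-1 network: (weights, biases) for the hidden and output layers.
hidden_layer = ([[0.5, -0.5], [0.3, 0.8], [-0.6, 0.1]], [0.0, 0.1, -0.1])
output_layer = ([[1.0, -1.0, 0.5]], [0.0])
network = [hidden_layer, output_layer]

prediction = forward([1.0, 2.0], network)
print(prediction)
```

The input layer here is simply the list passed to `forward`; the hidden layer transforms it into three intermediate values, and the output layer reduces those to a single prediction.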

IV. TRAINING ARTIFICIAL NEURAL NETWORKS

Training an artificial neural network involves adjusting the weights between nodes to minimize the difference between the predicted output and the actual output. This is done through a process called backpropagation, in which the error is propagated backwards through the network to determine how each weight should change. Gradient descent then searches for weights that minimize the error by iteratively adjusting them in the direction of steepest descent. Training can be time-consuming and computationally intensive, especially for large datasets and complex architectures. However, advances in hardware and software have greatly reduced training times, making it possible to train deep neural networks with millions of parameters.

We can train a neural network by feeding it teaching patterns and letting it change its weights according to some learning rule. The learning situations can be categorized as follows.

- Supervised Learning: The network is trained by providing it with input patterns and the matching output patterns. These input-output pairs can be provided by an external teacher or by the system that contains the neural network.

- Unsupervised Learning: An output unit is trained to respond to clusters of patterns within the input. Unsupervised learning uses a machine learning algorithm to analyse and cluster unlabelled datasets.

- Reinforcement Learning: This type of learning may be considered an intermediate form of the above two types. It trains the model to find an optimal solution to a problem by taking a sequence of decisions by itself.
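
To make the unsupervised case concrete, the sketch below uses simple competitive (winner-take-all) learning, in which each output unit gradually comes to respond to one cluster of inputs. This particular rule is an assumption chosen for illustration, not a method named in the paper, and the data points, starting weights, and learning rate are hypothetical.

```python
def nearest_unit(point, prototypes):
    """Index of the output unit whose weight vector is closest to the point."""
    distances = [
        sum((p - w) ** 2 for p, w in zip(point, proto))
        for proto in prototypes
    ]
    return distances.index(min(distances))


def competitive_learning(data, prototypes, learning_rate=0.1, epochs=50):
    """Move only the winning unit's weights a small step towards each input."""
    prototypes = [list(p) for p in prototypes]
    for _ in range(epochs):
        for point in data:
            winner = nearest_unit(point, prototypes)
            prototypes[winner] = [
                w + learning_rate * (p - w)
                for p, w in zip(point, prototypes[winner])
            ]
    return prototypes


# Hypothetical unlabelled data forming two clusters, near (0, 0) and (5, 5).
data = [(0.0, 0.1), (0.2, 0.0), (0.1, 0.2), (5.0, 5.1), (5.2, 4.9), (4.9, 5.0)]
initial = [(1.0, 1.0), (4.0, 4.0)]

trained = competitive_learning(data, initial)
print(trained)
```

No target outputs are supplied at any point; the units organize themselves around the structure in the inputs, which is what distinguishes this from supervised learning.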

The backpropagation algorithm is a commonly used method for teaching artificial neural networks, in particular feed-forward ANNs. Its aim is to reduce the error between the predicted and actual outputs until the ANN learns the training data.
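
A minimal sketch of backpropagation with gradient descent is given below, assuming a feed-forward network with one hidden layer, sigmoid activations, and a squared-error loss. The XOR data set, the number of hidden nodes, the learning rate, and the epoch count are all assumptions made for this example rather than values taken from the paper.

```python
import math
import random


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def forward(x, w_hidden, b_hidden, w_out, b_out):
    """Return the hidden activations and the single output value."""
    hidden = [sigmoid(sum(xi * w for xi, w in zip(x, row)) + b)
              for row, b in zip(w_hidden, b_hidden)]
    output = sigmoid(sum(h * w for h, w in zip(hidden, w_out)) + b_out)
    return hidden, output


def mean_squared_error(data, params):
    return sum((forward(x, *params)[1] - t) ** 2 for x, t in data) / len(data)


def train(data, n_hidden=3, learning_rate=0.5, epochs=10000, seed=0):
    rng = random.Random(seed)
    n_inputs = len(data[0][0])
    w_hidden = [[rng.uniform(-1, 1) for _ in range(n_inputs)]
                for _ in range(n_hidden)]
    b_hidden = [0.0] * n_hidden
    w_out = [rng.uniform(-1, 1) for _ in range(n_hidden)]
    b_out = 0.0
    initial_loss = mean_squared_error(data, (w_hidden, b_hidden, w_out, b_out))

    for _ in range(epochs):
        for x, target in data:
            hidden, output = forward(x, w_hidden, b_hidden, w_out, b_out)
            # Error signal at the output node (squared error with a sigmoid).
            delta_out = (output - target) * output * (1.0 - output)
            # Propagate the error backwards to each hidden node.
            delta_hidden = [delta_out * w_out[j] * hidden[j] * (1.0 - hidden[j])
                            for j in range(n_hidden)]
            # Gradient descent: step each weight against its gradient.
            for j in range(n_hidden):
                w_out[j] -= learning_rate * delta_out * hidden[j]
                for i in range(n_inputs):
                    w_hidden[j][i] -= learning_rate * delta_hidden[j] * x[i]
                b_hidden[j] -= learning_rate * delta_hidden[j]
            b_out -= learning_rate * delta_out

    params = (w_hidden, b_hidden, w_out, b_out)
    return params, initial_loss, mean_squared_error(data, params)


# XOR: a small problem that is not linearly separable.
xor_data = [([0.0, 0.0], 0.0), ([0.0, 1.0], 1.0),
            ([1.0, 0.0], 1.0), ([1.0, 1.0], 0.0)]

params, initial_loss, final_loss = train(xor_data)
print("loss before training:", initial_loss)
print("loss after training: ", final_loss)
```

The two `delta` lines are the backward pass: the output error is computed first and then distributed to the hidden nodes in proportion to the weights that connect them to the output.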

V. TYPES OF ARTIFICIAL NEURAL NETWORKS

- Feedforward neural networks are the simplest type of artificial neural network. Information flows in only one direction, from input to output, without any feedback loops. Feedforward neural networks, or multilayer perceptrons (MLPs), are the type of network this paper has primarily focused on. They consist of an input layer, one or more hidden layers, and an output layer. Although these networks are commonly referred to as MLPs, they are actually made up of sigmoid neurons rather than perceptrons, because most real-world problems are nonlinear. They are widely used in pattern recognition and are the foundation for computer vision, natural language processing, and other neural networks.

- Recurrent neural networks (RNNs) are identified by their feedback loops, which allow them to process sequences of inputs. This makes them well suited to tasks such as speech recognition and natural language processing. They are primarily used with time-series data to make predictions about future outcomes, such as stock market predictions or sales forecasting.

- Convolutional neural networks (CNNs) are designed to process data with a grid-like topology, such as images or videos. They are similar to feedforward networks but use convolutional layers to extract features from the input data, and are commonly used for image classification, object detection, pattern recognition, and other computer vision tasks. These networks harness principles from linear algebra, particularly matrix multiplication, to identify patterns within an image.

- Radial basis function (RBF) neural networks have two layers and work with the distance of an input point from a centre. In the first layer, the input features are combined with the radial basis function; the output of this layer is then used by the next layer to compute the network's output. One application of RBF networks is in power restoration systems, where power must be restored as reliably and quickly as possible after a blackout.
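
The core operation behind each of the last three network types can be sketched in a few lines. These are simplified, illustrative building blocks rather than complete networks: the single-unit recurrent step with a `tanh` activation, the unpadded stride-1 convolution, and the Gaussian form of the radial basis function are all assumptions made for this example.

```python
import math


def rnn_step(x, h_prev, w_x, w_h, b):
    """One recurrent update: the new state depends on the current input
    and, through the feedback connection w_h, on the previous state."""
    return math.tanh(w_x * x + w_h * h_prev + b)


def conv2d_valid(image, kernel):
    """Slide a small kernel over a 2-D grid (no padding, stride 1).
    Like most CNN libraries, this does not flip the kernel."""
    k_rows, k_cols = len(kernel), len(kernel[0])
    out_rows = len(image) - k_rows + 1
    out_cols = len(image[0]) - k_cols + 1
    return [
        [
            sum(image[r + i][c + j] * kernel[i][j]
                for i in range(k_rows) for j in range(k_cols))
            for c in range(out_cols)
        ]
        for r in range(out_rows)
    ]


def rbf_unit(point, centre, width=1.0):
    """Gaussian radial basis function: 1 at the centre, decaying with distance."""
    squared_distance = sum((p - c) ** 2 for p, c in zip(point, centre))
    return math.exp(-squared_distance / (2.0 * width ** 2))


# Recurrent: feed a short sequence through the same unit, carrying the state.
state = 0.0
for value in [1.0, 0.5, -1.0]:
    state = rnn_step(value, state, w_x=0.5, w_h=0.8, b=0.0)

# Convolutional: a horizontal difference kernel responds at the vertical edge.
image = [[0, 0, 1, 1],
         [0, 0, 1, 1],
         [0, 0, 1, 1]]
edges = conv2d_valid(image, [[-1, 1]])

# Radial basis: the response shrinks as the point moves away from the centre.
near = rbf_unit((1.0, 2.0), (1.0, 2.0))
far = rbf_unit((0.0, 0.0), (3.0, 4.0), width=5.0)

print(state, edges, near, far)
```

In `edges`, the only non-zero column is the one where the image changes from 0 to 1, which is the kind of local feature a convolutional layer is meant to pick out.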

VI. APPLICATIONS OF ARTIFICIAL NEURAL NETWORKS

Artificial neural networks have been successfully applied in a variety of fields, including image recognition, natural language processing, and finance. In image recognition, neural networks can be trained to identify objects within images with high accuracy; for example, researchers at Google have trained neural networks that distinguish images of cats and dogs with accuracy approaching that of humans. In natural language processing, neural networks are used for tasks such as language translation and sentiment analysis. For instance, Facebook uses neural networks to automatically translate posts and comments between different languages. In finance, neural networks are used for tasks such as fraud detection and stock price prediction; banks such as JPMorgan Chase are reported to use them to detect credit card fraud. The potential applications of artificial neural networks are vast and varied. As technology continues to advance, we can expect to see more applications of neural networks in areas such as healthcare, transportation, and entertainment.

- Social media: Artificial Neural Networks are used heavily in social media. For example, the ‘People you may know’ feature on Facebook suggests people you might know in real life so that you can send them friend requests. It does this by using Artificial Neural Networks that analyse your profile, your interests, your current friends, their friends, and various other factors to work out who you might know. Another common application of machine learning in social media is facial recognition. This is done by finding around 100 reference points on the person’s face and then matching them against those already available in the database using convolutional neural networks.

- Marketing and Sales: When you log on to e-commerce sites like Amazon and Flipkart, they recommend products for you to buy based on your previous browsing history. Similarly, if you love pasta, then Zomato, Swiggy, and similar services will show you restaurant recommendations based on your tastes and previous order history. This is true across new-age marketing segments such as book sites, movie services, and hospitality sites, and it is achieved through personalized marketing. This uses Artificial Neural Networks to identify the customer's likes, dislikes, previous shopping history, and so on, and then tailors the marketing campaigns accordingly.

- Healthcare: Artificial Neural Networks are used in oncology to train algorithms that can identify cancerous tissue at the microscopic level with accuracy comparable to that of trained physicians. Various rare diseases manifest in physical characteristics and can be identified in their early stages by applying facial analysis to patient photos. The full-scale adoption of Artificial Neural Networks in healthcare can therefore enhance the diagnostic abilities of medical experts and ultimately improve the quality of medical care.

- Personal Assistants: Siri, Alexa, and Cortana are familiar examples of personal assistants available on phones and other devices. They are an application of speech recognition that uses Natural Language Processing to interact with users and formulate responses. Natural Language Processing relies on artificial neural networks to handle many of the assistants' tasks, such as managing language syntax, semantics, correct speech, and the ongoing conversation.

Conclusion

In conclusion, artificial neural networks are a powerful tool for solving complex problems and making predictions based on large amounts of data. They are modelled after the human brain and consist of layers of interconnected nodes that can learn and adapt to new information. There are several types of artificial neural networks, including feedforward, recurrent, and convolutional, each with their own strengths and applications. While there are many advantages to using artificial neural networks, such as their ability to learn from data and make accurate predictions, there are also limitations to their use, such as the need for large amounts of data and the risk of overfitting. However, as technology continues to advance, we can expect to see even more powerful and sophisticated artificial neural networks in the future.

Copyright

Copyright © 2024 Shravani Meshram, Prathmesh Tambakhe, Prof. N. G. Gupta. This is an open access article distributed under the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

Paper Id : IJRASET59660

Publish Date : 2024-03-31

ISSN : 2321-9653

Publisher Name : IJRASET
