Ijraset Journal For Research in Applied Science and Engineering Technology


Virtual Canvas using OpenCV

Authors: Santosh Bachkar, Shivam Bangar, Kumar Dalvi, Piyush Anantwar

DOI Link: https://doi.org/10.22214/ijraset.2024.60589


Abstract

The main purpose of this project is to identify human gestures and to perform a specific task for each recognised gesture. The project uses the OpenCV library and the Python programming language, together with colour detection and image segmentation techniques. OpenCV is an open-source computer vision library for advanced image processing. Colour detection can pick out any colour that falls within a given range of the HSV colour space. Image segmentation is the process of assigning a label to every pixel in an image so that pixels with the same label share certain characteristics. The whole project is developed in Python. Once the input gestures have been processed, the result is shown on screen according to the user's input. The main objective of this paper is to present the "Virtual Canvas" and to give readers useful information about the project. We have tried to present this material in a precise and compact form, in language that is as simple as possible, and we are confident that it will give readers better insight into the scope and dimensions of the project. The perseverance and deep involvement of our group members made this initially vague task interesting and manageable. We designed this software project completely from scratch and did not incorporate any ready-made material from the Internet or other sources to make the project appear more attractive or meaningful.


I. INTRODUCTION

To provide a game-based platform, in the form of a mobile application, that trains farmers, especially beginners, to achieve better yield and better access to the marketplace. Farmers face many difficulties during and after farming, typically because of the weather, land fertility, the marketplace, crop disease and other factors. Our application will therefore offer weather forecasting to help with water management, crop monitoring to improve yield, a marketplace to improve farmers' profits, and more.


In the digital era, the traditional art of writing is being replaced by digital art. Digital art refers to forms of artistic expression and transmission that take a digital form, and its distinctive characteristic is its reliance on modern science and technology. Traditional art refers to the art forms that existed before digital art. Analysed from the recipient's perspective, it can be divided into visual art, audio art, audio-visual art and audio-visual imaginary art, which together include literature, painting, sculpture, architecture, music, dance, drama and other works of art. Digital art and traditional art are interrelated and interdependent. Social development does not follow any individual's will; the needs of human life are its main driving force, and the same holds for art.


II. RATIONALE

A. Problem Statement

Contactless Canvas is a hands-free digital drawing canvas on which the user can draw or perform other tasks without physically touching the device. We use the computer vision techniques of OpenCV, with Python as the programming language, to build this project. The contactless canvas lets the user draw anything on it simply by moving a marker in front of the camera. Here, the tip of the finger serves as the marker, which is captured by the camera.
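The abstract names colour detection within a given HSV range, followed by segmentation, as the way to capture the marker. The following is a minimal pure-Python sketch of that idea, not the authors' code: it assumes an image stored as nested lists of 8-bit BGR tuples, uses OpenCV's 8-bit HSV scale (hue 0 to 179, saturation and value 0 to 255), and picks a hypothetical blue-marker range. All helper names are illustrative. In an OpenCV program, `cv2.cvtColor(frame, cv2.COLOR_BGR2HSV)` followed by `cv2.inRange(hsv, lower, upper)` performs the same labelling on NumPy arrays.

```python
import colorsys

# Hypothetical HSV range for a blue marker on OpenCV's 8-bit scale (assumption; tune per lighting)
BLUE_LOWER = (100, 150, 50)
BLUE_UPPER = (140, 255, 255)


def bgr_to_hsv_cv(pixel):
    """Convert one 8-bit BGR pixel to HSV on OpenCV's 8-bit scale (H 0-179, S and V 0-255)."""
    b, g, r = pixel
    h, s, v = colorsys.rgb_to_hsv(r / 255, g / 255, b / 255)  # colorsys works in [0, 1]
    return (round(h * 180) % 180, round(s * 255), round(v * 255))


def in_range_mask(image, lower, upper):
    """Label each pixel 1 if every HSV channel lies within [lower, upper], else 0 (cv2.inRange writes 255)."""
    mask = []
    for row in image:
        mask_row = []
        for pixel in row:
            hsv = bgr_to_hsv_cv(pixel)
            inside = all(lo <= c <= hi for c, lo, hi in zip(hsv, lower, upper))
            mask_row.append(1 if inside else 0)
        mask.append(mask_row)
    return mask


def marker_centroid(mask):
    """Return the (x, y) centroid of the labelled pixels, or None when the marker is not visible."""
    points = [(x, y) for y, row in enumerate(mask) for x, value in enumerate(row) if value]
    if not points:
        return None
    n = len(points)
    return (sum(x for x, _ in points) / n, sum(y for _, y in points) / n)
```

Computing one centroid per frame gives a single marker position for the drawing logic, and returning `None` when no pixel matches lets the caller lift the pen.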

B. Objectives

Provide a useful, informative, mobile-based solution for navigation inside a campus that contains all the necessary details, ensures easy and accurate navigation and identification of the various buildings and departments, and helps students and visitors reach their desired location without any trouble.

C. Goal

The preliminary analysis aims to uncover insurmountable obstacles that would render a feasibility study useless. If no major roadblocks are uncovered during this pre-screen, a more intensive feasibility study will be conducted.

III. TECHNICAL SPECIFICATION

OpenCV:

OpenCV-Python is a library of Python bindings designed to solve computer vision problems. Python is a general-purpose programming language created by Guido van Rossum that became popular very quickly, mainly because of its simplicity and code readability. It lets programmers express ideas in fewer lines of code without sacrificing readability. Compared with languages such as C/C++, Python is slower. However, Python can easily be extended with C/C++, which allows computationally intensive code to be written in C/C++ and wrapped as Python modules. This gives two advantages: first, the code runs as fast as the original C/C++ code (since it is the actual C++ code running in the background), and second, it is easier to write code in Python than in C/C++. OpenCV-Python is a Python wrapper for the original OpenCV C++ implementation.

OpenCV-Python makes use of NumPy, a highly optimized library for numerical operations with a MATLAB-style syntax. All OpenCV array structures are converted to and from NumPy arrays, which also makes it easier to integrate with other libraries that use NumPy, such as SciPy and Matplotlib.
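Because OpenCV-Python images are plain NumPy arrays, ordinary array operations apply to them directly. The short sketch below, using an arbitrary 640x480 frame size as an assumption, shows the layout OpenCV uses for a colour frame (rows, columns, channels in BGR order, `uint8`), how slicing paints a region, and how reversing the channel axis gives the same BGR-to-RGB reordering as `cv2.COLOR_BGR2RGB`.

```python
import numpy as np

# A 640x480 colour frame laid out as OpenCV returns it: rows x columns x 3 channels, uint8, BGR order
frame = np.zeros((480, 640, 3), dtype=np.uint8)

# Slicing selects a region; assigning a BGR triple paints it pure blue (B=255, G=0, R=0)
frame[100:200, 300:400] = (255, 0, 0)

# Reversing the channel axis reorders BGR to RGB, as cv2.cvtColor(frame, cv2.COLOR_BGR2RGB) does
rgb = frame[..., ::-1]

# Whole-array operations replace explicit pixel loops
blue_pixels = int(np.count_nonzero(frame[..., 0] == 255))
```

The reversed array is a view with a negative stride; if it has to be passed back to an OpenCV function, wrapping it in `np.ascontiguousarray` first is a common precaution.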

Intended audience and reading suggestions

This document is primarily intended for all developers associated with this project. Users, testers and other parties with an interest in the project can also use it to gain a better understanding of the drone. The document is organized into several sections that can be read and referenced as needed. Readers who wish to explore the features of the effective sharing scheme of AGROW-BAS-X in more detail should continue to Part 3.2 (System Features), which expands on the information laid out in the main overview. Part 3.3 (External Interface Requirements) offers further technical details, including information on the user interface and on the hardware and software platforms on which the application will run. Readers interested in the non-technical aspects of the project should read Part 3.4, which covers performance, safety, security and various other attributes that will be important to users. Readers who have not found the information they are looking for should check Part 3.5 (Other Requirements), which includes any additional information that does not fit logically into the other sections.

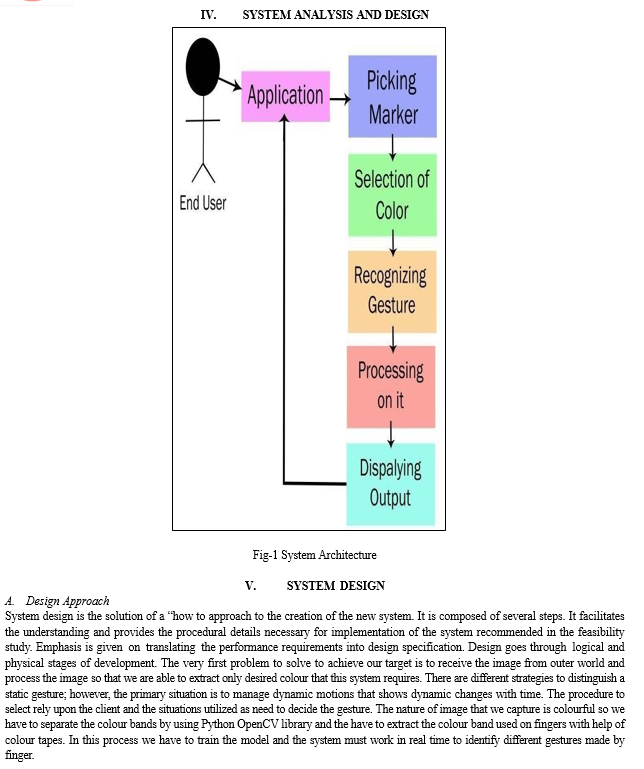

VI. METHODOLOGY

The existing methodology needs a lot of data to be stored and sometimes gives wrong predictions because of differences in background or skin colour. Some existing papers processed images using threshold values and a database of different images. To overcome these limitations and drawbacks, we propose a system based on MediaPipe. In the proposed system, hand tracking is done with MediaPipe, which first detects the hand landmarks and then obtains the finger positions from them. The figure below describes the flow of the system: the complete workflow executed to draw images efficiently just by waving the hands.
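MediaPipe Hands reports 21 landmarks per detected hand, each with `x` and `y` normalised to [0, 1] by the image width and height, so they must be scaled to pixels before they can be compared with on-screen buttons. The paper's hand tracker exposes a `getPositions()` function whose code is not shown; the following is a hypothetical re-implementation of the coordinate conversion it needs, using a `namedtuple` stand-in for MediaPipe's landmark objects so that it runs without a camera.

```python
from collections import namedtuple

# Stand-in for a MediaPipe landmark: x and y are normalised to [0, 1] by image width and height
Landmark = namedtuple("Landmark", ["x", "y"])


def get_positions(landmarks, width, height):
    """Convert normalised landmarks to (id, x_pixel, y_pixel) tuples, where id is the landmark index."""
    return [(idx, int(lm.x * width), int(lm.y * height)) for idx, lm in enumerate(landmarks)]
```

With a real tracker, the landmarks would come from `results.multi_hand_landmarks[0].landmark` after `hands.process(imgRGB)`, and `width` and `height` from `img.shape[1]` and `img.shape[0]`.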

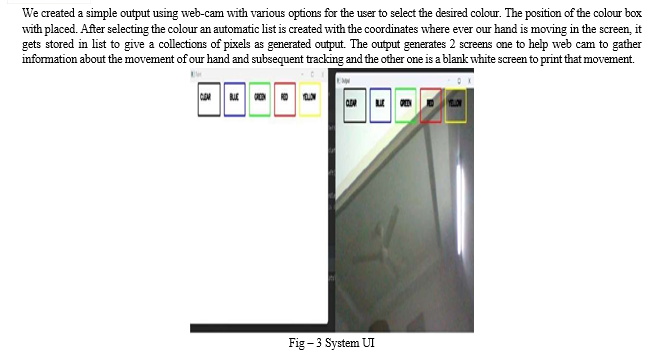

A. The Camera Used in the AIR CANVAS:

The frames recorded by a laptop or PC webcam form the foundation of the proposed AIR CANVAS. As seen in Figure 4, the webcam begins recording video once a video capture object has been created with OpenCV, the Python computer vision package. The virtual AI system receives frames from the webcam and processes them.
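OpenCV's `cv2.VideoCapture(0)` opens the default webcam, and its `read()` method returns a pair `(retval, image)`. A minimal sketch of the resulting frame loop, written as a generator so that it can be exercised with any object offering the same `read()` contract; the names `frames` and `FakeCapture` are illustrative, and `FakeCapture` is only a test double standing in for the camera.

```python
def frames(capture):
    """Yield frames from an object whose read() returns (ok, frame), as cv2.VideoCapture.read() does."""
    while True:
        ok, frame = capture.read()
        if not ok:  # camera disconnected or stream ended
            break
        yield frame


class FakeCapture:
    """Test double standing in for a webcam: returns the given frames, then reports failure."""

    def __init__(self, items):
        self.items = list(items)

    def read(self):
        return (True, self.items.pop(0)) if self.items else (False, None)
```

In the application one would create `cap = cv2.VideoCapture(0)`, iterate `for frame in frames(cap):` to pass each frame to the hand tracker, and call `cap.release()` when the loop ends.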

B. Capturing the Video and Processing:

The AIR CANVAS system uses the webcam and captures every frame until the application ends. As illustrated in the accompanying code, each video frame is converted from BGR to RGB so that the hands can be detected frame by frame.

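```python
import cv2

def findHands(self, img, draw=True):
    # Method of the hand tracker class; self.hands is a MediaPipe Hands object.
    # MediaPipe expects RGB input, but OpenCV delivers frames in BGR order.
    imgRGB = cv2.cvtColor(img, cv2.COLOR_BGR2RGB)
    self.results = self.hands.process(imgRGB)
```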

C. Detecting Which Finger Is Up and Performing the Particular Function:

Using the tip ID of each finger located by MediaPipe and the corresponding coordinates of the fingers that are up, as shown in Figure 6, we determine which fingers are currently up. The specific mouse function is then carried out according to that determination.
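A common way to decide which fingers are up from MediaPipe landmarks is to compare each fingertip with the joint below it. The sketch below is a hypothetical stand-in for the tracker's `getUpFingers()`: it uses MediaPipe's landmark numbering (tips 8, 12, 16, 20; PIP joints 6, 10, 14, 18) and the fact that image y grows downward, and it leaves out the thumb, whose test depends on handedness and on whether the frame is mirrored. The mapping from finger states to modes in `choose_mode` is an assumption for illustration only.

```python
# (tip, PIP joint) landmark indices in MediaPipe Hands numbering; thumb omitted
FINGER_JOINTS = {
    "index": (8, 6),
    "middle": (12, 10),
    "ring": (16, 14),
    "pinky": (20, 18),
}


def get_up_fingers(positions):
    """Return {finger: bool} from (id, x, y) pixel positions indexed by landmark id.

    Image y grows downward, so a raised finger has its tip at a smaller y than its PIP joint.
    """
    return {name: positions[tip][2] < positions[pip][2]
            for name, (tip, pip) in FINGER_JOINTS.items()}


def choose_mode(up):
    """Hypothetical mapping: index + middle up moves without drawing, index alone draws."""
    if up["index"] and up["middle"]:
        return "move"
    if up["index"]:
        return "draw"
    return "idle"
```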

The methodologies or the stages of the proposed system are discussed below:

- Run or Execute the Code: Once all the libraries are installed, executing the code turns the camera on automatically and opens the OpenCV frame with buttons for various shapes, colors, sizes, save, clear, erase, etc.

- Webcam Starts: The webcam starts recording, splits the video into frames and sends each frame to the hand tracker class, which tracks or detects the finger positions. Figure 2 displays the buttons and the frame that records the video.

- Detects Hand Landmarks: Each received frame is passed through MediaPipe hand landmark detection: the finger positions are found with getPositions() and the open fingers with getUpFingers(), two functions of the hand tracker class that are part of the hand tracking module.

- Perform Actions According to Button: Different buttons are chosen by hovering the index finger over them. In this way different colors can be chosen, and one of the options clears the screen.
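The hover-to-select behaviour and the drawing itself can be sketched without a camera. In the sketch below, which is an assumption rather than the authors' code, each button is a hypothetical labelled rectangle, hovering the index fingertip over a colour button selects that colour, the `clear` button empties the canvas, and while the pen is down each new fingertip position is joined to the previous one as a line segment. Shapes, sizes, save and erase are left out.

```python
from dataclasses import dataclass


@dataclass
class Button:
    """Hypothetical on-screen button: a label plus a rectangle in pixel coordinates."""
    label: str
    x1: int
    y1: int
    x2: int
    y2: int

    def contains(self, x, y):
        return self.x1 <= x <= self.x2 and self.y1 <= y <= self.y2


class Canvas:
    """Stores strokes as line segments joining consecutive index-fingertip positions."""

    def __init__(self, colour="blue"):
        self.segments = []  # list of (start, end, colour)
        self.colour = colour
        self.prev = None    # last fingertip position while drawing

    def update(self, tip, drawing, buttons):
        """Process one frame's fingertip position; `drawing` says whether the pen is down."""
        hovered = next((b.label for b in buttons if b.contains(*tip)), None)
        if hovered is not None:
            # Hovering over a button selects it instead of drawing
            if hovered == "clear":
                self.segments.clear()
            else:
                self.colour = hovered
            self.prev = None
        elif drawing:
            if self.prev is not None:
                self.segments.append((self.prev, tip, self.colour))
            self.prev = tip
        else:
            self.prev = None  # pen lifted: the next stroke starts fresh
```

On screen, each stored segment would be rendered with `cv2.line(img, start, end, colour, thickness)`, after mapping the colour label to a BGR tuple.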

VII. MODULE IMPLEMENTATION

A. SLAM Navigation Module

In a real scene, the theoretical basis for estimating the motion state of the mobile device is the motion equation, which predicts the current state from the state at the previous moment and the motion at the current moment. The motion input, us, predicts the current motion trajectory, and the sensor's measurement equation is then used to correct this prediction. In monocular visual SLAM there is no motion-state input, so the information provided by the correction equation is limited. After IMU data are introduced, the IMU serves as the motion input us. The experimental results show that this method predicts the motion state more accurately. The essence of the algorithm is joint prediction using motion prediction and a measurement equation. During navigation, real-time navigation information from the camera following the target (anchor) is fed back to the user. The script used to control tracking parameters in the Google ARCore development package (HelloARController.cs) is modified; it includes the text components used to display messages publicly and the camera that follows the target. These public fields are filled by dragging and dropping the corresponding objects from the scene hierarchy, and the QuitOnConnectionErrors method is also modified. Meanwhile, the CameraPoseText component is used to display the current message on the screen. The campus navigation system needs to match the distance the camera moves in the real world with the distance it moves in the 3D map. Because tracking is restricted to the plane, any translation along the y-axis is removed, so that the tracking point never falls below the plane while users hold the mobile phone camera during campus navigation. At the same time, the system tries to keep the tracking point stable while moving. The tracking type was set to "Position Only" in the ARCore Device, and the position and rotation information was provided by Frame.Pose, which was used to apply position information to the translation of the sphere from frame to frame in the FollowTarget script. To keep the rotation of the anchor point in the 3D map consistent with the rotation of the mobile phone, the rotation was applied to a third-person camera that always stays behind the sphere. The player settings were set according to Google's recommendations as follows:
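The predict-then-correct scheme described above, with the IMU supplying the motion input, is what a Kalman filter does. The following one-dimensional linear Kalman filter is a simplified illustration of that idea, not ARCore's actual tracking algorithm: the state is a single position, `u` is an assumed IMU-derived displacement per step, `z` a camera position measurement, and `q` and `r` assumed process and measurement noise variances.

```python
def predict(x, p, u, q):
    """Motion equation: move the previous estimate x by the motion input u; variance p grows by q."""
    return x + u, p + q


def correct(x_pred, p_pred, z, r):
    """Measurement equation: blend the prediction with a measurement z whose noise variance is r."""
    k = p_pred / (p_pred + r)  # Kalman gain: weight given to the measurement
    return x_pred + k * (z - x_pred), (1 - k) * p_pred


def track(x0, p0, inputs, measurements, q, r):
    """Run predict-then-correct for each (u, z) pair and return the corrected positions."""
    x, p = x0, p0
    history = []
    for u, z in zip(inputs, measurements):
        x, p = predict(x, p, u, q)
        x, p = correct(x, p, z, r)
        history.append(x)
    return history
```

A larger `r` makes the filter trust the IMU-driven prediction more, while a larger `q` shifts trust toward the camera measurements.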

- Other Settings > Multithreaded Rendering: Off;

- Other Settings > Minimum API Level: Android 7.0 or higher;

- Other Settings > Target API Level: Android 7.0 or 7.1;

- XR Settings > AR Core (Tango) Supported:

Conclusion

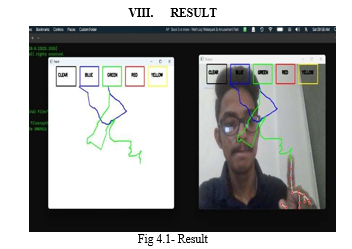

The system has the potential to challenge traditional writing methods. It removes the need to carry a mobile phone in hand to jot down notes, providing a simple on-the-go way to do the same. It can also help specially abled people communicate more easily, and senior citizens or people who find keyboards difficult to use will be able to use the system effortlessly. In future, the system's functionality could be extended to control IoT devices. Drawing in the air can also be made possible. The system could be excellent software for smart wearables, through which people could interact better with the digital world, and augmented reality can make the text come alive. The system has some limitations that can be addressed in future work. First, using a handwriting recognizer instead of a character recognizer would let the user write word by word, making writing faster. Second, hand gestures with a pause can be used to control the real-time system.


Copyright

Copyright © 2024 Santosh Bachkar, Shivam Bangar, Kumar Dalvi, Piyush Anantwar. This is an open access article distributed under the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.


Paper Id : IJRASET60589

Publish Date : 2024-04-19

ISSN : 2321-9653

Publisher Name : IJRASET
