Ijraset Journal For Research in Applied Science and Engineering Technology

Virtual Mouse Using Artificial Intelligence

Authors: Jasdeep Singh, Sahil Goyal, Bhavik Kwatra, Sandeep Kaur

DOI Link: https://doi.org/10.22214/ijraset.2023.56223

Abstract

The "AI Virtual Mouse" project represents a pioneering exploration at the intersection of computer vision, machine learning, and digital artistry. This endeavor seeks to revolutionize the conventional modes of digital art creation by introducing an immersive, hands-free painting experience through the interpretation of hand gestures. Motivated by a vision to make digital art more intuitive and interactive, the project employs sophisticated hand tracking facilitated by the MediaPipe library and robust image processing via the OpenCV framework. By discerning the nuanced movements of the hand in real-time, the system translates these gestures into dynamic strokes on a virtual canvas, eliminating the physical barriers that often accompany traditional input devices. This abstract encapsulates the project's core objectives, highlighting its architectural foundations, implementation strategies, and future trajectories. The convergence of technology and artistic expression in the AI Virtual Mouse project presents a paradigm shift in how individuals engage with digital canvases. It not only democratizes the creative process by mirroring natural artistic gestures but also lays the groundwork for a more personalized and collaborative approach to digital art creation. As technological landscapes continue to evolve, this project stands as a testament to the potential of artificial intelligence in fostering innovative and accessible avenues for artistic exploration. The paper delves into the details of the AI Virtual Mouse, offering insights into its current capabilities and paving the way for an exciting future where the realms of technology and creativity seamlessly intertwine.

Introduction

I. INTRODUCTION

In the dynamic intersection of technology and artistic expression, the "AI Virtual Mouse" project emerges as a groundbreaking initiative, reshaping the landscape of digital art creation. At its core, this endeavor seeks to transcend the limitations of traditional input devices, offering users an immersive and hands-free painting experience through the integration of computer vision and machine learning technologies.

The motivation behind the AI Virtual Mouse project arises from the recognition that while digital art has become an integral part of contemporary creative processes, the tools available often impose a level of detachment between the artist and their creation. Standard input devices like mice or styluses, while functional, lack the intuitive fluidity inherent in traditional artistic mediums. This project aspires to bridge this gap by introducing a novel paradigm where users can engage with digital canvases in a manner that mirrors the natural and expressive movements of physical artistry.

The project hinges on the sophisticated integration of two key technologies. First, the MediaPipe library provides advanced hand tracking capabilities, allowing the system to discern and interpret intricate hand movements in real-time. Second, the OpenCV framework facilitates robust image processing, ensuring the accurate translation of these hand gestures into dynamic strokes on a virtual canvas. The result is a virtual mouse that becomes an extension of the artist's hand, enabling a more organic and responsive digital painting experience.

As we delve into the architectural intricacies of the AI Virtual Mouse project, it is crucial to recognize its potential impact on the democratization of digital art creation. By eliminating physical barriers and enhancing user interaction, this project not only makes digital art more accessible but also enriches the creative process. Artists can express themselves more authentically, breaking away from the constraints of traditional tools.

This paper comprehensively explores the nuances of the AI Virtual Mouse, delving into its architecture, implementation strategies, and future trajectories. Beyond a mere technological innovation, this project symbolizes a transformative shift in how technology and art converge.

The coming sections will unravel the intricate layers of this visionary project, showcasing its current capabilities and laying the foundation for future developments in the ever-evolving realms of digital artistry and artificial intelligence.

II. LITERATURE REVIEW

The convergence of artificial intelligence (AI) and human-computer interaction (HCI) has given rise to transformative possibilities, particularly in the realm of gesture-based interfaces.

This literature review embarks on a comprehensive exploration of the intricate interplay between AI and HCI, focusing on the implementation of a virtual mouse controlled by gestures within drawing applications. Gesture-based interfaces have emerged as a captivating modality in HCI, offering a bridge between human expression and digital systems. Wachs et al. (2011) assert that gestures provide an intuitive means of communication, enabling users to interact with computing systems in a more natural and expressive manner. This shift is particularly pertinent in scenarios where traditional input devices, such as keyboards and mice, may impose limitations.

The infusion of AI into gesture recognition systems represents a pivotal stride forward. Li et al. (2019) conduct a thorough review of deep learning techniques for hand gesture recognition. They showcase the efficacy of these techniques across various applications, ranging from virtual interfaces to augmented reality (AR). The utilization of AI not only enhances the accuracy of gesture interpretation but also opens avenues for recognizing complex and dynamic hand movements. Drawing applications, as a subset of gesture-based interfaces, offer a compelling avenue for creative expression. Lee et al. (2017) delve into the design and implementation of a gesture-based drawing application, emphasizing its potential to enhance user creativity and enjoyment. The fusion of gestures with drawing introduces a dynamic and interactive dimension to digital canvases, providing users with a more immersive and expressive medium. Virtual mice controlled by gestures represent a departure from traditional pointing devices. Khan et al. (2020) present a vision-based virtual mouse system driven by gesture recognition techniques. This innovation not only broadens accessibility but also addresses challenges faced by users with physical disabilities. The virtual mouse, guided by AI and responsive to intuitive gestures, becomes a more inclusive tool for interacting with computing systems.

Challenges persist in the realm of gesture-based systems. Li et al. (2021) shed light on issues related to gesture ambiguity, underscoring the necessity for robust algorithms capable of accurately interpreting a diverse range of gestures. The challenge lies not only in recognizing specific gestures but also in ensuring that the system can discern between different gestures with a high degree of accuracy. Additionally, ergonomic considerations and system robustness emerge as critical factors influencing the overall user experience. Striking a balance between a diverse range of gestures and ensuring a seamless user experience remains a key challenge in the development of gesture-based systems.

User-centered design principles are pivotal in creating effective gesture-based HCI systems. Oviatt (2018) emphasizes the importance of factors such as responsiveness, accuracy, and user satisfaction in determining the success of gesture-based applications. User experience analysis, therefore, becomes a critical component of the development process. Understanding how users perceive and interact with gesture-based systems is essential for refining the design and ensuring that these systems align with user expectations.

The integration of AI into gesture-based drawing applications represents a relatively nascent but rapidly evolving area of research. Chen et al. (2022) present a system that combines machine learning with computer vision to enhance the accuracy of hand gesture recognition in a drawing application. The study underscores the potential of AI to enrich the creative experience, paving the way for more intuitive and expressive digital interactions.

Looking ahead, future directions involve addressing identified challenges, exploring novel machine learning architectures, and expanding the scope of applications. The evolution of AI-driven virtual interfaces extends beyond drawing applications. Wang et al. (2023) propose integrating AI-driven virtual interfaces into augmented reality (AR) systems. This visionary approach envisions new possibilities for immersive creativity, where users can interact with digital content seamlessly in augmented environments. The future trajectory of research in AI-driven virtual mice through gesture-based drawing applications holds promise in several dimensions. Firstly, addressing the identified challenges, particularly in gesture recognition accuracy, will be paramount. Advancements in machine learning models and algorithms that can discern complex hand movements with high precision are crucial for the continued success of these systems.

Secondly, exploring novel machine learning architectures and techniques is essential for pushing the boundaries of what is currently achievable. As AI technologies continue to advance, the integration of more sophisticated models, potentially incorporating aspects of reinforcement learning, could contribute to more adaptive and context-aware virtual mice. Thirdly, expanding the scope of applications beyond traditional drawing environments presents exciting possibilities. The integration of AI-driven virtual mice into diverse domains, such as 3D modeling, virtual reality (VR), and collaborative environments, could redefine how individuals interact with digital content. The potential for AI-driven interfaces to become integral tools in various professional and creative fields is a promising avenue for exploration.

In conclusion, the reviewed literature collectively underscores the transformative potential of AI-driven virtual mice through gesture-based drawing applications.

The marriage of AI and gesture-based interfaces has already begun reshaping the landscape of human-computer interaction. From virtual mice that respond to dynamic hand movements to immersive drawing applications, the fusion of AI and gestures offers novel avenues for creative expression and accessibility in the digital realm.

The challenges posed by gesture ambiguity, ergonomic considerations, and system robustness are inherent aspects of this evolving field.

However, with ongoing advancements in AI technologies, machine learning, and computer vision, these challenges can be addressed, paving the way for more seamless and natural interactions between humans and digital systems.

As we look ahead, the future of AI-driven virtual interfaces extends beyond drawing applications. The integration of these interfaces into augmented reality systems opens up new dimensions for immersive creativity. The envisioned future involves users seamlessly interacting with digital content in augmented environments, where AI-driven virtual interfaces become indispensable tools in various professional and creative endeavors.

In essence, the synthesis of AI and gesture-based interfaces is not merely a technological advancement but a paradigm shift in how individuals interact with digital systems.

From enhancing accessibility to fostering creativity, the implications of this research extend far beyond the confines of the digital canvas, shaping the future of human-computer interaction in profound and innovative ways.

III. ALGORITHMS

Hand gesture recognition and hand tracking in this project rely on the MediaPipe framework and the OpenCV library, both used from the Python programming language.

Together, these technologies support efficient recognition of gestures and precise tracking of hand movements.

MediaPipe is an open-source framework from Google for building machine learning pipelines. It supports cross-platform development and processes real-time data for a wide range of audio and video applications.

Developers use MediaPipe to build and analyze pipelines expressed as graphs, and to design systems tailored to diverse applications. The framework is multi-modal and runs on both mobile and desktop platforms. At its core, MediaPipe consists of three main parts: tools for performance evaluation, a framework for inference from sensor data, and a collection of reusable components called calculators. A system built with MediaPipe is a pipeline: a graph of calculators connected by streams.

Developers are free to customize existing calculators or define new ones anywhere in the graph, which widens the range of applications that can be built.

Together, calculators and streams form a data flow graph in which each node is a calculator and packets of data travel along the streams between nodes.
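The data flow graph of calculators described above can be illustrated with a small toy model. This is only a sketch of the concept in plain Python, not MediaPipe's API: the `Graph` class, its `add_node` and `run` methods, and the stream names are all hypothetical, and the graph is assumed to be a simple chain whose nodes are added in execution order.

```python
from typing import Callable, Dict, List, Tuple

# In this toy model a "calculator" is just a function from one packet to another.
Calculator = Callable[[object], object]


class Graph:
    """Hypothetical pipeline: each node reads one named stream and writes another."""

    def __init__(self) -> None:
        self.nodes: List[Tuple[Calculator, str, str]] = []

    def add_node(self, calculator: Calculator, input_stream: str, output_stream: str) -> None:
        # Nodes are assumed to be added in the order they should run.
        self.nodes.append((calculator, input_stream, output_stream))

    def run(self, input_stream: str, packet: object) -> Dict[str, object]:
        # Push one packet through every node and return the contents of all streams.
        streams: Dict[str, object] = {input_stream: packet}
        for calculator, src, dst in self.nodes:
            streams[dst] = calculator(streams[src])
        return streams


graph = Graph()
# First calculator: scale 8-bit pixel intensities to the range [0, 1].
graph.add_node(lambda frame: [p / 255 for p in frame], "input_frame", "normalized")
# Second calculator: reduce the normalized pixels to a mean brightness.
graph.add_node(lambda px: sum(px) / len(px), "normalized", "mean_brightness")

streams = graph.run("input_frame", [0, 255, 255, 0])
print(streams["mean_brightness"])  # 0.5
```

A real MediaPipe graph also handles timestamps, several inputs and outputs per calculator, and scheduling, all of which this sketch leaves out.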

OpenCV, for its part, is a computer vision library that provides image processing algorithms for tasks such as object detection. Written in C++ with Python bindings, it lets developers build real-time computer vision applications.

It is used chiefly for image and video processing and analysis, including face and object detection.
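On the image-processing side, the project turns tracked fingertip positions into strokes on a virtual canvas. The following is a minimal sketch of that bookkeeping, not the authors' implementation. It assumes fingertip positions arrive as normalized `(x, y)` pairs in the range [0, 1], one per frame, with `None` standing for a frame where the pen is lifted or no hand is visible. The helper names `to_pixels` and `build_strokes` and the 640x480 canvas size are hypothetical.

```python
from typing import List, Optional, Sequence, Tuple

Point = Tuple[int, int]
Sample = Optional[Tuple[float, float]]


def to_pixels(x_norm: float, y_norm: float, width: int, height: int) -> Point:
    """Map normalized [0, 1] coordinates to pixel coordinates, clamped to the canvas."""
    x = min(max(int(x_norm * width), 0), width - 1)
    y = min(max(int(y_norm * height), 0), height - 1)
    return (x, y)


def build_strokes(samples: Sequence[Sample], width: int, height: int) -> List[List[Point]]:
    """Group consecutive fingertip samples into strokes; a None sample ends a stroke."""
    strokes: List[List[Point]] = []
    current: List[Point] = []
    for sample in samples:
        if sample is None:
            # Pen lifted: close the stroke in progress, if any.
            if current:
                strokes.append(current)
                current = []
            continue
        current.append(to_pixels(sample[0], sample[1], width, height))
    if current:
        strokes.append(current)
    return strokes


samples = [(0.25, 0.5), (0.5, 0.5), None, (0.75, 0.25), (1.0, 1.0)]
print(build_strokes(samples, 640, 480))
# [[(160, 240), (320, 240)], [(480, 120), (639, 479)]]
```

With OpenCV, each consecutive pair of points in a stroke could then be drawn onto a NumPy image with `cv2.line`. That step is omitted here so the sketch runs without a camera or external libraries.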

Combining MediaPipe and OpenCV provides the basis for gesture-based interaction in this project: MediaPipe supplies the hand tracking and OpenCV supplies the image processing. With these tools, developers can build applications that extend the boundaries of human-computer interaction.
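To make the hand-tracking side more concrete, here is a minimal sketch of how a gesture might be derived from tracked hand landmarks. The paper does not specify its gesture set, so the mapping below (index finger alone means "draw", index and middle fingers together mean "move") is an assumption for illustration, and `fingers_up` and `classify` are hypothetical helper names. The landmark indices follow the 21-point layout of the MediaPipe Hands model, in which 8, 12, 16 and 20 are the fingertips and 6, 10, 14 and 18 are the corresponding PIP joints.

```python
from typing import List, Sequence, Tuple

# Fingertip and PIP-joint indices in the 21-landmark MediaPipe Hands layout.
# The thumb is left out because its test depends on handedness and orientation.
FINGER_TIPS = {"index": 8, "middle": 12, "ring": 16, "pinky": 20}
FINGER_PIPS = {"index": 6, "middle": 10, "ring": 14, "pinky": 18}


def fingers_up(landmarks: Sequence[Tuple[float, float]]) -> List[str]:
    """Return the fingers whose tip lies above its PIP joint (image y grows downward)."""
    if len(landmarks) != 21:
        raise ValueError("expected 21 (x, y) landmarks")
    return [
        name
        for name, tip in FINGER_TIPS.items()
        if landmarks[tip][1] < landmarks[FINGER_PIPS[name]][1]
    ]


def classify(landmarks: Sequence[Tuple[float, float]]) -> str:
    """Assumed gesture mapping: index only -> draw, index + middle -> move, else idle."""
    up = fingers_up(landmarks)
    if up == ["index"]:
        return "draw"
    if up == ["index", "middle"]:
        return "move"
    return "idle"


# Synthetic hand: every landmark at the same height, then raise selected fingertips.
hand = [(0.5, 0.5)] * 21
hand[8] = (0.5, 0.2)    # raise the index fingertip
print(classify(hand))   # draw
hand[12] = (0.55, 0.2)  # raise the middle fingertip as well
print(classify(hand))   # move
```

In a live system the `(x, y)` pairs would come from the hand tracker on each frame. The tip-above-joint test assumes an upright hand facing the camera, which is a simplification.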

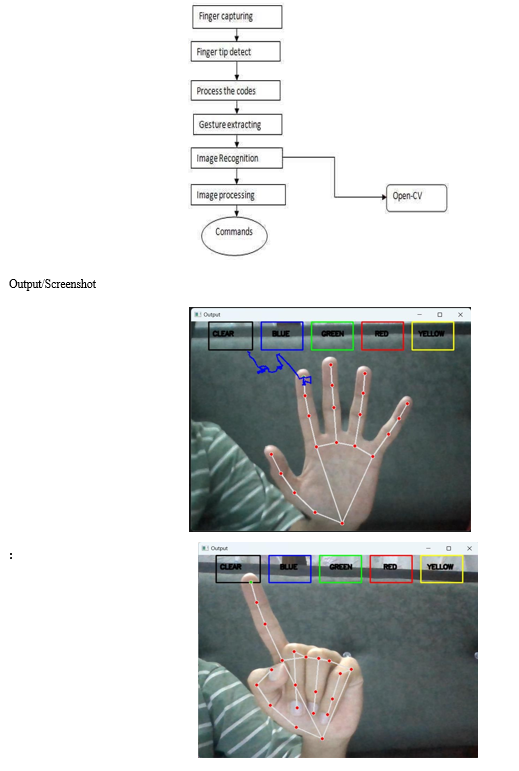

IV. PROPOSED METHODOLOGY

This section describes the technique used in each component of the system, one component at a time, in the following subsections:

V. FUTURE SCOPE

The AI Virtual Mouse project holds significant potential for future enhancements and expansions, making it a versatile platform for creative expression. Here are several avenues for extending the capabilities of the system:

- Gesture Customization: Empowering users to define and customize their own gestures for specific actions is a crucial step towards enhancing user engagement and personalization. This feature would enable users to tailor the system to their preferences, fostering a more intuitive and user-friendly experience.

- Additional Features: Introducing additional features such as erasing, text input, and shape recognition would significantly broaden the scope of creative possibilities. Erasing gestures can allow users to seamlessly correct or modify their drawings, while text input and shape recognition could add versatility to the types of artwork that can be created.

- Multi-User Collaboration: Enabling multiple users to collaborate on the same canvas simultaneously enhances the social aspect of the application. This feature promotes shared artistic experiences, making the AI Virtual Mouse not only a tool for individual creativity but also a platform for collaborative art projects.

- 3D Drawing: Expanding the project to support three-dimensional drawing in virtual space would introduce a new dimension to the artistic experience. Users could create sculptures and drawings with depth, adding a layer of immersion and realism to their creations.

- Integration with VR/AR: Exploring integration with virtual reality (VR) or augmented reality (AR) devices represents a natural progression for the project. By leveraging VR/AR technologies, users could immerse themselves in a virtual art studio, enhancing the intuitive and interactive aspects of the painting environment.

In conclusion, the proposed future developments aim to transform the AI Virtual Mouse into a comprehensive and adaptable tool for digital art creation. By incorporating user customization, expanding features, promoting collaboration, embracing three-dimensional space, and integrating with immersive technologies, the project can reach new heights in redefining the boundaries of virtual artistic expression.

Conclusion

In conclusion, the AI Virtual Mouse project represents a significant leap forward in redefining digital art creation through innovative gesture-based interaction. The integration of hand tracking and computer vision technologies has paved the way for a hands-free and intuitive painting experience. As the project currently stands, it offers users the ability to draw on a virtual canvas by translating their hand gestures into artistic strokes.

Looking ahead, the identified future scope highlights the commitment to continuous improvement and adaptation to user needs. The prospect of gesture customization introduces a personal touch, allowing users to define their own gestures for specific actions, fostering a sense of ownership and individuality. The addition of features such as erasing, text input, and shape recognition expands the creative palette, making the platform more versatile and capable of accommodating diverse artistic expressions. The envisioned multi-user collaboration feature introduces a social dimension to the project, turning it into a shared canvas where artistic ideas can be exchanged and developed collaboratively. Furthermore, the exploration of three-dimensional drawing in virtual space enhances the immersive qualities of the system, enabling users to create art with depth and perspective. In the ever-evolving landscape of technology, the integration with virtual reality (VR) or augmented reality (AR) devices emerges as a logical progression. This step aims to provide users with an even more immersive and intuitive painting environment, unlocking new possibilities for artistic exploration.

In essence, the AI Virtual Mouse project, while currently a powerful tool for digital art creation, is poised for further evolution. The commitment to user-centric customization, collaborative features, three-dimensional capabilities, and integration with emerging technologies positions this project as a dynamic and adaptable platform for the future of digital artistic expression. As the development continues, it holds the promise of revolutionizing how individuals engage with and create art in the digital realm.

Copyright

Copyright © 2023 Jasdeep Singh, Sahil Goyal, Bhavik Kwatra, Sandeep Kaur. This is an open access article distributed under the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

Paper Id : IJRASET56223

Publish Date : 2023-10-19

ISSN : 2321-9653

Publisher Name : IJRASET
