Ijraset Journal For Research in Applied Science and Engineering Technology

Virtual try-on using Open-CV

Authors: Prof. Dr. Sonali Antad, Vincent Bardekar, Ganesh Damre, Bhumika Chule, Durgesh Dhurve

DOI Link: https://doi.org/10.22214/ijraset.2024.62234

Abstract

Virtual Try-On (VTO) technology has drawn considerable interest recently as a potentially effective way to improve online shopping experiences, reduce the need for in-person trials, and raise customer satisfaction. This report analyses state-of-the-art methods, challenges, and possible future opportunities in the virtual try-on industry. Future directions include integrating augmented reality (AR) for real-time virtual fitting in physical environments, using deep learning to improve the accuracy of cloth simulation, and building an effective, user-friendly VTO system that boosts customer satisfaction. Our aim is to create a reliable e-commerce platform that enables customers to try on clothing wherever and whenever they like, with the necessary privacy and security. The report concludes by emphasizing the potential of VTO to overcome constraints of time and place, so that customers can try on clothing at their convenience. This vision aligns with the overarching goal of a reliable e-commerce platform that meets and exceeds customer expectations. The findings and recommendations provide a roadmap for industry stakeholders to navigate the challenges and seize the opportunities of online retail.

I. INTRODUCTION

With a market volume of $598,631 million in 2019 and an anticipated market volume of $835,781 million by 2023, fashion is the largest market category and has seen revenue growth recently. Online shopping for clothing has increased accordingly. Even though buying clothes online offers a number of advantages over traditional methods, many shoppers still prefer the in-person experience, where they can inspect and try on clothes before buying them. A virtual fitting environment, which lets customers try on several outfits without physically wearing them, is therefore valuable [1].

This paper discusses a variety of VTO methods, both image-based and 3D-based. Image-based methods concentrate on transferring clothing items onto user photos, while 3D-based approaches build virtual avatars or models that closely imitate garment fitting. Image synthesis techniques such as generative adversarial networks (GANs) have made realistic texture and appearance transfer possible, producing visually convincing virtual try-on results. 3D-based methods, in contrast, use precise body scanning and clothing modelling to provide more realistic fitting simulations. The main challenges in VTO are accurate body modelling, handling different fabric characteristics, accounting for user-specific variation, and real-time interaction. Addressing them requires advances in body scanning technology, fabric deformation algorithms, and user-adaptive modelling. To promote responsible adoption, ethical issues of data security, privacy, and possible misuse of VTO technology must also be addressed.

An image-based virtual try-on system aims to replace specific clothing items in a photo with a chosen fashion item. Earlier work falls into two categories. The first generates images directly with a GAN; its main problems are the instability of the GAN model and the difficulty of retaining garment details. The second warps the clothes with a thin plate spline and feeds the warped clothes into the network to obtain the desired images [7].

Real-world examples exist in India as well, such as the Lenskart app, which uses the camera to let customers try on glasses online before buying. Zeekit and StyleMe are among the apps that allow consumers to try on items virtually. In contrast to Snapchat, YouTube primarily uses influencer content and paid advertisements for AR try-ons. To make this possible, Google has partnered with a number of businesses in the beauty technology sector, including Perfect Corp. e.l.f. Cosmetics has had great success with its AR campaigns on YouTube, as has L'Oréal. Over 55% of social media users in the US between the ages of 18 and 24, and about 48% of users between the ages of 25 and 34, have bought something on social media. This work is our modest attempt to create an independent e-commerce website that will attract customers of all ages through its distinctive feature: trying clothes on virtually in order to make better purchasing decisions. As technologies such as AR and VR advance, a website of this kind may eventually become obsolete.

II. LITERATURE SURVEY

This section describes prior work on virtual try-on, based on technologies and algorithms developed by researchers around the world.

Cong Yang, Yangjie Cao, Bo Zhang, Jie Li, and Xiaoyang Lv propose MS-VTON, which divides the virtual try-on procedure into two stages to improve the semantic coherence and visual realism of the generated images [5]. MS-VTON comprises two networks: the Scene Learning Network (SLN) and the Content Learning Network (CLN). SLN first generates approximate try-on images by learning the semantics of try-on scenes; CLN then refines details such as garment textures. In experiments, MS-VTON outperforms other image-to-image translation techniques, achieving an FID score of 9.8 [5]. The SLN and CLN generators share the same configuration, which is based on the U-Net encoder-decoder network. Each of the two discriminators has five Conv-Norm-LeakyReLU blocks, although their layer configurations differ.

V Krishna Ganesh, S B Bindu, and M Amarnath use a multi-stage warping module that combines feature-level and pixel-level transformation to provide realistic texture and reliable alignment. To support body occlusion and pose control, they present a semantic segmentation prediction module. They also provide a module that uses the reference image to generate an arm image, which is modified after try-on.

LA-VITON, created by Hyug Jae Lee, Rokkyu Lee, Minseok Kang, Myounghoon Cho, and Gunhan Park, is a network for visually appealing virtual try-on, published in 2019 in the paper "LA-VITON: A Network for Looking-Attractive Virtual Try-On" [4]. It generates high-quality try-on images while preserving the overall style and attributes of the clothing item. The proposed network consists of two modules: the Geometric Matching Module (GMM) and the Try-On Module (TOM) [4]. One of the main goals of GMM is to align clothes while preserving their characteristics, using Rocco's end-to-end trainable geometric matching framework [13].

Davide Marelli, Simone Bianco, and Gianluigi Ciocca [6] note that the most popular virtual try-on tools for makeup and facial accessories include Glassify from XLabz, Ditto's Virtual Try-on, and YouCam Makeup from Perfect Corp. They also review the most widely used techniques for 3D face reconstruction from a single image, such as 3D Morphable Model (3DMM) fitting and shape regression.

The approach put forward in [2] also merits emphasis. DP-VTON, by Yuan Chang, Tao Peng, Ruhan He, Xinrong Hu, Junping Liu, Zili Zhang, and Minghua Jiang [1], describes a synthesis network with two encoders in order to preserve the details of clothes and body parts. The quality of the generated try-on images is evaluated with the structural similarity index (SSIM), Inception Score (IS), Fréchet Inception Distance (FID), and Peak Signal-to-Noise Ratio (PSNR). For FID, lower scores are better; for SSIM, IS, and PSNR, higher scores are better. Table 1 shows the results of CP-VTON, ACGPN, and DP-VTON for pairwise structural similarity (SSIM) and image quality (IS, FID, and PSNR). According to these quantitative measures, DP-VTON performs better than the other methods.

Table 1: SSIM, IS, FID, and PSNR values of different algorithms

| Method | SSIM | IS | FID | PSNR |
|---|---|---|---|---|
| CP-VTON | 0.745 | 2.757 | 19.108 | 21.111 |
| ACGPN | 0.845 | 2.829 | 14.420 | 23.067 |
| DP-VTON | 0.871 | 3.044 | 8.726 | 25.278 |
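As an illustration of one of the metrics reported in Table 1, PSNR can be computed from the mean squared error between a reference image and a generated image. The sketch below is a minimal example and not the evaluation code of the cited papers; the function name `psnr`, the flat-list image representation, and the sample pixel values are assumptions made for illustration.

```python
import math


def psnr(reference, generated, max_value=255.0):
    """Peak signal-to-noise ratio in dB between two equally sized images.

    Both images are given as flat sequences of pixel intensities.
    """
    if len(reference) != len(generated):
        raise ValueError("images must have the same number of pixels")
    # Mean squared error over all pixels.
    mse = sum((r - g) ** 2 for r, g in zip(reference, generated)) / len(reference)
    if mse == 0:
        return math.inf  # identical images
    return 10.0 * math.log10(max_value ** 2 / mse)


# Hypothetical 4-pixel grayscale images, for illustration only.
reference = [52, 55, 61, 59]
generated = [54, 55, 60, 57]
print(round(psnr(reference, generated), 2))  # 44.61
```

A higher value means the generated image is closer to the reference, which matches the "higher is better" reading of the PSNR column.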

Table 2: Comparison on dataset between different methods

| Method | CP-VTON | Pose-Guide | Fit-Me | Real Images |
|---|---|---|---|---|
| Mean | 2.5733 | 3.0064 | 3.3360 | 3.6744 |
| Variance | 0.0050 | 0.0094 | 0.0162 | 0.0171 |

Table 3: FID scores for different models

| Method | Single attribute: side | Single attribute: back | Single attribute: white | Single attribute: black | Multiple attributes |
|---|---|---|---|---|---|
| Pix2pix | 32.83 | 30.41 | 31.12 | 20.13 | - |
| CycleGAN | 17.27 | 18.83 | 18.73 | 19.82 | - |
| MS-VTON | 7.91 | 9.71 | 8.13 | 9.57 | 9.80 |

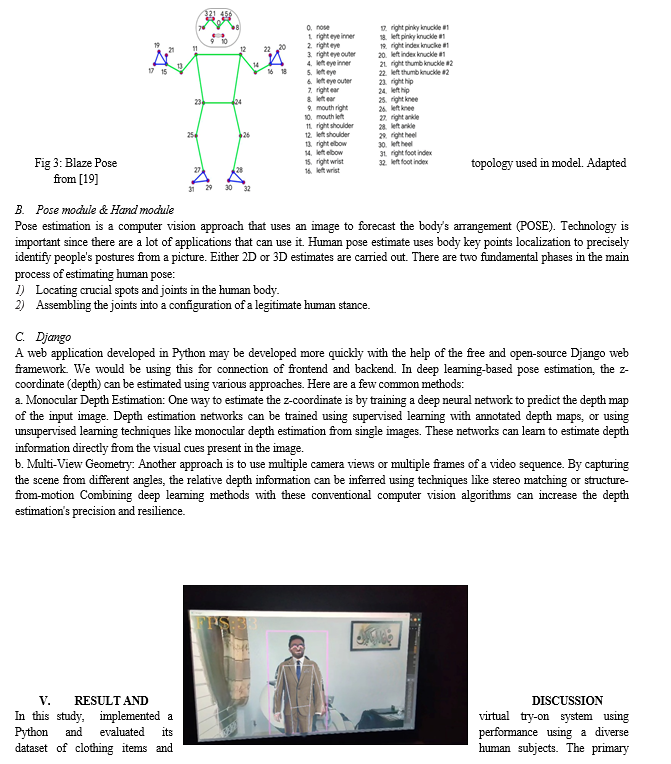

Virtual try-on technology can be applied to a range of products, including watches, sunglasses, eyeglasses, tinted cosmetics, and many more that will eventually become available. The surveyed work also includes a network that improves visual detail retention and addresses the issue of missing body parts. Tests on standard clothing benchmarks have shown the viability of various approaches, although further validation is yet to come.

The tables above provide a starting point for comparing various algorithms with the leading MS-VTON features [5].
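For reference, the Fréchet Inception Distance used in the tables is commonly defined as the distance between two Gaussians fitted to Inception features of real and generated images. The notation below is introduced here for illustration and is not taken from the cited papers: $\mu_r, \Sigma_r$ are the mean and covariance of features from real images, and $\mu_g, \Sigma_g$ those from generated images.

```latex
\mathrm{FID} = \lVert \mu_r - \mu_g \rVert_2^2
  + \operatorname{Tr}\!\left( \Sigma_r + \Sigma_g - 2\left( \Sigma_r \Sigma_g \right)^{1/2} \right)
```

The value is zero when the two feature distributions have identical means and covariances, which is why lower FID scores indicate better results.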

III. PROPOSED METHOD

Some drawbacks of the current generation of virtual try-on solutions were covered in the previous section. Our goal with the proposed method is to develop a system that lets the user try on several outfits starting from a single picture taken with the camera of a computer or mobile device. The system is implemented in Python using OpenCV. After the reconstruction stage, a few key body locations are identified in order to estimate the parameters for the garment fitting phase. To place the selected clothing correctly on the reconstructed mesh, the size, translation, and orientation are computed for a database of different eyewear models. Let's take a closer look:

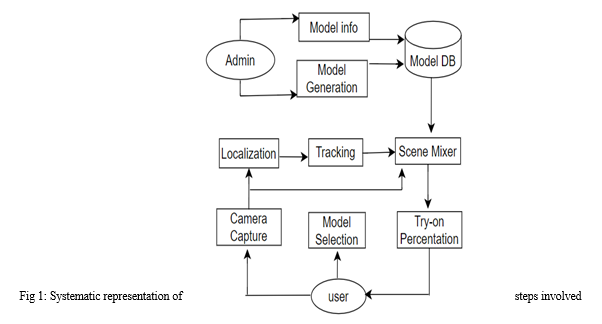

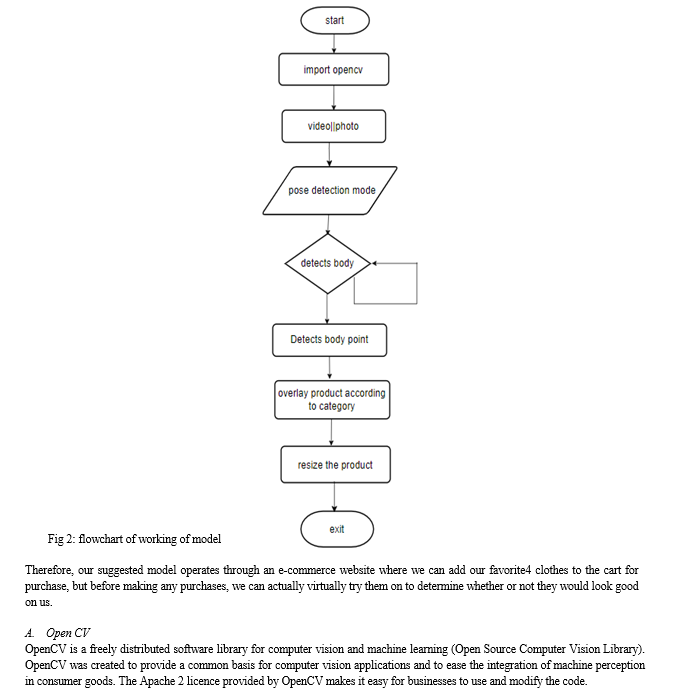
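The fitting step described above, in which size, translation, and orientation are estimated from key body locations, could be sketched as follows. This is a simplified 2D illustration under stated assumptions, not the authors' implementation: it assumes two shoulder keypoints are already available in pixel coordinates, and the function name `placement_from_shoulders` and the parameter `garment_shoulder_px` are hypothetical.

```python
import math


def placement_from_shoulders(left, right, garment_shoulder_px):
    """Return (scale, angle_deg, center) for drawing a garment over two keypoints.

    left, right: (x, y) pixel coordinates of the shoulders as they appear in the image.
    garment_shoulder_px: shoulder-to-shoulder distance in the garment image, in pixels.
    """
    dx = right[0] - left[0]
    dy = right[1] - left[1]
    body_shoulder_px = math.hypot(dx, dy)
    if body_shoulder_px == 0 or garment_shoulder_px <= 0:
        raise ValueError("keypoints must be distinct and garment width positive")

    # Size: how much the garment image must be scaled to span the shoulders.
    scale = body_shoulder_px / garment_shoulder_px
    # Orientation: tilt of the shoulder line; image y grows downward, so a
    # positive angle means the right-hand keypoint is lower in the picture.
    angle_deg = math.degrees(math.atan2(dy, dx))
    # Translation: anchor the garment at the midpoint between the shoulders.
    center = ((left[0] + right[0]) / 2.0, (left[1] + right[1]) / 2.0)
    return scale, angle_deg, center


# Hypothetical keypoints: level shoulders 200 px apart, garment drawn 100 px wide.
scale, angle_deg, center = placement_from_shoulders((200, 300), (400, 300), 100)
print(scale, angle_deg, center)  # 2.0 0.0 (300.0, 300.0)
```

The three returned values map directly onto the size, orientation, and translation named in the text; a full system would obtain the keypoints from a pose estimator and might fit a 3D transform instead.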

IV. SYSTEM ARCHITECTURE

The diagram below outlines the architecture of our model, which involves camera capture, localization, and try-on presentation. The accompanying flowchart shows how the system works.
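The three stages named here (camera capture, localization, try-on presentation) can be illustrated with a short OpenCV loop. This is only a sketch under assumptions, not the system described in the paper: the garment is assumed to be a PNG with an alpha channel, `locate_anchor` is a hypothetical callback standing in for the localization stage, and the function names are invented for illustration.

```python
import numpy as np


def overlay_bgra(frame, garment_bgra, top_left):
    """Alpha-blend a BGRA garment image onto a BGR frame, clipping at the borders."""
    x, y = top_left
    h, w = garment_bgra.shape[:2]
    fh, fw = frame.shape[:2]
    # Clip the garment rectangle to the frame.
    x0, y0 = max(x, 0), max(y, 0)
    x1, y1 = min(x + w, fw), min(y + h, fh)
    out = frame.copy()
    if x0 >= x1 or y0 >= y1:
        return out  # garment lies completely outside the frame
    patch = garment_bgra[y0 - y:y1 - y, x0 - x:x1 - x].astype(np.float32)
    alpha = patch[..., 3:4] / 255.0  # per-pixel opacity in [0, 1]
    region = out[y0:y1, x0:x1].astype(np.float32)
    blended = alpha * patch[..., :3] + (1.0 - alpha) * region
    out[y0:y1, x0:x1] = np.rint(blended).astype(np.uint8)
    return out


def run_try_on(garment_path, locate_anchor, camera_index=0):
    """Capture frames, localize the garment position, and present the result."""
    import cv2  # imported here so overlay_bgra can be used without OpenCV installed

    garment = cv2.imread(garment_path, cv2.IMREAD_UNCHANGED)
    if garment is None or garment.ndim != 3 or garment.shape[2] != 4:
        raise ValueError("expected an image file with an alpha channel")
    cap = cv2.VideoCapture(camera_index)  # camera capture
    try:
        while True:
            ok, frame = cap.read()
            if not ok:
                break
            top_left = locate_anchor(frame)  # localization (hypothetical callback)
            cv2.imshow("Virtual try-on", overlay_bgra(frame, garment, top_left))
            if cv2.waitKey(1) & 0xFF == ord("q"):  # press q to quit
                break
    finally:
        cap.release()
        cv2.destroyAllWindows()
```

Keeping the blending step separate from the capture loop means the overlay logic can be checked on synthetic arrays without a camera.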

VI. FUTURE SCOPE

Our model offers customers a realistic try-on experience, but it has limitations that leave room for further improvement. There is little doubt that websites offering virtual trials are needed today. Areas that require attention include the time it takes for clothing to adapt to a user's body, the accuracy of the fit, and the transition from trial runs to real-world use. In the future, we plan to enhance our virtual try-on system by incorporating machine learning-based fashion recommendation algorithms that suggest clothing combinations matching a user's style and preferences. We will also continue to collect user feedback to refine and optimize the system.

Conclusion

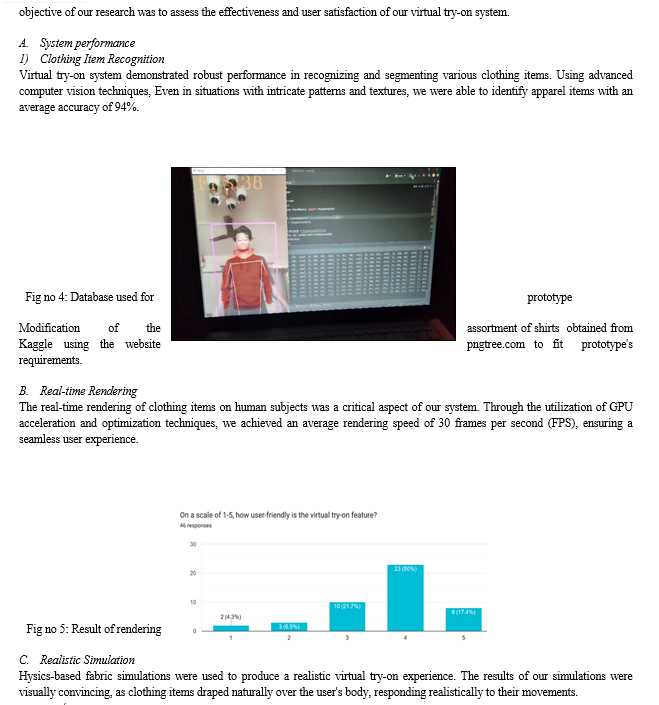

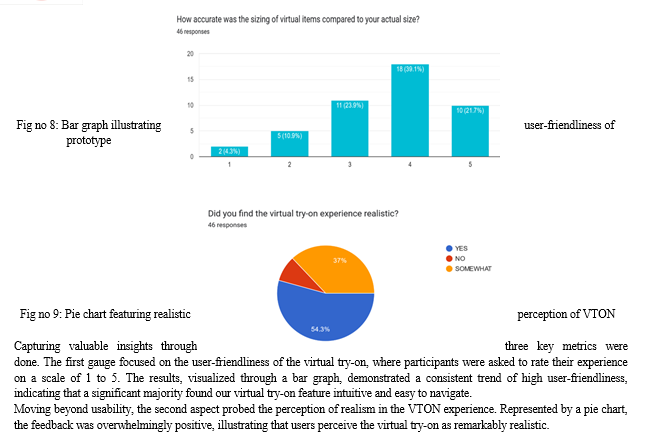

In this project, we have successfully designed and implemented a virtual try-on system in Python, using computer vision and physics-based simulations to enable users to visualize clothing items on themselves in real time. Our evaluation of the system's performance and user satisfaction has yielded valuable insights into the capabilities and limitations of our virtual try-on solution. The system's robust performance in clothing item recognition, real-time rendering, and realistic simulation demonstrates its potential to change the way consumers interact with fashion in the digital age. The high levels of user satisfaction, as evidenced by user surveys and qualitative feedback, underline the system's usability and effectiveness in providing a realistic and enjoyable shopping experience. Looking ahead, the possibilities for expanding and improving our virtual try-on system are vast. We envision integrating machine learning-based fashion recommendation algorithms to provide users with personalized clothing suggestions and outfit combinations. We will also explore the integration of augmented reality (AR) and virtual reality (VR) technologies to create even more immersive and engaging shopping experiences. In conclusion, our project represents a significant step towards the future of online fashion retail by bridging the gap between physical and digital shopping experiences. We are excited about the potential of our virtual try-on system to empower consumers, enhance their online shopping journey, and contribute to the evolution of the fashion industry. As we continue to innovate and refine our system, we anticipate even greater strides in the field of virtual try-on technology, ultimately benefiting both consumers and the fashion industry as a whole.

References

[1] Yuan Chang, Tao Peng, Ruhan He, Xinrong Hu, Junping Liu, Zili Zhang, Minghua Jiang, “DP-VTON: Toward Detail-Preserving Image-Based Virtual Try-On Network”, ICASSP 2021 - 2021 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Wuhan Textile University, Wuhan, China.
[2] Chia-Wei Hsieh, Chieh-Yun Chen, Chien-Lung Chou, Hong-Han Shuai, Wen-Huang Cheng, “Fit-Me: Image-Based Virtual Try-On with Arbitrary Poses”, ICIP 201, National Chiao Tung University.
[3] Shizuma Kubo, Yusuke Iwasawa, Masahiro Suzuki, Yutaka Matsuo, “UVTON: UV Mapping to Consider the 3D Structure of a Human in Image-Based Virtual Try-On Network”, 2019 IEEE/CVF International Conference on Computer Vision Workshop (ICCVW), The University of Tokyo.
[4] Hyug Jae Lee, Rokkyu Lee, Minseok Kang, Myounghoon Cho, Gunhan Park, NHN Corp, “LA-VITON: A Network for Looking-Attractive Virtual Try-On”, 2019 IEEE/CVF International Conference on Computer Vision Workshop (ICCVW).
[5] Xiaoyang Lv, Bo Zhang, Jie Li, Yangjie Cao, Cong Yang, “Multi-Scene Virtual Try-on Network Guided by Attributes”, 2021 IEEE International Conference on Consumer Electronics and Computer Engineering (ICCECE 2021).
[6] Davide Marelli, Simone Bianco, Gianluigi Ciocca, “A Web Application for Glasses Virtual Try-on in 3D Space”, University of Milano - Bicocca, viale Sarca 336, 20126 Milano, Italy, 2019 IEEE 23rd International Symposium on Consumer Technologies (ISCT).
[7] Feng Sun, Jiaming Guo, Zhuo Su, Chengying Gao, “Image-Based Virtual Try-On Network with Structural Coherence”, 2019 IEEE, Sun Yat-sen University, Guangzhou, China.
[8] B. Wang, H. Zheng, X. Liang, Y. Chen, L. Lin, and M. Yang, “Toward Characteristic-Preserving Image-based Virtual Try-On Network”, ECCV, 2018.
[9] Han Yang, Ruimao Zhang, Xiaobao Guo, Wei Liu, Wangmeng Zuo, and Ping Luo, “Towards photo-realistic virtual try-on by adaptively generating preserving image content”, in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2020, pp. 7850–7859.
[10] Zeng Fan-zhi, Zou Lei, Zhou Yan, Qiu Teng-da, Chen Jia-wen, “Application of Lightweight GAN Super-resolution Image Reconstruction Algorithm in Real-time Face Recognition”, Journal of Chinese Computer Systems, 2020, 41(9): 1993-1998.
[11] Jandial S, Chopra A, Ayush K, et al., “SieveNet: A Unified Framework for Robust Image-Based Virtual Try-On”, The IEEE Winter Conference on Applications of Computer Vision, 2020: 2182-2190.
[12] Xintong Han, Zuxuan Wu, Zhe Wu, Ruichi Yu, and Larry S Davis, “VITON: An image-based virtual try-on network”, in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2018, pp. 7543–7552.
[13] Ignacio Rocco, Relja Arandjelovic, and Josef Sivic, “Convolutional neural network architecture for geometric matching”, in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2017, pp. 6148–6157.
[14] Nadia Magnenat-Thalmann, Pascal Volino, Bart Kevelham, Mustafa Kasap, Qui Tran, Marlène Arévalo, Ghana Priya, and Nedjma Cadi, “An Interactive Virtual Try On”, IEEE Virtual Reality 2011, 19-23 March, Singapore, 978-1-4577-0038-5/11/$26.00 ©2011 IEEE.
[15] Hongqiang Zhu, Jing Tong, Luosheng Zhang, and Xiao Zou, “Research and Development of Virtual Try-on System Based on Mobile Platform”, 978-1-5386-2636-8/19/$31.00 ©2019 IEEE, DOI 10.1109/ICVRV.2017.00098.
[16] ?an Güneş, Okan Şanlı, Övgü Öztürk Ergün, “Augmented Reality Tool for Markerless Virtual Try-on around Human Arm”, Bahçeşehir University, SUNAGU Engineering Consultancy Education Ltd, 978-1-4673-9628-8/15 $31.00 © 2015 IEEE, DOI 10.1109/ISMAR-MASH'D.2015.25.
[17] Zheng Shou, Binqiang Yu, Gang Chen, Hengjin Cai, and Qiaochu Liu, “Key Designs in Implementing Online 3D Virtual Garment Try-on System”, International School of Software, Wuhan University, 978-0-7695-5079-4/13 $26.00 © 2013 IEEE, DOI 10.1109/ISCID.2013.46.
[18] Yan Jiang, Zhengdong Liu, Chunli Chen, “Modeling Human Body for Virtual Try-On System”, Computer Information Center, Beijing Institute of Fashion Technology, Beijing, P. R. China, 978-0-7695-3571-5/09 $25.00 © 2009 IEEE, DOI 10.1109/GCIS.2009.333.
[19] https://blog.research.google/2020/08/on-device-real-time-body-pose-tracking.html?hl=zh_CN

Copyright

Copyright © 2024 Prof. Dr. Sonali Antad, Vincent Bardekar, Ganesh Damre, Bhumika Chule, Durgesh Dhurve. This is an open access article distributed under the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

Paper Id : IJRASET62234

Publish Date : 2024-05-16

ISSN : 2321-9653

Publisher Name : IJRASET
