Ijraset Journal For Research in Applied Science and Engineering Technology

Vision Assisted Robotic Arm Using Arduino

Authors: Mr. Khan Mohammad Tahir, Mr. Mohammed Yusuf, Mr. Chaudhary Shahbaz, Mr. Bakhed Avesh, Prof. Monika Pathare

DOI Link: https://doi.org/10.22214/ijraset.2024.59740

Abstract

In the era of automation, the fusion of robotics and computer vision offers promising solutions for sustainable industrial practices. This paper presents the development and implementation of a vision-assisted robotic arm system that sorts objects by color. By combining robotic manipulation with computer vision, the system shows potential to optimize industrial processes while promoting environmental sustainability. The paper discusses the construction of the system, the experimental results, the insights gained, and the future implications of the project, highlighting its contributions to the Sustainable Development Goals.

I. INTRODUCTION

In the age of automation, we invite you to step into a world where machines "see" and "decide." Our project represents an exciting exploration into the integration of robotics and computer vision, with a specific focus on object sorting by color. Picture a robotic arm that can recognize and categorize objects based on their hues, simplifying processes in industries ranging from manufacturing to recycling.

The proposed solution involves the design, implementation, and optimization of an automated system using a vision-assisted robotic arm controlled by Python. By integrating robotics and computer vision, the system offers a sustainable approach to industrial automation, aligning with the objectives of the United Nations' Sustainable Development Goals (SDGs).
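
The paper states that the arm is controlled from Python but does not describe how commands reach the Arduino. A common arrangement, assumed here, is for the Python program to send one text line per move over USB serial, which the Arduino sketch then parses before driving the servos. The line format below (a bin index followed by servo angles in degrees) and the function names are hypothetical, a minimal sketch rather than the authors' protocol.

```python
# Hypothetical line protocol between the Python vision program and the Arduino
# (an assumption for illustration, not the authors' format):
#   "<bin_index>,<angle_1>,...,<angle_n>\n" sent as ASCII text.


def encode_command(bin_index: int, servo_angles: list[int]) -> bytes:
    """Encode a target bin and servo angles (degrees) as one ASCII line."""
    if bin_index < 0:
        raise ValueError(f"bin index must be non-negative: {bin_index}")
    for angle in servo_angles:
        # The Arduino Servo library's write() expects angles from 0 to 180.
        if not 0 <= angle <= 180:
            raise ValueError(f"servo angle out of range: {angle}")
    fields = [str(bin_index)] + [str(angle) for angle in servo_angles]
    return (",".join(fields) + "\n").encode("ascii")


def decode_command(line: bytes) -> tuple[int, list[int]]:
    """Parse a line produced by encode_command (mirrors a firmware-side parser)."""
    parts = line.decode("ascii").strip().split(",")
    return int(parts[0]), [int(part) for part in parts[1:]]
```

With the pyserial package, sending a move could look like `serial.Serial("/dev/ttyUSB0", 9600).write(encode_command(2, [90, 45, 120]))`, where the port name and baud rate are placeholders; many Arduino boards reset when the port is opened, so a short delay before the first write is common. On the Arduino side, `Serial.readStringUntil('\n')` can read one such line before the fields are split and passed to each servo's `write()` method. A plain-text format is chosen here because it can be inspected directly in the Arduino Serial Monitor.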

This report walks through the creation of the system, the challenges we faced, and the solutions we devised. Join us in exploring a corner of robotics where technology and vision combine to sort our colorful world.

II. LITERATURE SURVEY

The integration of robotics and computer vision has transformed industrial automation, enabling precise object manipulation and sorting. Previous studies have demonstrated the potential of vision-assisted robotics to improve efficiency, reduce waste, and promote sustainable practices. By using computer vision algorithms for object recognition and color sorting, robotic systems can adapt to dynamic environments and handle diverse objects effectively. This interdisciplinary approach supports sustainable development by reducing resource consumption and improving productivity.
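
The paper does not name its color-recognition algorithm. A common approach, assumed here, is to convert pixel colors to HSV and classify by hue, since hue is less sensitive to brightness than raw RGB values. In an OpenCV pipeline this is typically done with `cv2.cvtColor(frame, cv2.COLOR_BGR2HSV)` followed by `cv2.inRange` masks (OpenCV stores 8-bit hue as 0 to 179). The minimal sketch below uses only the standard-library `colorsys` module so it runs without a camera; the hue ranges, thresholds, and function names are illustrative assumptions, not calibrated values from the paper.

```python
import colorsys

# Illustrative hue ranges in degrees (0-360); these are uncalibrated assumptions
# and would need tuning for a real camera, lighting setup, and set of objects.
HUE_RANGES = {
    "red": [(0.0, 15.0), (345.0, 360.0)],  # red wraps around 0 degrees
    "yellow": [(40.0, 70.0)],
    "green": [(90.0, 150.0)],
    "blue": [(200.0, 260.0)],
}


def mean_rgb(pixels: list[tuple[int, int, int]]) -> tuple[float, float, float]:
    """Average the RGB values (0-255) of the pixels in a detected region."""
    if not pixels:
        raise ValueError("region contains no pixels")
    n = len(pixels)
    r = sum(p[0] for p in pixels) / n
    g = sum(p[1] for p in pixels) / n
    b = sum(p[2] for p in pixels) / n
    return (r, g, b)


def classify_color(rgb, min_saturation=0.4, min_value=0.2):
    """Map an RGB color (0-255 per channel) to a bin label by its hue."""
    r, g, b = (channel / 255.0 for channel in rgb)
    h, s, v = colorsys.rgb_to_hsv(r, g, b)  # h, s, v are all in [0, 1]
    if s < min_saturation or v < min_value:
        return "unknown"  # too grey or too dark to classify reliably
    hue_deg = h * 360.0
    for label, ranges in HUE_RANGES.items():
        for low, high in ranges:
            if low <= hue_deg < high:
                return label
    return "unknown"
```

For example, `classify_color(mean_rgb([(250, 10, 10), (240, 20, 0)]))` averages a reddish region and labels it `"red"`; the returned label could then select the target bin.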

III. IMPLEMENTATION DETAILS

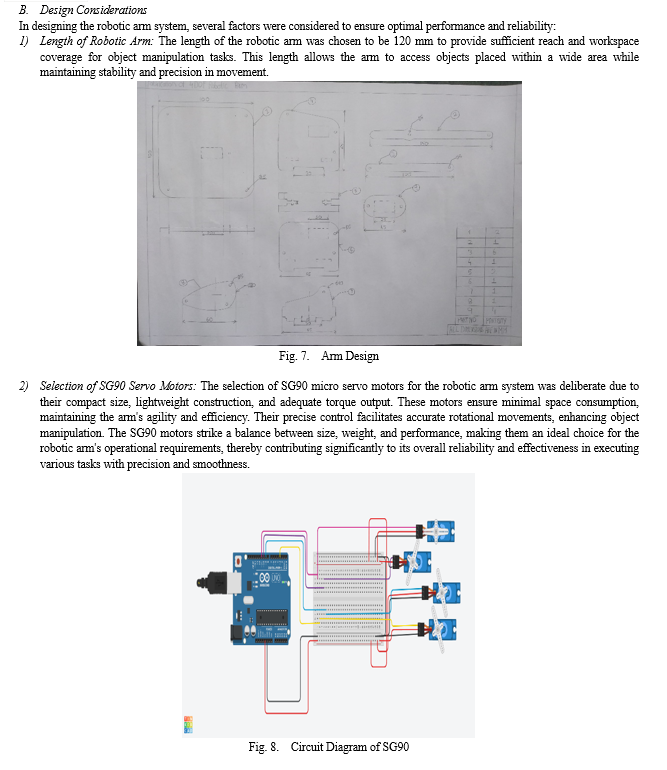

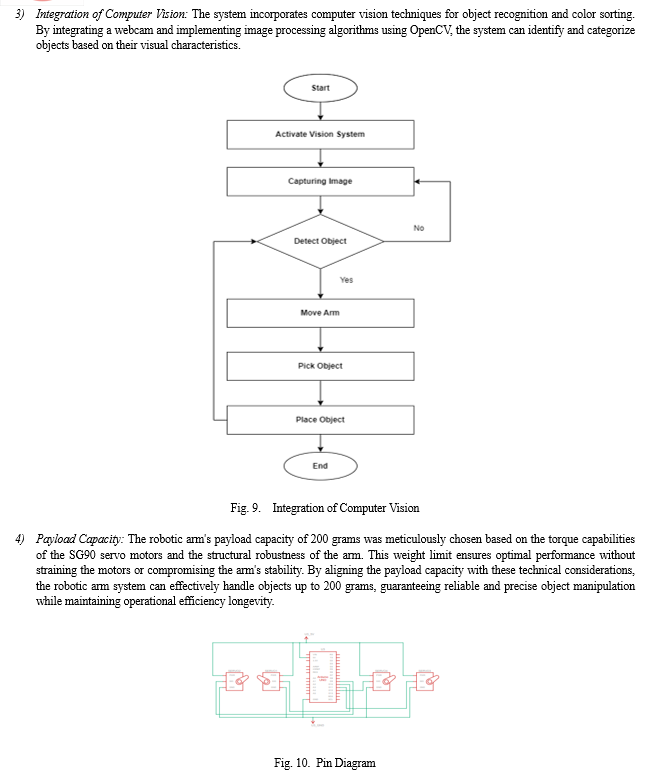

The vision-assisted robotic arm system presented in this paper integrates robotics and computer vision to support sustainable industrial practices. The system is designed to perform object manipulation and sorting tasks efficiently and accurately. This section provides an overview of the hardware components, design considerations, and fundamental formulas used in the implementation of the robotic arm system.
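
The text does not reproduce the formulas it refers to. One standard formula relevant to sizing the servos (and to the torque measurements described in Section IV), assumed here rather than taken from the paper, is the static holding torque at a joint when the arm is extended horizontally: τ = Σ mᵢ g dᵢ, where mᵢ is each mass (payload or link), g is gravitational acceleration, and dᵢ is that mass's horizontal distance from the joint (for a link, to its center of mass). The sketch below ignores acceleration and friction, the function names are hypothetical, and the safety factor of 2 is a rule-of-thumb assumption.

```python
STANDARD_GRAVITY = 9.80665  # m/s^2; also defines 1 kgf = 9.80665 N


def holding_torque_nm(payload_kg, payload_distance_m,
                      link_masses_kg=(), link_com_distances_m=()):
    """Static gravity torque (N*m) about a joint with the arm held horizontal.

    Each mass contributes m * g * d, where d is the horizontal distance from
    the joint to that mass. Dynamic effects (acceleration, friction) are ignored.
    """
    if len(link_masses_kg) != len(link_com_distances_m):
        raise ValueError("each link mass needs a matching distance")
    torque = payload_kg * STANDARD_GRAVITY * payload_distance_m
    for mass, distance in zip(link_masses_kg, link_com_distances_m):
        torque += mass * STANDARD_GRAVITY * distance
    return torque


def nm_to_kgf_cm(torque_nm):
    """Convert N*m to kgf*cm, the unit many hobby-servo datasheets use."""
    return torque_nm / STANDARD_GRAVITY * 100.0


def servo_is_sufficient(required_nm, rated_kgf_cm, safety_factor=2.0):
    """Check a servo's rated torque against the requirement with a margin."""
    return rated_kgf_cm >= safety_factor * nm_to_kgf_cm(required_nm)
```

For instance, a 100 g object held 20 cm from the shoulder joint needs about 0.196 N·m, or 2 kgf·cm, before the arm's own links are counted, so with the assumed safety factor a servo rated below 4 kgf·cm would be marginal at that joint.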

IV. EXPERIMENTAL RESULTS

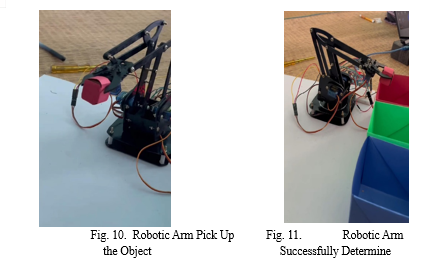

Experimental testing of the vision-assisted robotic arm system yielded promising results in terms of efficiency, accuracy, and sustainability. Across the tests, the system performed object manipulation and sorting tasks precisely and reliably. This section describes the experimental setup, the key findings, and the implications of the results.

A. Experimental Setup

The experimental setup involved testing the vision-assisted robotic arm system under various conditions to assess its performance. The setup included:

1) Object Recognition and Sorting: To evaluate object recognition and sorting, we selected a diverse set of objects of various colors and shapes and placed them within the system's workspace. A high-resolution webcam captured real-time images of these objects, and computer vision algorithms analyzed the images to detect color variations and distinctive shapes, allowing the system to identify and categorize each object by its visual characteristics.

2) Torque Measurement: To assess the arm's mechanical robustness during manipulation, we carried out a series of torque measurements. Objects of varying weight and physical properties were attached to the end-effector, and a torque sensor measured the torque exerted by the servo motors while the arm manipulated each object. These measurements indicated the arm's ability to handle varying loads and execute precise manipulation.

3) Efficiency Testing: The system's overall efficiency was evaluated through a series of sorting tasks. Key performance indicators, namely sorting speed, error rate, and throughput, were measured and recorded throughout the testing phase. The time the system took to complete each sorting task was logged to characterize its operational speed, and the accuracy of object recognition was checked to confirm correct sorting. Together, these measurements were used to assess the system's efficiency, reliability, and overall performance, including its ability to streamline industrial processes.
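
The efficiency indicators listed in item 3 are named without formulas. Assuming each sorting trial is logged as a true color, a predicted color, and a duration, they could be computed as in the minimal sketch below. The `Trial` record, its field names, and the `summarize` function are hypothetical, and the throughput figure assumes tasks run back to back with no idle time between them.

```python
from dataclasses import dataclass


@dataclass
class Trial:
    """One logged sorting attempt (hypothetical log format)."""
    true_color: str       # color of the object actually presented
    predicted_color: str  # color reported by the vision pipeline
    duration_s: float     # time from detection to release in the bin


def summarize(trials: list[Trial]) -> dict[str, float]:
    """Compute error rate, mean cycle time, and throughput from trial logs."""
    if not trials:
        raise ValueError("no trials to summarize")
    n = len(trials)
    errors = sum(1 for t in trials if t.predicted_color != t.true_color)
    total_time = sum(t.duration_s for t in trials)
    if total_time <= 0:
        raise ValueError("total duration must be positive")
    return {
        "error_rate": errors / n,
        "mean_cycle_time_s": total_time / n,
        # Assumes tasks run back to back, so idle time between tasks is ignored.
        "throughput_per_min": 60.0 * n / total_time,
    }
```

As a hypothetical example (not data from the paper), four trials lasting 4, 5, 6, and 5 s with one misclassification give an error rate of 0.25, a mean cycle time of 5 s, and a throughput of 12 objects per minute.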

B. Key Findings

The experimental results revealed several key findings, highlighting the capabilities and performance of the vision-assisted robotic arm system:

- Precise Object Recognition: The computer vision algorithms implemented in the system accurately identified objects based on their colors, enabling reliable sorting and manipulation.

- Efficient Sorting Process: The system demonstrated high sorting speed and throughput, effectively reducing the time required to complete sorting tasks. This efficiency is essential for optimizing production processes and enhancing productivity in industrial settings.

- Load Handling and Sustainable Operation: Torque measurements confirmed the arm's ability to handle moderate loads, indicating its suitability for industrial applications. The system's sustainable operation aligns with the objectives of the Sustainable Development Goals (SDGs) related to sustainable production and consumption.

- Low Error Rate: The system exhibited a low error rate in object recognition and sorting, minimizing the occurrence of misclassifications and errors. This reliability is crucial for maintaining high-quality standards in industrial processes.

C. Implications and Future Directions

The experimental results have significant implications for various industries, particularly in manufacturing, logistics, and recycling sectors. The vision-assisted robotic arm system offers a sustainable and efficient solution for automating object manipulation and sorting tasks, thereby reducing labor costs, minimizing errors, and improving overall productivity.

Moving forward, further research and development efforts can focus on:

- Optimizing Algorithm Performance: Continuously refining and optimizing computer vision algorithms to enhance object recognition accuracy and speed.

- Scaling Up for Industrial Deployment: Expanding the system's capabilities to handle larger volumes of objects and integrating it into industrial production lines for real-world deployment.

- Exploring New Applications: Identifying new applications and use cases for the vision-assisted robotic arm system in diverse industries, such as agriculture, healthcare, and warehouse automation.

V. INSIGHTS AND OBSERVATIONS

The development and implementation of the vision-assisted robotic arm system have provided valuable insights into the potential of robotics and computer vision technologies for sustainable industrial practices. This section discusses key insights and observations gleaned from the project, highlighting its significance and implications.

A. Scalable and Adaptable Solution

The integration of robotics and computer vision technologies has resulted in a scalable and adaptable solution for object sorting and manipulation tasks. The vision-assisted robotic arm system can be easily customized and deployed across various industries, offering a versatile platform for optimizing production processes and enhancing efficiency.

B. Alignment with Sustainable Development Goals (SDGs)

The project aligns closely with the objectives of Sustainable Development Goals (SDGs), particularly those related to sustainable production and consumption, innovation, and industry growth. By automating object sorting and manipulation tasks, the system contributes to reducing waste, conserving resources, and promoting sustainable practices in industrial settings.

C. Potential for Innovation and Industry Growth

The vision-assisted robotic arm system represents a promising avenue for innovation and industry growth. Its integration of cutting-edge technologies opens up new possibilities for streamlining operations, improving productivity, and driving economic development. Moreover, the system's adaptability allows for continuous innovation and refinement, ensuring its relevance in a rapidly evolving industrial landscape.

D. Future Research and Development

The vision-assisted robotic arm system offers a promising future. We anticipate integrating advanced sensors, AI, and machine learning to enhance object recognition and sorting precision. Multi-object sorting, industry-specific adaptations, and collaboration with human operators are on the horizon. Improvements in energy efficiency, safety features, and remote control capabilities are priorities. Market expansion, user interface refinements, and international collaborations will further its reach and impact. This system remains poised for dynamic growth, adapting to evolving technologies and market demands across various sectors.

The fusion of vision technology and Arduino-driven robotics holds immense promise for the future. In the forthcoming years, we anticipate a deeper integration of these technologies into Industry 4.0, with fully autonomous manufacturing systems. Healthcare will benefit from vision-assisted robotic surgery, remote monitoring, and telemedicine, increasing precision and accessibility. In agriculture, smart farms equipped with vision systems will optimize crop management, and autonomous vehicles will revolutionize transportation. Personal robotics, including smart appliances and assistive devices, will become commonplace. Ethical considerations and robust regulations will be essential, and interdisciplinary collaborations will drive progress. Continuous research and innovation will remain pivotal, ensuring that the potential of these technologies is harnessed to shape a more intelligent and adaptive world in harmony with human needs.

Conclusion

In conclusion, the fusion of vision technology and Arduino-driven robotics has paved the way for intelligent and adaptable automation solutions. Vision-assisted robot arms hold the promise of transforming industries and changing how we interact with and harness robotics in our daily lives. In the manufacturing sector, these arms are poised to optimize production lines, streamline assembly processes, and deliver consistent quality while reducing the risk of errors. The potential for cost savings and enhanced productivity is considerable.

Copyright

Copyright © 2024 Mr. Khan Mohammad Tahir, Mr. Mohammed Yusuf, Mr. Chaudhary Shahbaz, Mr. Bakhed Avesh, Prof. Monika Pathare. This is an open access article distributed under the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

Paper Id : IJRASET59740

Publish Date : 2024-04-02

ISSN : 2321-9653

Publisher Name : IJRASET
