Ijraset Journal For Research in Applied Science and Engineering Technology

Web Application for Emotion-Based Music Player using Streamlit

Authors: Dr. J Naga Padmaja, Amula Vijay Kanth, P Vamshidhar Reddy, B Abhinay Rao

DOI Link: https://doi.org/10.22214/ijraset.2023.49019

Abstract

Music is a language that does not speak in particular words; it speaks in emotions. Music has certain qualities or properties that affect our feelings. Numerous music recommendation systems recommend music based on the user's previous search history, previous listening history, or ratings. Our project develops a recommendation system that recommends music based on the user's current mood. We argue that this approach is more convenient than existing ones because it spares the user the work of first searching for songs and then creating a specific playlist. A user's emotion or mood can be identified from his or her facial expressions, which can be captured from the live feed of the system's camera. Machine learning offers various methods and techniques for detecting human emotions, and this system uses the Support Vector Machine (SVM) algorithm as a classifier that assigns the user's expression to emotional states such as happy or sad. The displacements of facial features in the live feed are used as input to the SVM classifier, and based on its output, the user is redirected to a corresponding playlist. The web application is built with the Streamlit framework, which makes it possible to build web apps for data science and machine learning in less time.

I. INTRODUCTION

Numerous recent studies show that people respond strongly to music and that music has a considerable effect on activity in the human brain. Music is the art form known to have the closest connection with a person's emotions, and it can be considered the universal language of humanity. It has the ability to bring positivity and refreshment to people's lives. Researchers have found that music plays a major part in regulating arousal and mood. Two of the most important functions of music are its unique capability to lift one's mood and to make listeners more self-aware. Musical preferences have been shown to be strongly associated with personality traits and moods.

Facial expressions are the best way for people to infer the emotion, sentiment, or thoughts that another person is trying to express, and people frequently use their facial expressions to communicate their feelings. It has long been recognized that music can alter a person's mood. By capturing and recognizing the emotion a person is expressing and playing songs that correspond to that mood, the user's mood can be gradually calmed and an overall positive effect can be produced. Music is a great way to express emotions and moods. For example, people like to listen to happy songs when they are feeling good, a comforting song can help us relax when we are stressed, and people tend to listen to sad songs when they are feeling down.

Hence, in this project, we develop a system that captures the user's emotion in real time and recommends related songs based on that emotion. Songs are grouped into categories such as Happy, Sad, and Neutral, and the user is then redirected to the playlist that matches the captured emotion. In this way, the user receives song recommendations based on his or her current mood, and the recommendations change dynamically as the mood changes.

II. RELATED WORK

A. Literature Survey

Emotion recognition is an important field of research for enhancing human-machine interaction. The complexity of emotions makes the task of capturing them more challenging. Earlier works typically capture emotion through a unimodal mechanism, such as facial expressions alone or voice input alone. The concept of multimodal emotion recognition has recently gained traction and has improved machines' ability to recognize emotions accurately.

Below are some of the research papers reviewed in our literature survey on facial emotion recognition.

1. Jaiswal, A. Krishnama Raju and S. Deb, "Facial Emotion Detection Using Deep Learning," 2020 International Conference for Emerging Technology (INCET), 2020.

To detect emotions, the authors use three steps: face detection, feature extraction, and emotion classification. The proposed method reduces computation time while improving validation accuracy and reducing loss.

The paper also evaluates performance by comparing this model with an earlier model that was already in use. The authors tested their neural network architectures on the FERC-2013 and JAFFE databases, which cover seven basic emotions: sadness, fear, happiness, anger, neutrality, surprise, and disgust. A robust CNN is used for image recognition, built with the free deep learning (DL) library Keras, provided by Google, for facial emotion detection. The proposed network was trained on two independent datasets, and its validation accuracy and loss were assessed. A CNN-based emotion model detects the seven emotions from facial expressions in images taken from a specific dataset. The authors used Keras to modify a few steps in the CNN and adjusted the CNN architecture to improve accuracy.

2. Kharat, G. U., and S. V. Dudul. "Human emotion recognition system using optimally designed SVM with different facial feature extraction techniques." WSEAS Transactions on Computers 7.6 (2008).

The goal of this research is to create humanoid robots that can hold intelligent conversations with people. The first step toward this goal is for a computer to recognize human emotions using a neural network. The paper recognizes the six primary emotions (anger, disgust, fear, happiness, sadness, and surprise) along with the neutral state. The characteristics that matter for recognizing emotion from facial expressions are extracted using several feature extraction approaches, including the Discrete Cosine Transform (DCT), Fast Fourier Transform (FFT), and Singular Value Decomposition (SVD). The performance of these feature extraction techniques is compared, and a Support Vector Machine (SVM) is used to recognize emotions from the extracted facial features. The authors achieved 100% recognition accuracy on the training dataset and 92.29% on the cross-validation dataset.
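To make the FFT- and SVD-based features in this survey entry more concrete, here is a minimal NumPy sketch that turns a grayscale face crop into a fixed-length vector. It is an illustration under our own assumptions, not Kharat and Dudul's exact procedure: the function names, the number of singular values `k`, and the `block` x `block` window of low-frequency FFT magnitudes are hypothetical choices. DCT features could be computed in the same spirit with `scipy.fft.dctn`.

```python
import numpy as np


def svd_features(gray: np.ndarray, k: int = 10) -> np.ndarray:
    """The k largest singular values of the image, scaled to unit length."""
    # compute_uv=False returns only the singular values, in descending order
    s = np.linalg.svd(gray.astype(np.float64), compute_uv=False)
    feats = np.zeros(k)
    n = min(k, s.size)
    feats[:n] = s[:n]
    return feats / (np.linalg.norm(feats) + 1e-12)  # guard against an all-zero image


def fft_features(gray: np.ndarray, block: int = 4) -> np.ndarray:
    """Magnitudes of a block x block corner of the 2-D FFT."""
    spectrum = np.fft.fft2(gray.astype(np.float64))
    # Without fftshift, the DC term and lowest positive frequencies sit at the top-left
    return np.abs(spectrum[:block, :block]).ravel()


def extract_features(gray: np.ndarray, k: int = 10, block: int = 4) -> np.ndarray:
    """Concatenate SVD and FFT features into one vector of length k + block**2."""
    return np.concatenate([svd_features(gray, k), fft_features(gray, block)])
```

For a 48 x 48 face crop, `extract_features(face)` returns a vector of length 26 (10 singular values plus 16 FFT magnitudes), which could then be passed to an SVM classifier.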

B. Existing Systems

Most current recommendation systems are based on the user's past ratings, listening history, or search history. Because these systems consider only such history when making suggestions, they ignore other factors that can affect the prediction, such as the user's reaction, behaviour, feelings, or emotion. As a result, they can offer only a static user experience. These factors must be taken into account in order to respond to, and improve, the user's present mood. Creating a playlist with these existing recommendation methods also takes time. Taking such behavioural and emotional characteristics into account could give the user a more dynamic, personalised experience, and playlists could be built more quickly and precisely.

III. PROPOSED SYSTEM

Our proposed system is a mood-based music player that performs real-time mood recognition and recommends songs according to the identified mood. This adds a new feature to the classic music player apps that come pre-installed on mobile devices. A significant advantage of mood detection is customer satisfaction. The ability to read a person's emotions from their face is crucial: a camera captures the user's face, and data extracted from this input can be used, among other things, to infer the person's mood. Songs are then selected according to the emotion derived from this input. This reduces the laborious chore of manually sorting songs into different lists and helps create a playlist suited to a particular person's emotional characteristics. In this research work, we implemented the Emotion-Based Music Recommendation System as a machine learning project using the Streamlit framework.

The project is split into 3 phases:

A. Face Detection

The primary goal of face detection is to locate the face in the frame while reducing external noise and other interfering factors. Face detection is one of the applications within the scope of computer vision: it involves developing and training algorithms that correctly locate faces (or, in object detection generally, other objects) in images. This detection can be done in real time using OpenCV (Open Source Computer Vision Library), a well-known computer vision library. A classifier is a computer program that determines whether an image is positive (a face image) or negative (a non-face image). To learn how to classify a new image correctly, a classifier is trained on hundreds of thousands of face and non-face images. OpenCV provides pre-trained classifiers for face detection.

One existing method for real-time video processing with Streamlit is to capture video streams with OpenCV. However, this works only when the Python process has access to the video source, i.e., when the camera is connected to the same host as the app. Because of this limitation, it has always been difficult to deploy such an app to a remote host and use it with video streams from local webcams: cv2.VideoCapture(0) consumes the video stream from a device connected to the machine running the Python process, so when the app is hosted on a remote server, the video source is a camera attached to the server, not the user's local webcam. WebRTC resolves this issue. WebRTC (Web Real-Time Communication) enables low-latency sending and receiving of video, audio, and arbitrary data streams across the network between web servers and clients, including web browsers. Major browsers such as Chrome, Firefox, and Safari support it, and its specifications are open and standardised. WebRTC is widely used in browser-based real-time video chat applications such as Google Meet. By enabling video, audio, and arbitrary data streams to flow between the frontend (browser JavaScript) and the backend (server-side Python), WebRTC extends the capabilities of Streamlit.

B. Emotion Classification

Emotion classification, also known as emotion categorization, is the process of identifying human emotions from facial and verbal expressions and assigning them to corresponding categories. If you were shown a picture of a person and asked to guess how they were feeling, chances are you would have a pretty good idea. Machine learning makes it possible for a computer to do the same with high accuracy, and it provides a variety of tools and techniques that can classify emotions efficiently. Facial landmarks, also known as nodal points, are key points on images of human faces, defined by their coordinates in the image. These points are used to locate and represent prominent facial features such as the eyes, brows, nose, mouth, and jawline. For emotion classification, machine learning offers a wide range of algorithms, including artificial neural networks and convolutional neural networks. This project uses the Support Vector Machine method to identify emotions.
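The abstract describes facial-feature displacements as the input to the SVM. One way to compute such features, sketched here under stated assumptions, is to compare the landmark positions in the current frame with those in a neutral reference frame of the same face, after removing translation and scale so that head position and distance from the camera do not dominate. The landmark detector (for example a 68-point model) is assumed and not shown, and `displacement_features` is a hypothetical helper, not the authors' code.

```python
import numpy as np


def _normalise(points: np.ndarray) -> np.ndarray:
    """Remove translation and scale from an (N, 2) landmark array."""
    centred = points - points.mean(axis=0)
    scale = np.sqrt((centred ** 2).sum(axis=1).mean())  # RMS distance to the centroid
    return centred / scale


def displacement_features(neutral: np.ndarray, current: np.ndarray) -> np.ndarray:
    """Flattened displacement of each landmark from its neutral position.

    Both arrays hold (x, y) coordinates of the same N landmarks in the same order,
    e.g. from a 68-point landmark detector (not shown). Returns a vector of length 2N.
    Rotation is not removed in this sketch.
    """
    if neutral.shape != current.shape or neutral.ndim != 2 or neutral.shape[1] != 2:
        raise ValueError("expected two (N, 2) arrays of the same shape")
    return (_normalise(current) - _normalise(neutral)).ravel()
```

Raising a mouth-corner landmark in `current`, for instance, yields non-zero entries at that landmark's position in the vector, while a face that has merely moved or come closer to the camera yields (approximately) zeros.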

- Support Vector Machine (SVM)

The Support Vector Machine (SVM) is a common supervised learning approach for classification and regression problems, although it is mostly used for classification. The SVM algorithm seeks the optimal line or decision boundary that separates the classes in n-dimensional space, so that new data points can be classified conveniently in the future. This optimal decision boundary is called a hyperplane. SVM selects the extreme points or vectors that help define the hyperplane; these extreme cases are called support vectors, which is why the algorithm is known as the Support Vector Machine.
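A minimal sketch of a linear SVM using scikit-learn's `SVC` (the Results section states that a linear kernel was used; the choice of library is our assumption). The two-dimensional points and the labels `"sad"` and `"happy"` are made-up toy data standing in for facial-feature vectors, chosen so that the fitted hyperplane and its support vectors are easy to inspect.

```python
import numpy as np
from sklearn.svm import SVC

# Made-up 2-D feature vectors: two well-separated clusters
X = np.array([[0.0, 0.0], [0.5, 0.5], [1.0, 0.0],   # "sad" examples
              [3.0, 3.0], [3.5, 2.5], [4.0, 3.5]])  # "happy" examples
y = np.array(["sad", "sad", "sad", "happy", "happy", "happy"])

# Linear kernel: the decision boundary is the hyperplane w . x + b = 0
clf = SVC(kernel="linear", C=1.0)
clf.fit(X, y)

w, b = clf.coef_[0], clf.intercept_[0]
print("w =", w, "b =", b)

# Only the extreme points closest to the boundary are kept as support vectors
print("support vectors:\n", clf.support_vectors_)

# New points are classified by which side of the hyperplane they fall on
print(clf.predict([[0.2, 0.3], [3.8, 3.0]]))  # -> ['sad' 'happy']
```

With real data, `X` would hold one feature vector per face and `y` one emotion label per vector; `SVC` handles more than two classes internally with a one-vs-one scheme.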

C. Music Recommendation

The third and final stage of our approach is music recommendation. We create playlists on third-party apps such as Spotify for categories such as Happy, Sad, and Neutral. Based on the output of the SVM classifier, the user is redirected to the third-party application containing the corresponding playlist. The webbrowser module in Python is used for this redirection; it is a convenient web browser controller that provides a high-level interface for displaying web-based documents. In this way, the fundamental goal of recommending music based on the user's mood is achieved.
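The redirection step can be sketched with Python's standard-library `webbrowser` module. The playlist URLs below are hypothetical placeholders, and the lowercase label names and the neutral fallback are assumptions rather than details from the paper.

```python
import webbrowser

# Hypothetical playlist URLs; substitute the real playlist links
PLAYLISTS = {
    "happy": "https://open.spotify.com/playlist/HAPPY_PLAYLIST_ID",
    "sad": "https://open.spotify.com/playlist/SAD_PLAYLIST_ID",
    "neutral": "https://open.spotify.com/playlist/NEUTRAL_PLAYLIST_ID",
}
DEFAULT_EMOTION = "neutral"


def playlist_url(emotion: str) -> str:
    """Map a classifier label to a playlist URL; unknown labels fall back to neutral."""
    return PLAYLISTS.get(emotion.strip().lower(), PLAYLISTS[DEFAULT_EMOTION])


def redirect_to_playlist(emotion: str) -> bool:
    """Open the matching playlist in a new browser tab; True if a browser was launched."""
    return webbrowser.open_new_tab(playlist_url(emotion))
```

For example, `redirect_to_playlist("Happy")` opens the happy playlist in a new tab. Note that `webbrowser` opens a browser on the machine running the Python process; in a Streamlit app deployed on a remote server, that is the server, so a hyperlink rendered in the page (for instance with `st.markdown`) is the more reliable way to send a remote user to the playlist.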

- Model Deployment Using Streamlit Framework

Model deployment makes research work available to the rest of the world, and deploying an application on the internet improves its accessibility: once deployed, the app can be used from any computer or mobile device with a web browser and an internet connection. We needed to make the SVM model developed for the Emotion-Based Music Recommendation System publicly available for real-time predictions, so we used Streamlit to deploy it on the web. Streamlit is a lightweight option for web deployment.

Streamlit is a free and open-source Python framework for quickly building web applications for machine learning and data science projects. With Streamlit, we can design and launch web applications quickly. Streamlit was created as a simpler alternative to more complex existing frameworks: it can be used to deploy machine learning models or any other Python application without writing front-end code, and no prior experience with other web frameworks is required, since Streamlit handles the front end. Streamlit apps are written in the same way as ordinary Python scripts, so instead of spending days or months building a web app, a machine learning or data science app can be built in a matter of hours or even minutes.

IV. RESULTS AND DISCUSSION

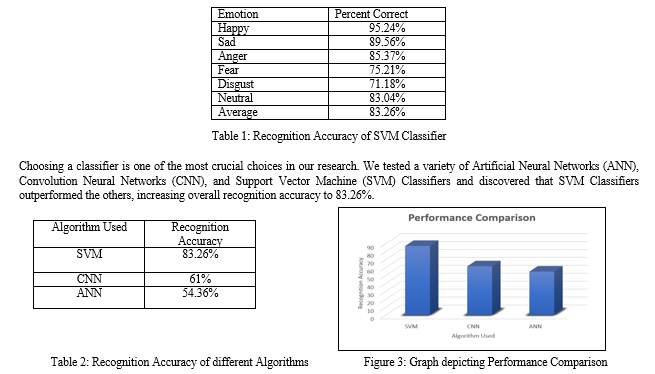

We evaluate our system's classification performance for the six basic emotions. Our method makes no assumptions about the emotions used for training or classification and is applicable to arbitrary, user-defined emotion categories. We used the standard SVM classification algorithm with a linear kernel. Table 2 shows the percentage of correctly classified examples for each basic emotion as well as the overall recognition accuracy. Certain emotions (for example, fear) and certain pairs of emotions (for example, disgust vs. fear) are inherently more difficult to distinguish than others. Nevertheless, the findings reveal motion properties of expressions that appear to be universal across subjects (for example, raised mouth corners for 'happy', corresponding to a smile).
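The metrics described for Table 2 (per-emotion and overall percentages of correctly classified examples) can be computed as in the following sketch. The `per_class_accuracy` helper is hypothetical, and the labels in the example are made up for illustration; they are not the paper's results.

```python
from collections import Counter


def per_class_accuracy(y_true, y_pred):
    """Percentage of correctly classified examples per emotion, plus overall accuracy."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("y_true and y_pred must be non-empty and of equal length")
    totals = Counter(y_true)
    correct = Counter(t for t, p in zip(y_true, y_pred) if t == p)
    per_class = {label: 100.0 * correct[label] / totals[label] for label in totals}
    overall = 100.0 * sum(correct.values()) / len(y_true)
    return per_class, overall


if __name__ == "__main__":
    # Made-up labels: one fear example is confused with disgust and vice versa
    truth = ["happy", "happy", "fear", "fear", "disgust"]
    preds = ["happy", "happy", "disgust", "fear", "fear"]
    per_class, overall = per_class_accuracy(truth, preds)
    print(per_class)  # -> {'happy': 100.0, 'fear': 50.0, 'disgust': 0.0}
    print(overall)    # -> 60.0
```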

A. Output Screens

Below are some screenshots of the Emotion-Based Music Player. Figure 4 shows the home page, where the user has two options for retrieving a playlist: entering the emotion manually or capturing his or her face with the camera. Figures 5 and 6 depict the user's emotion being detected, and Figure 7 shows the playlist recommended to the user.

Conclusion

The algorithm proposed in this research aims to automate the creation of playlists based on facial expressions. According to the experimental results, the proposed algorithm succeeded in automating playlist construction from facial expressions, which reduced the time and effort required to do this manually. Our results indicate that the properties of a Support Vector Machine learning system fit well with the constraints that a real-time environment places on recognition accuracy and speed. We assessed our system's accuracy in a variety of interaction scenarios and found that the results of controlled experiments compare favourably with previous approaches to expression recognition. The overall objective of the system is to improve the user's experience and, ultimately, to relieve some stress or lighten the user's mood. The user does not have to spend time searching for songs, because the tracks that best match the user's mood are selected automatically. The system is prone to producing unpredictable results in difficult lighting conditions, so removing this flaw is planned as future work. People frequently use music to regulate their moods, specifically to change a bad mood, increase energy, or reduce tension. Our system's primary goal is to change or maintain the user's emotional state and boost the user's mood by exploring various music tracks. Additionally, listening to the right music at the right time may improve mental health, which reflects the strong relationship between human emotions and music. Users will find it easier to create and manage playlists with our web application for emotion-based music recommendation.

References

[1] Singh, Gurlove, and Amit Kumar Goel. "Face detection and recognition system using digital image processing." 2020 2nd International Conference on Innovative Mechanisms for Industry Applications (ICIMIA). IEEE, 2020.

[2] Kumar, Ashu, Amandeep Kaur, and Munish Kumar. "Face detection techniques: a review." Artificial Intelligence Review 52.2 (2019): 927-948.

[3] Jaiswal, A. Krishnama Raju and S. Deb, "Facial Emotion Detection Using Deep Learning," 2020 International Conference for Emerging Technology (INCET), 2020.

[4] Kharat, G. U., and S. V. Dudul. "Human emotion recognition system using optimally designed SVM with different facial feature extraction techniques." WSEAS Transactions on Computers 7.6 (2008): 650-659.

[5] Dai, Hong. "Research on SVM improved algorithm for large data classification." 2018 IEEE 3rd International Conference on Big Data Analysis (ICBDA). IEEE, 2018.

[6] Chaganti, Sai Yeshwanth, et al. "Image Classification using SVM and CNN." 2020 International Conference on Computer Science, Engineering and Applications (ICCSEA). IEEE, 2020.

[7] Khorasani, Mohammad, Mohamed Abdou, and Javier Hernández Fernández. "Streamlit Use Cases." Web Application Development with Streamlit. Apress, Berkeley, CA, 2022. 309-361.

[8] Raghavendra, Sujay. "Introduction to Streamlit." Beginner's Guide to Streamlit with Python. Apress, Berkeley, CA, 2023. 1-15.

[9] Elleuch, Wajdi. "Models for multimedia conference between browsers based on WebRTC." 2013 IEEE 9th International Conference on Wireless and Mobile Computing, Networking and Communications (WiMob). IEEE, 2013.

[10] J. Naga Padmaja, R. Rajeswara Rao, "Exploring Spectral Features for Emotion Recognition Using GMM", Vol. 14, ICETCSE 2016 Special Issue, International Journal of Computer Science and Information Security (IJCSIS), ISSN 1947-5500, pp. 46-52.

[11] J. Naga Padmaja, R. Rajeswara Rao, "Exploring Emotion Specific Features for Emotion Recognition System Using PCA Approach", International Conference on Intelligent Computing and Control Systems (ICICCS), 978-1-5386-2745, IEEE 2017.

[12] Naga Padmaja Jagini et al. (Feb 2022), "Video-based emotion sensing and recognition using Convolutional Neural Network based Kinetic Gas Molecule Optimization", IMEKO, ISSN: 2221-870X, Volume 11, Number 2, pp. 1-7, June 2022.

Copyright

Copyright © 2023 Dr. J Naga Padmaja, Amula Vijay Kanth, P Vamshidhar Reddy, B Abhinay Rao. This is an open access article distributed under the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

Paper Id : IJRASET49019

Publish Date : 2023-02-06

ISSN : 2321-9653

Publisher Name : IJRASET
