Ijraset Journal For Research in Applied Science and Engineering Technology


Wheelchair Navigation Enhanced by Machine Learning Eye-Gaze Control

Authors: Vishav Mehra

DOI Link: https://doi.org/10.22214/ijraset.2024.63082


Abstract

This study develops an eye-controlled wheelchair system for individuals with motor disabilities, with the goal of increasing their mobility and autonomy. The approach combines systematic data collection and pre-processing, including real-time face detection and feature extraction, with several machine learning algorithms (KNN, Decision Trees, Random Forests, and SVM) trained to predict user commands. In the performance evaluation, the system achieved high accuracy and precision, with KNN and SVM as the best-performing models. The system shows significant potential for improving accessibility and independence and represents a step forward in assistive technology. By integrating data processing, machine learning, and real-time prediction, it provides smooth and intuitive navigation that could substantially improve the quality of life of people with mobility disabilities. The research also highlights the role of interdisciplinary collaboration and technological innovation in building solutions that meet the diverse needs of people with disabilities, supporting their inclusion and empowerment in society.


I. INTRODUCTION

Advances in assistive technology have greatly improved the well-being of people with physical disabilities, enabling them to participate in their surroundings with greater autonomy. One promising development is the combination of deep learning algorithms with eye-gaze control. This paper presents the concept of an Eye-Gaze Controlled Wheelchair Utilizing Deep Learning and emphasizes its importance for the mobility and independence of people with motor limitations. The technology relies on deep learning, a branch of artificial intelligence (AI) that enables machines to learn from data and make decisions[1]–[4]. Researchers have built wheelchair interfaces that use deep learning algorithms and eye-tracking technology to analyze users' eye movements and steer the wheelchair in real time. This approach overcomes the constraints of conventional input devices such as joysticks or switches and offers a more user-friendly and accessible means of control.

Eye-gaze control has great potential for people with severe motor limitations, such as those caused by spinal cord injuries, cerebral palsy, or amyotrophic lateral sclerosis (ALS). For many of them, conventional wheelchair controls are burdensome or unusable, which limits their ability to move around independently. By exploiting the precision and reliability of eye movements, this technology lets users steer their wheelchairs simply and accurately.

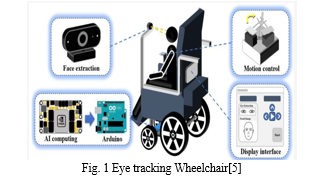

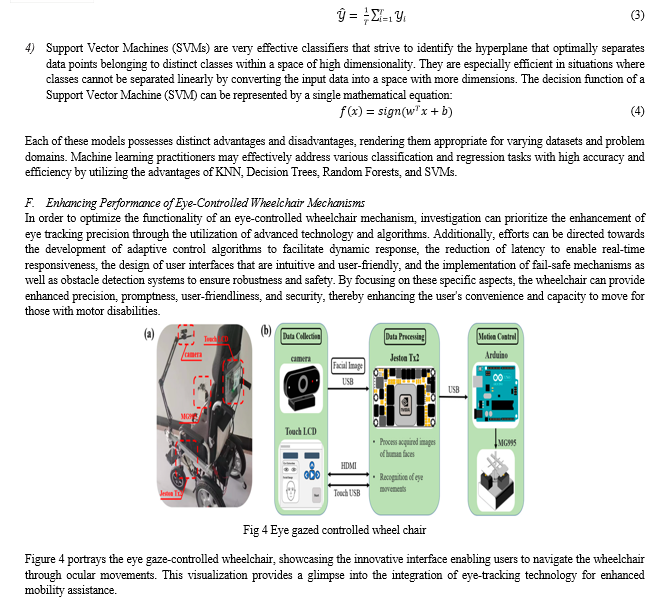

Figure 1 shows the eye-tracking wheelchair and how eye-tracking technology is incorporated for navigation, illustrating how the assistive system supports mobility through eye movements.

An important benefit of the Eye-Gaze Controlled Wheelchair is its capacity to adapt to the preferences and abilities of each user. Its deep learning algorithms learn from users' eye movements and tailor the wheelchair's response to their individual patterns and behaviors[6]–[9]. This tailored approach improves user comfort and also makes navigation more efficient and safer by reducing the likelihood of collisions or errors. Deep learning also allows the wheelchair to predict users' intentions, making interactions more efficient and natural. Through training on a wide range of datasets, the system learns to identify subtle variations in eye movements that correspond to distinct commands[10]–[13]. This enables smooth transitions between navigation modes, including forward, backward, turning, and stopping. These predictive capabilities reduce latency and reaction time, giving a prompt and seamless user experience. Beyond its practical advantages, the Eye-Gaze Controlled Wheelchair represents a shift in assistive technology toward equality and empowerment. By prioritizing user autonomy and agency, the technology enhances social integration and participation[14], allowing individuals with motor impairments to move through their surroundings with dignity and confidence. In addition, the open-source nature of the system promotes collaboration and creativity among researchers, facilitating continued progress in accessible mobility solutions.
The Eye-Gaze Controlled Wheelchair is a groundbreaking combination of advanced artificial intelligence and assistive technology that changes how individuals with motor impairments engage with their environment. Its intuitive interface, adaptability, and predictive capabilities let users move through their surroundings with greater independence and freedom. Continued research in this domain offers considerable opportunities to improve the quality of life of people with impairments[15]–[18].

II. LITERATURE REVIEW

Araujo et al. [19] explore gaze-controlled interfaces for steering wheelchairs or robots, with the aim of increasing user independence. Virtual reality experiments and field studies with wheelchair users revealed a preference for waypoint navigation. However, users felt discomfort when looking down to steer, which suggests that the simulation-based design of such interfaces needs improvement.

Sunny et al. [20] present an eye-tracking control system for a wheelchair-mounted robotic arm that assists individuals with disabilities in activities of daily living. In a user study the system achieved a 100% task success rate, and the method received high acceptance and usability ratings, suggesting its potential to increase the independence of users with motor impairments.

Luo et al. [21] introduce a wheelchair control system based on eye tracking and blink detection. The system extracts pupil images to determine the eye-movement trajectory and gaze direction, and convolutional neural networks classify the eye as open or closed so that blinks can be detected. Experiments on a modified wheelchair demonstrate the system's reliability and effectiveness.

Qiu et al. [3] propose eye gaze-based aiming for pilots wearing helmet-mounted displays (HMDs). To overcome the limitations of aiming by helmet orientation alone, they use a deep learning method for robust eye feature extraction. Prototype experiments demonstrate real-time target aiming with an average error below 2 degrees, which is competitive with existing research.

Samani et al. [22] note that eye-tracking technology has become popular among people with severe physical disabilities because it lets them control devices with their eyes. They use deep learning to develop a low-cost, real-time eye-tracking interface and apply it to a smart wheelchair and a robotic arm, showing how eye tracking can improve accessibility for disabled individuals.

III. RESEARCH METHODOLOGY

Improving the efficiency of the eye-controlled wheelchair involves a comprehensive approach that spans data collection, pre-processing, feature extraction, machine learning, real-time prediction and display, and overall optimization. The system predicts and displays users' commands by systematically collecting eye-movement data, using OpenCV and dlib for real-time processing, extracting Histogram of Oriented Gradients (HOG) features, and classifying them with KNN, Decision Tree, Random Forest, and SVM models. This approach aims at accuracy, responsiveness, ease of use, and safety, ultimately improving the mobility and self-reliance of people with motor impairments.

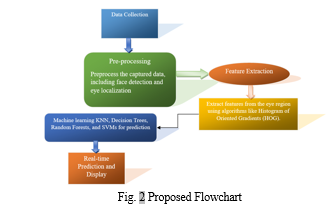

Figure 2 shows the proposed flowchart of the system's operation, detailing each stage from initial data collection to final output generation.

A. Data Collection

Thorough data collection is crucial to the precision and effectiveness of an eye-controlled wheelchair. A total of 100 images were captured as frames and stored in folders, one per direction. A data-collection script guides the user through the eye movement required for each direction of interest; each movement triggers the capture of the relevant images or video frames, which are saved in the corresponding directory. Organizing the data by direction keeps it systematic, makes retrieval and analysis efficient, and simplifies the subsequent analysis and training phases, so that the wheelchair can interpret users' eye movements and convert them into the corresponding navigation commands. This data-collection procedure forms the basis of a robust and dependable eye-controlled wheelchair system, one that aims to give individuals with motor disabilities greater accessibility, independence, and freedom of movement.
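The paper does not publish its data-collection script. The following is a minimal sketch, assuming one sub-folder per direction under a root folder; the folder names, the file-naming scheme, and the helpers `next_frame_path` and `index_dataset` are hypothetical. It shows how storing frames by direction turns folder names directly into class labels for training.

```python
from pathlib import Path

# Hypothetical layout (the paper gives no paths or file names):
#   dataset/left/0000.png, dataset/right/0000.png, dataset/forward/0000.png, ...
IMAGE_SUFFIXES = {".png", ".jpg", ".jpeg"}


def next_frame_path(root, direction, index):
    """Return the path for frame `index` of `direction`, creating the folder if needed."""
    folder = Path(root) / direction
    folder.mkdir(parents=True, exist_ok=True)
    return folder / f"{index:04d}.png"


def index_dataset(root):
    """Return (paths, labels, class_names), using each direction folder as one class."""
    root = Path(root)
    # Sorting folder names keeps the label <-> direction mapping deterministic.
    class_names = sorted(p.name for p in root.iterdir() if p.is_dir())
    paths, labels = [], []
    for label, name in enumerate(class_names):
        for image_path in sorted((root / name).iterdir()):
            # Skip anything that is not an image (notes, hidden files, ...).
            if image_path.suffix.lower() in IMAGE_SUFFIXES:
                paths.append(image_path)
                labels.append(label)
    return paths, labels, class_names
```

During collection, each captured frame could be written with OpenCV, for example `cv2.imwrite(str(next_frame_path("dataset", "left", i)), frame)`. Sorting the folder names keeps the label numbering identical between training and live prediction.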

B. Data Pre-processing

Data pre-processing relies on OpenCV, which acquires real-time video input from the camera and displays it in the application window. The dlib package provides real-time face detection, using a pre-trained model to identify and locate faces in the video stream. A custom function named Eye Sampler samples and pre-processes the eye region selected by the user (left or right) and resizes each eye sample to a fixed size so that feature extraction is consistent. This pre-processing stage supports precise eye tracking and reliable operation of the wheelchair control system.
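The internals of Eye Sampler are not given in the paper. The sketch below assumes dlib's 68-point facial landmark model (the widely distributed `shape_predictor_68_face_landmarks.dat` file), in which indices 36–41 and 42–47 outline the two eyes; the left/right naming follows the subject-perspective convention of the imutils package. The 32×64 output size, the margin, and the function names are illustrative assumptions. Resizing uses nearest-neighbour sampling in NumPy so the example runs without OpenCV, where `cv2.resize` would normally be used.

```python
import numpy as np

# dlib's 68-point landmark model (iBUG 300-W layout): indices 36-41 and 42-47
# outline the two eyes. Naming follows the imutils convention (subject's view).
EYE_LANDMARKS = {"right": range(36, 42), "left": range(42, 48)}


def eye_sampler(gray, landmarks, side="left", size=(32, 64), margin=4):
    """Crop one eye from a grayscale frame and resize it to size=(height, width).

    `landmarks` holds the 68 (x, y) points of one detected face.
    """
    pts = np.array([landmarks[i] for i in EYE_LANDMARKS[side]])
    x0, y0 = pts.min(axis=0) - margin
    x1, y1 = pts.max(axis=0) + margin + 1
    h, w = gray.shape[:2]
    # Clamp the box to the frame so an eye near the border does not break the crop.
    x0, y0 = max(int(x0), 0), max(int(y0), 0)
    x1, y1 = min(int(x1), w), min(int(y1), h)
    crop = gray[y0:y1, x0:x1]
    # Nearest-neighbour resize in plain NumPy (cv2.resize is the usual choice).
    rows = np.arange(size[0]) * crop.shape[0] // size[0]
    cols = np.arange(size[1]) * crop.shape[1] // size[1]
    return crop[rows[:, None], cols]


def landmarks_from_dlib(gray, detector, predictor):
    """Return one list of 68 (x, y) points per face found by dlib.

    Build the models once, e.g.
    detector = dlib.get_frontal_face_detector()
    predictor = dlib.shape_predictor("shape_predictor_68_face_landmarks.dat")
    """
    faces = []
    for rect in detector(gray, 0):  # 0 = no upsampling, faster per frame
        shape = predictor(gray, rect)
        faces.append([(shape.part(i).x, shape.part(i).y) for i in range(68)])
    return faces
```

Passing the detector and predictor in, rather than constructing them inside the function, avoids reloading the landmark model on every video frame.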

C. Feature Extraction

The eye-controlled wheelchair uses the Histogram of Oriented Gradients (HOG) algorithm for feature extraction. HOG is well known for capturing the spatial distribution of local gradient orientations in an image, and it is used here to extract the essential features of the sampled eye images. The feature representation is tuned through parameters such as the number of orientations, pixels per cell, and cells per block, so that the distinctive eye-movement patterns needed for navigation can be identified. dlib's facial landmark detection is used together with HOG to locate the eyes within the detected face regions, yielding accurate eye coordinates that serve as reference points for subsequent processing.

By locating the eyes precisely within the face, the system can interpret and respond to users' eye movements more reliably. This feature-extraction approach contributes to the robustness and precision of the eye-controlled wheelchair system, improving user satisfaction and mobility.

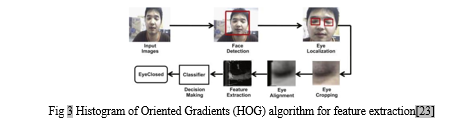

Figure 3 illustrates the Histogram of Oriented Gradients (HOG) algorithm, a technique used for feature extraction. This histogram represents the distribution of gradient orientations within localized regions of an image, facilitating the identification of distinctive features for subsequent processing or analysis.
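To make the HOG step concrete, here is a simplified HOG descriptor written in NumPy. It is a sketch, not the paper's implementation: the paper does not state which library or parameter values it used, and this version uses hard orientation binning and plain L2 block normalization, so its output will not match full implementations such as scikit-image's `skimage.feature.hog`, whose `orientations`, `pixels_per_cell`, and `cells_per_block` parameters correspond to the ones named in the text. The values 9, 8, and 2 (those of the original Dalal and Triggs detector) are assumed here.

```python
import numpy as np


def hog_features(img, orientations=9, pixels_per_cell=8, cells_per_block=2, eps=1e-6):
    """Simplified HOG descriptor for a 2-D grayscale patch.

    Unsigned gradients (0-180 degrees), hard binning weighted by magnitude,
    and L2 normalisation over overlapping blocks of cells.
    """
    img = img.astype(np.float64)
    # Centred [-1, 0, 1] differences; border pixels keep a zero gradient.
    gx = np.zeros_like(img)
    gy = np.zeros_like(img)
    gx[:, 1:-1] = img[:, 2:] - img[:, :-2]
    gy[1:-1, :] = img[2:, :] - img[:-2, :]
    magnitude = np.hypot(gx, gy)
    angle = np.rad2deg(np.arctan2(gy, gx)) % 180.0  # fold into [0, 180)

    # Per-cell orientation histograms, weighted by gradient magnitude.
    n_cy = img.shape[0] // pixels_per_cell
    n_cx = img.shape[1] // pixels_per_cell
    bins = np.minimum((angle / (180.0 / orientations)).astype(int), orientations - 1)
    hist = np.zeros((n_cy, n_cx, orientations))
    for cy in range(n_cy):
        for cx in range(n_cx):
            ys = slice(cy * pixels_per_cell, (cy + 1) * pixels_per_cell)
            xs = slice(cx * pixels_per_cell, (cx + 1) * pixels_per_cell)
            hist[cy, cx] = np.bincount(
                bins[ys, xs].ravel(),
                weights=magnitude[ys, xs].ravel(),
                minlength=orientations,
            )

    # Slide a block of cells one cell at a time and L2-normalise each block.
    b = cells_per_block
    blocks = []
    for by in range(n_cy - b + 1):
        for bx in range(n_cx - b + 1):
            v = hist[by:by + b, bx:bx + b].ravel()
            blocks.append(v / np.sqrt(np.sum(v ** 2) + eps ** 2))
    return np.concatenate(blocks)
```

For a 32×64 eye patch, these settings give 4×8 cells, 3×7 overlapping blocks of 2×2 cells, and a 3 × 7 × 36 = 756-dimensional feature vector.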

D. Real-time Prediction and Display

During real-time prediction and display, the system processes the video frames from the camera feed one after another. In each frame, face detection and dlib's landmark detection locate the eyes. The Eye Sampler function extracts and pre-processes the eye region, and HOG features are then computed for the sampled eye. These features are fed to the pre-trained classifiers (KNN, Decision Tree, Random Forest, and SVM), which predict the class ID associated with the detected eye, the classes being the direction folders created during data collection. The application window displays the live video feed, and the predicted label is overlaid on the video stream to give immediate feedback. Because the system continuously analyzes the feed and generates predictions from the detected eye features, users can visually monitor how well recognition is working. Combining real-time processing, prediction, and user-interface updates gives users of the eye-controlled wheelchair smooth, interactive control and feedback.
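The per-frame loop described above can be sketched as follows. The stage functions are passed in as callables, so any landmark detector, eye-sampling function, and feature extractor can be plugged in; `predict_frame`, `run_live`, and the small NumPy `KNNClassifier` (Euclidean distance, majority vote, k = 3) are hypothetical stand-ins, since the paper does not give its code or the value of k. The OpenCV calls in `run_live` (`VideoCapture`, `cvtColor`, `putText`, `imshow`, `waitKey`) are standard; OpenCV is imported inside that function so the rest runs without it.

```python
import numpy as np


class KNNClassifier:
    """Minimal k-nearest-neighbours classifier (Euclidean distance, majority vote)."""

    def __init__(self, k=3):
        self.k = k

    def fit(self, X, y):
        self.X = np.asarray(X, dtype=np.float64)
        self.y = np.asarray(y)  # integer class IDs, e.g. direction-folder indices
        return self

    def predict(self, X):
        X = np.asarray(X, dtype=np.float64)
        # Squared distances between every query and every training sample.
        d2 = ((X[:, None, :] - self.X[None, :, :]) ** 2).sum(axis=2)
        nearest = np.argsort(d2, axis=1)[:, : self.k]
        votes = self.y[nearest]
        # Majority vote per query; ties go to the smallest class ID.
        return np.array([np.bincount(row).argmax() for row in votes])


def predict_frame(gray, find_landmarks, sample_eye, extract, classifier):
    """Run one frame through detect -> sample -> features -> classify.

    Returns the predicted class ID, or None when no face is found.
    """
    faces = find_landmarks(gray)
    if not faces:
        return None
    features = extract(sample_eye(gray, faces[0]))
    return classifier.predict(features[None, :])[0]


def run_live(find_landmarks, sample_eye, extract, classifier, class_names, camera=0):
    """Show the webcam feed with the predicted direction drawn on each frame."""
    import cv2  # lazy import: only the live loop needs OpenCV

    cap = cv2.VideoCapture(camera)
    try:
        while True:
            ok, frame = cap.read()
            if not ok:
                break
            gray = cv2.cvtColor(frame, cv2.COLOR_BGR2GRAY)
            label = predict_frame(gray, find_landmarks, sample_eye, extract, classifier)
            text = "no face" if label is None else class_names[label]
            cv2.putText(frame, text, (10, 30), cv2.FONT_HERSHEY_SIMPLEX, 1.0, (0, 255, 0), 2)
            cv2.imshow("eye control", frame)
            if cv2.waitKey(1) & 0xFF == ord("q"):  # press q to quit
                break
    finally:
        cap.release()
        cv2.destroyAllWindows()
```

Only the first detected face is used, which matches a single wheelchair user in front of the camera; pressing `q` closes the window.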

IV. RESULTS AND DISCUSSION

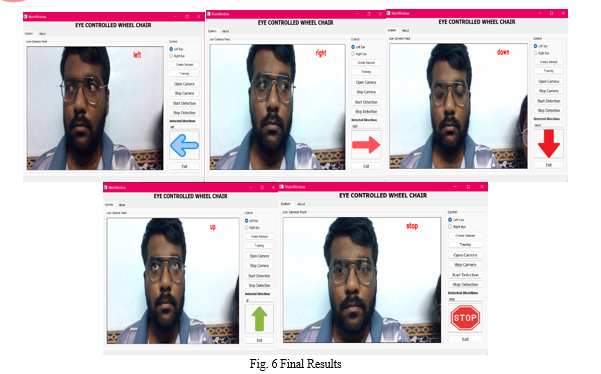

The performance of the eye-controlled wheelchair system is evaluated with accuracy, precision, recall, and F1 score. Accuracy is the ratio of correctly predicted commands to the total number of predictions. Precision is the ratio of correctly predicted positive commands to all commands predicted as positive, reflecting the system's ability to avoid false positives. Recall is the ratio of correctly predicted positive commands to all actual positive commands, indicating the system's ability to identify every relevant command. The F1 score is the harmonic mean of precision and recall and gives a balanced assessment of prediction quality; it is particularly useful when the dataset is imbalanced or when both precision and recall matter.
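The four metrics can be written out directly: accuracy = correct / total, precision = TP / (TP + FP), recall = TP / (TP + FN), and F1 = 2PR / (P + R). Since the paper does not say how precision, recall, and F1 were averaged over the direction classes, this sketch assumes macro averaging (an unweighted mean over classes). Under macro averaging the reported F1 is the mean of the per-class F1 scores, which in general differs from the harmonic mean of the averaged precision and recall. The function name is hypothetical.

```python
def classification_metrics(y_true, y_pred):
    """Accuracy plus macro-averaged precision, recall and F1 for any number of classes."""
    labels = sorted(set(y_true) | set(y_pred))
    pairs = list(zip(y_true, y_pred))
    accuracy = sum(t == p for t, p in pairs) / len(pairs)
    precisions, recalls, f1s = [], [], []
    for c in labels:
        # One-vs-rest counts for class c.
        tp = sum(t == c and p == c for t, p in pairs)
        fp = sum(t != c and p == c for t, p in pairs)
        fn = sum(t == c and p != c for t, p in pairs)
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
        precisions.append(precision)
        recalls.append(recall)
        f1s.append(f1)
    n = len(labels)
    return {
        "accuracy": accuracy,
        "precision": sum(precisions) / n,
        "recall": sum(recalls) / n,
        "f1": sum(f1s) / n,
    }
```

For example, with true labels `["left", "left", "right", "stop"]` and predictions `["left", "right", "right", "stop"]`, the function returns an accuracy of 0.75, macro precision and recall of 5/6 each, and a macro F1 of 7/9 (about 0.778), which is lower than the harmonic mean of the macro precision and recall (5/6).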

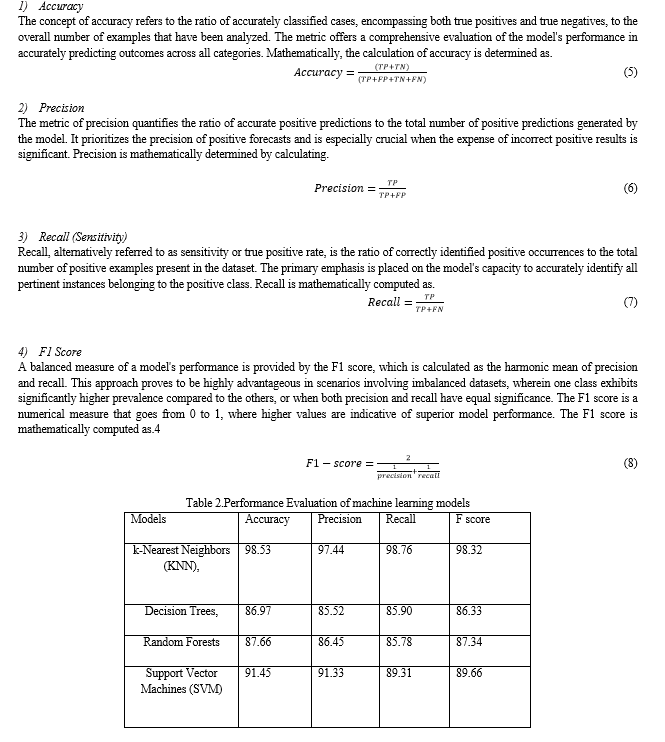

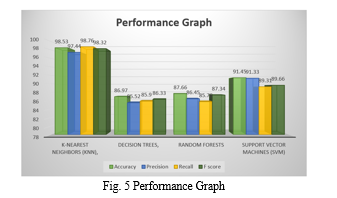

Figure 5 and Table 2 present the evaluation metrics (accuracy, precision, recall, and F1 score) for four machine learning models: k-Nearest Neighbors (KNN), Decision Trees, Random Forests, and Support Vector Machines (SVM). KNN achieves the highest accuracy, 98.53%, meaning it correctly classifies about 98.53% of the cases in the dataset. It also has high precision (97.44%), recall (98.76%), and F1 score (98.32%), indicating that its positive predictions are reliable and that it misses few true positives. SVM ranks second with an accuracy of 91.45%. Its precision (91.33%), recall (89.31%), and F1 score (89.66%) are lower than those of KNN but consistent across criteria, indicating dependable predictions across classes. Decision Trees reach an accuracy of 86.97% and an F1 score of 86.33%, while Random Forests score slightly higher, with an accuracy of 87.66% and an F1 score of 87.34%. KNN and SVM therefore outperform both tree-based models, by about 3.8 to 11.6 percentage points in accuracy. Although the tree-based models perform below KNN and SVM, their results are still solid on all measures, which makes them viable choices depending on the specific requirements and characteristics of the dataset. Overall, KNN and SVM perform best, with KNN clearly ahead in accuracy and F1 score.
Nevertheless, the choice of the most effective model ultimately depends on the particular problem, the characteristics of the dataset, and the desired trade-offs between performance measures.
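A comparison like the one in Figure 5 and Table 2 could be reproduced along these lines with scikit-learn. This is an illustrative sketch under stated assumptions: the paper does not report its train/test split, hyperparameters, or averaging, so the stratified 80/20 split, k = 3 for KNN, 100 trees, the RBF-kernel SVM with feature standardization, and macro averaging are all choices made here, and `compare_models` is a hypothetical name. The demo uses synthetic data from `make_classification` (5 classes, assuming forward, backward, left, right, and stop, with 756 features to mimic a HOG vector) in place of real eye features, so its scores say nothing about the paper's results.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.metrics import accuracy_score, precision_recall_fscore_support
from sklearn.model_selection import train_test_split
from sklearn.neighbors import KNeighborsClassifier
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVC
from sklearn.tree import DecisionTreeClassifier


def compare_models(X, y, seed=0):
    """Fit the four classifier families on one split and report macro metrics."""
    X_train, X_test, y_train, y_test = train_test_split(
        X, y, test_size=0.2, stratify=y, random_state=seed
    )
    models = {
        "KNN": KNeighborsClassifier(n_neighbors=3),
        "Decision Tree": DecisionTreeClassifier(random_state=seed),
        "Random Forest": RandomForestClassifier(n_estimators=100, random_state=seed),
        # SVMs are sensitive to feature scale, so standardise first.
        "SVM": make_pipeline(StandardScaler(), SVC(kernel="rbf")),
    }
    results = {}
    for name, model in models.items():
        y_pred = model.fit(X_train, y_train).predict(X_test)
        precision, recall, f1, _ = precision_recall_fscore_support(
            y_test, y_pred, average="macro", zero_division=0
        )
        results[name] = {
            "accuracy": accuracy_score(y_test, y_pred),
            "precision": precision,
            "recall": recall,
            "f1": f1,
        }
    return results


if __name__ == "__main__":
    # Synthetic stand-in for HOG feature vectors: 5 classes, 756 features.
    X, y = make_classification(
        n_samples=500, n_features=756, n_informative=40, n_classes=5, random_state=0
    )
    for name, scores in compare_models(X, y).items():
        print(name, {k: round(v, 3) for k, v in scores.items()})
```

A single split keeps the sketch short; with only about 100 images, repeated or cross-validated splits would give a steadier estimate of how the four models compare.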

Figure 6 shows the final output for a sample maneuver, in which the system halts movement toward the left, illustrating the navigation capability of the eye-controlled wheelchair system.

Conclusion

In conclusion, the development and evaluation of the eye-controlled wheelchair system mark a significant advance in assistive technology for individuals with motor impairments. The study followed a comprehensive approach spanning data collection, pre-processing, feature extraction, machine learning, and real-time prediction, which led to a robust and efficient system. The evaluation of k-Nearest Neighbors (KNN), Decision Trees, Random Forests, and Support Vector Machines (SVM) revealed differing levels of accuracy, precision, recall, and F1 score. KNN and SVM were the most effective algorithms, while Decision Trees and Random Forests also performed well and remain viable alternatives depending on the dataset's characteristics. By analyzing users' eye movements and translating them into navigation commands, the system can enhance accessibility, mobility, and autonomy for individuals with motor impairments. Future work could focus on improving the system's efficiency, exploring additional machine learning methods, and integrating newer technologies to further improve eye-tracking accuracy and responsiveness. The eye-controlled wheelchair technology shows considerable promise as an innovative way to improve the well-being of individuals with motor disabilities.

References

[1] G. Da Silva Bertolaccini, F. E. Sandnes, F. O. Medola, and T. Gjøvaag, “Effect of Manual Wheelchair Type on Mobility Performance, Cardiorespiratory Responses, and Perceived Exertion,” Rehabil. Res. Pract., vol. 2022, 2022, doi: 10.1155/2022/5554571.

[2] P. P. Choo, P. J. Woi, M. L. C. Bastion, R. Omar, M. Mustapha, and N. Md Din, “Review of Evidence for the Usage of Antioxidants for Eye Aging,” Biomed Res. Int., vol. 2022, 2022, doi: 10.1155/2022/5810373.

[3] Q. Qiu, J. Zhu, C. Gou, and M. Li, “Eye Gaze Estimation Based on Stacked Hourglass Neural Network for Aircraft Helmet Aiming,” Int. J. Aerosp. Eng., vol. 2022, 2022, doi: 10.1155/2022/5818231.

[4] H. AlGhadeer, “Traumatic Lens Dislocation in an Eye with Anterior Megalophthalmos,” Case Rep. Ophthalmol. Med., vol. 2022, pp. 1–2, 2022, doi: 10.1155/2022/6366949.

[5] J. Xu, Z. Huang, L. Liu, X. Li, and K. Wei, “Eye-Gaze Controlled Wheelchair Based on Deep Learning,” Sensors, vol. 23, no. 13, 2023, doi: 10.3390/s23136239.

[6] Y. Tang and J. Su, “Eye Movement Prediction Based on Adaptive BP Neural Network,” Sci. Program., vol. 2021, 2021, doi: 10.1155/2021/4977620.

[7] C. Shi and B. Zee, “Response to: Comment on ‘Pain Perception of the First Eye versus the Second Eye during Phacoemulsification under Local Anesthesia for Patients Going through Cataract Surgery: A Systematic Review and Meta-Analysis,’” J. Ophthalmol., vol. 2021, 2021, doi: 10.1155/2021/6834852.

[8] W. Cao, H. Yu, X. Wu, S. Li, Q. Meng, and C. Chen, “Development and Evaluation of a Rehabilitation Wheelchair with Multiposture Transformation and Smart Control,” Complexity, vol. 2021, 2021, doi: 10.1155/2021/6628802.

[9] S. Choayb, H. Adil, D. Ali Mohamed, N. Allali, L. Chat, and S. El Haddad, “Eye of the Tiger Sign in Pantothenate Kinase-Associated Neurodegeneration,” Case Rep. Radiol., vol. 2021, pp. 1–3, 2021, doi: 10.1155/2021/6633217.

[10] J. Huang, Z. Zhang, G. Xie, and H. He, “Real-Time Precise Human-Computer Interaction System Based on Gaze Estimation and Tracking,” Wirel. Commun. Mob. Comput., vol. 2021, 2021, doi: 10.1155/2021/8213946.

[11] P. Shrestha, S. Sharma, and R. Kharel, “Vogt-Koyanagi-Harada Disease: A Case Series in a Tertiary Eye Center,” Case Rep. Ophthalmol. Med., vol. 2021, pp. 1–5, 2021, doi: 10.1155/2021/8848659.

[12] A. Bilstein, A. Heinrich, A. Rybachuk, and R. Mösges, “Ectoine in the Treatment of Irritations and Inflammations of the Eye Surface,” Biomed Res. Int., vol. 2021, pp. 8–10, 2021, doi: 10.1155/2021/8885032.

[13] S. B. Han, Y. C. Liu, K. Mohamed-Noriega, and J. S. Mehta, “Advances in Imaging Technology of Anterior Segment of the Eye,” J. Ophthalmol., vol. 2021, 2021, doi: 10.1155/2021/9539765.

[14] M. H. Jaffery et al., “FSR-Based Smart System for Detection of Wheelchair Sitting Postures Using Machine Learning Algorithms and Techniques,” J. Sensors, vol. 2022, 2022, doi: 10.1155/2022/1901058.

[15] G. Martone, A. Balestrazzi, G. Ciprandi, and A. Balestrazzi, “Alpha-Glycerylphosphorylcholine and D-Panthenol Eye Drops in Patients Undergoing Cataract Surgery,” J. Ophthalmol., vol. 2022, 2022, doi: 10.1155/2022/1951014.

[16] R. Bai, L. P. Liu, Z. Chen, and Q. Ma, “Cyclosporine (0.05%) Combined with Diclofenac Sodium Eye Drops for the Treatment of Dry Eye Disease,” J. Ophthalmol., vol. 2022, 2022, doi: 10.1155/2022/2334077.

[17] M. Alsalem, M. A. Alharbi, R. A. Alshareef, R. Khorshid, S. Thabet, and A. Alghamdi, “Mania as a Rare Adverse Event Secondary to Steroid Eye Drops,” Case Rep. Psychiatry, vol. 2022, 2022, doi: 10.1155/2022/4456716.

[18] B. Li, “Research on the Evaluation Method of Elderly Wheelchair Comfort Based on Body Pressure Images,” Wirel. Commun. Mob. Comput., vol. 2022, 2022, doi: 10.1155/2022/4666052.

[19] J. M. Araujo, G. Zhang, J. P. P. Hansen, and S. Puthusserypady, “Exploring Eye-Gaze Wheelchair Control,” Eye Track. Res. Appl. Symp., 2020, doi: 10.1145/3379157.3388933.

[20] M. S. H. Sunny et al., “Eye-gaze control of a wheelchair mounted 6DOF assistive robot for activities of daily living,” J. Neuroeng. Rehabil., vol. 18, no. 1, pp. 1–12, 2021, doi: 10.1186/s12984-021-00969-2.

[21] W. Luo, J. Cao, K. Ishikawa, and D. Ju, “A human-computer control system based on intelligent recognition of eye movements and its application in wheelchair driving,” Multimodal Technol. Interact., vol. 5, no. 9, 2021, doi: 10.3390/mti5090050.

[22] M. A. Samani, K. Hooshanfar, H. S. Jey, and S. M. Esmailzadeh, “Eye-Tracking Based Control of a Robotic Arm and Wheelchair for People with Severe Speech and Motor Impairment (SSMI),” pp. 35–41, 2024, doi: 10.1109/icrom60803.2023.10412408.

[23] M. Tyagi, “HOG (Histogram of Oriented Gradients): An Overview,” Towards Data Science.

Copyright

Copyright © 2024 Vishav Mehra. This is an open access article distributed under the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.


Paper Id : IJRASET63082

Publish Date : 2024-06-03

ISSN : 2321-9653

Publisher Name : IJRASET

