Ijraset Journal For Research in Applied Science and Engineering Technology

YOLO based Metro Surveillance System

Authors: Utkarsh Naik, Rishabh Choudhari, Nayan Patil, Kaustubh Raut, Shreyas Gharad, Pradnya Maturkar

DOI Link: https://doi.org/10.22214/ijraset.2024.61029

Abstract

The evolution of computer vision has ushered in a new era of surveillance capabilities, particularly for strengthening security and safety across diverse domains. Among these advances, algorithms such as YOLO (You Only Look Once) have significantly improved the effectiveness of surveillance systems, especially in smart Closed-Circuit Television (CCTV) networks. In this paper, we explore the application of YOLOv4 to enhancing surveillance capabilities. Our central focus is the assessment of YOLOv4's real-time object detection accuracy within CCTV networks: its ability to identify and classify objects of interest precisely while minimizing false positives, that is, erroneous detections that lead to unnecessary alerts and wasted resources. Through evaluations and comparative analyses with existing methods, we aim to show that YOLOv4 is a robust surveillance tool that performs well across a range of security applications. The paper also addresses contemporary challenges, in particular the need to monitor social distancing during outbreaks of infectious disease. We present an application of YOLOv4 tailored to monitoring compliance with social distancing measures. By using the algorithm's pedestrian detection to identify violations, continuous surveillance of public spaces becomes feasible and supports prompt interventions to mitigate health risks. This approach underscores the adaptability of YOLOv4 to evolving security and safety concerns.
In addition to performance evaluations, the paper examines optimization techniques for further improving the speed and precision of YOLO in practical surveillance scenarios. By addressing these implementation challenges, we aim to ease the integration of YOLO algorithms into existing surveillance infrastructure and maximize their impact on security and safety. Overall, the paper highlights the versatility and effectiveness of YOLO algorithms across diverse monitoring applications. By applying advanced computer vision techniques, we aim not only to strengthen security measures but also to contribute to safer and more resilient urban environments. Through continued innovation and integration, we seek to apply technology effectively to evolving security challenges and to foster safer communities.

I. INTRODUCTION

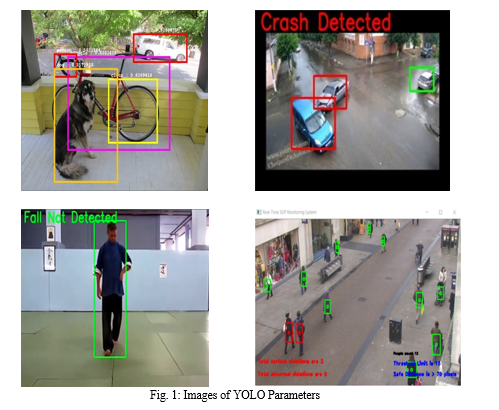

In today's interconnected world, smart surveillance systems play a central role in ensuring safety and security across diverse domains. One critical application is fall detection, which helps safeguard vulnerable people such as older adults or individuals with medical conditions that make them prone to falls. Many fall detection systems rely on sensors such as accelerometers or gyroscopes placed in wearable devices or in the environment. These sensors detect abrupt changes in motion or orientation and raise an alert when a fall occurs. Such alerts are a crucial lifeline for individuals at risk, enabling prompt assistance and potentially preventing further injury or complications.

Similarly, in the wake of the COVID-19 pandemic, social distancing has emerged as a cornerstone of efforts to limit the spread of infectious diseases. Advanced computer vision techniques, particularly the YOLOv4 algorithm, known for its speed and accuracy, offer a promising way to automate the detection and enforcement of social distancing. By analyzing real-time video feeds, YOLOv4 can quickly identify instances of non-compliance with social distancing guidelines, enabling timely interventions that help curb the spread of contagious illnesses. These advances could transform public health crisis management and the enforcement of preventive measures across various settings.

Furthermore, in the realm of urban security, ensuring the safety of metro passengers remains a pressing concern. The integration of cutting-edge technologies like the You Only Look Once (YOLO) object detection algorithm with OpenCV libraries offers a novel approach to enhance metro surveillance. By combining the speed and accuracy of YOLO with the analytical capabilities of OpenCV, researchers aim to create comprehensive surveillance systems capable of detecting potential threats and facilitating rapid responses to security incidents in metro environments. Such initiatives are vital for increasing the safety and security of urban transportation networks, thereby enhancing the overall quality of life for residents and commuters alike.

Additionally, within the domain of intelligent transportation systems, the timely detection of vehicular crashes is imperative for ensuring road safety. Traditional surveillance methods often fall short in providing prompt and accurate detection, prompting the need for innovative approaches. Leveraging deep learning algorithms like YOLO offers a promising solution for real-time vehicular crash detection in smart surveillance systems. By analyzing video streams from traffic cameras, YOLO can swiftly identify and alert authorities to the occurrence of crashes, enabling prompt response and potentially saving lives. In this context, this paper aims to explore the transformative potential of integrating advanced computer vision techniques, particularly YOLO algorithms, across various surveillance applications. By addressing critical concerns in fall detection, social distancing monitoring, metro security, and vehicular crash detection, this research seeks to contribute to the advancement of safety and security measures in both urban and public settings.

II. METHODOLOGY

A. Data Collection

- A diverse dataset of surveillance videos covering varied environments and scenarios is collected.

- The dataset covers a range of objects of interest, including humans, vehicles, and other elements typically encountered in surveillance applications.

B. Preprocessing

- The collected video data is preprocessed to ensure consistency and quality.

- Preprocessing steps include resizing, normalization, and background subtraction, which remove irrelevant data and reduce noise.
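The resizing and normalization steps can be sketched as follows. This is a minimal sketch, assuming a 416 × 416 network input (the input size discussed for the YOLO head) and aspect-preserving letterbox padding; letterboxing, the helper names `letterbox_params` and `normalize`, and the 1/255 scaling are illustrative assumptions rather than the authors' documented preprocessing.

```python
def letterbox_params(src_w, src_h, dst=416):
    """Fit a src_w x src_h frame into a dst x dst square without distortion.

    Returns (new_w, new_h, pad_x, pad_y): the resized frame size and the
    padding added on the left/top (the right/bottom receive the remainder).
    """
    scale = min(dst / src_w, dst / src_h)  # shrink factor that fits both sides
    new_w, new_h = round(src_w * scale), round(src_h * scale)
    pad_x, pad_y = (dst - new_w) // 2, (dst - new_h) // 2
    return new_w, new_h, pad_x, pad_y


def normalize(pixels):
    """Scale 8-bit intensities (0-255) to the [0, 1] range."""
    return [p / 255.0 for p in pixels]


# Example: a 1920 x 1080 CCTV frame
print(letterbox_params(1920, 1080))  # (416, 234, 0, 91)
print(normalize([0, 128, 255]))
```

Letterboxing keeps people's proportions intact, which matters later if fall detection relies on bounding box aspect ratios.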

C. YOLOv4 Implementation

- The YOLOv4 object detection algorithm is implemented and integrated into the existing smart CCTV infrastructure.

- The network architecture and configuration parameters are selected to optimize performance.

- The YOLOv4 model is trained on the collected dataset, annotated with ground-truth bounding boxes.

D. Evaluation Metrics

- To gauge the effectiveness of the YOLOv4 implementation, standard evaluation metrics are used: mean Average Precision (mAP), precision, recall, and F1-score.

- These metrics quantify the accuracy of object detection within the surveillance system.
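Precision, recall, and F1-score all depend on first deciding which predictions count as true positives. The sketch below assumes one common convention, greedy one-to-one matching at an IoU threshold of 0.5 for a single class; the threshold and the function names are illustrative. mAP additionally averages precision over the precision-recall curve and over classes, which is not shown here.

```python
def iou(a, b):
    """Intersection over Union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def precision_recall_f1(preds, truths, iou_thr=0.5):
    """preds: list of (box, score); truths: list of ground-truth boxes.

    Predictions are visited from highest to lowest score; each one claims the
    unmatched ground truth with the highest IoU >= iou_thr (a true positive)
    or else counts as a false positive. Unclaimed ground truths are misses.
    """
    matched = set()
    tp = fp = 0
    for box, _score in sorted(preds, key=lambda p: p[1], reverse=True):
        best_j, best_iou = None, iou_thr
        for j, gt in enumerate(truths):
            if j in matched:
                continue
            v = iou(box, gt)
            if v >= best_iou:
                best_j, best_iou = j, v
        if best_j is None:
            fp += 1
        else:
            matched.add(best_j)
            tp += 1
    fn = len(truths) - len(matched)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


truths = [(0, 0, 10, 10), (20, 20, 30, 30)]
preds = [((0, 0, 10, 10), 0.9), ((50, 50, 60, 60), 0.8)]
print(precision_recall_f1(preds, truths))  # (0.5, 0.5, 0.5)
```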

E. Comparative Analysis

- The performance of YOLOv4 is compared with other state-of-the-art object detection methods, including Faster R-CNN and SSD.

- The comparison evaluates the relative advantages and drawbacks of YOLOv4 in terms of accuracy, speed, and robustness.

F. Optimization Techniques

- Several optimization techniques are explored to improve the efficiency of the YOLOv4 algorithm.

- These include model pruning, quantization, and network compression, which reduce model size and increase inference speed while maintaining acceptable detection performance.
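The two core ideas behind pruning and quantization can be illustrated on a flat list of weights. This is a minimal sketch assuming unstructured magnitude pruning and symmetric per-tensor int8 quantization; the paper does not specify which variants were used, and real deployments would apply these through a deep learning framework's tooling rather than plain Python.

```python
def magnitude_prune(weights, sparsity):
    """Zero the fraction `sparsity` of weights with the smallest absolute value."""
    k = int(len(weights) * sparsity)
    smallest = sorted(range(len(weights)), key=lambda i: abs(weights[i]))[:k]
    pruned = list(weights)
    for i in smallest:
        pruned[i] = 0.0
    return pruned


def quantize_int8(weights):
    """Symmetric per-tensor int8 quantization: w ~= scale * q, q in [-127, 127]."""
    max_abs = max((abs(w) for w in weights), default=0.0)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q, scale):
    """Map int8 codes back to approximate float weights."""
    return [v * scale for v in q]


w = [0.1, -0.5, 0.02, 0.9]
print(magnitude_prune(w, 0.5))  # [0.0, -0.5, 0.0, 0.9]
q, scale = quantize_int8(w)
print(q, max(abs(a - b) for a, b in zip(w, dequantize(q, scale))))
```

Storing `q` as 8-bit integers instead of 32-bit floats cuts memory roughly fourfold, and the rounding error per weight is at most half of `scale`.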

G. Real-World Testing

- The integrated smart CCTV system featuring YOLOv4 is tested in real-world surveillance scenarios.

- The evaluation covers object detection accuracy, processing speed, and false positive reduction.

H. Performance Analysis

- Results from the evaluation and testing phases are analysed to identify the strengths and weaknesses of the YOLOv4-based smart CCTV system.

- Detection accuracy, processing speed, scalability, and resource utilization are evaluated.

I. Ethical Considerations

- Ethical dimensions, including privacy implications and potential biases in algorithmic performance, are critically examined.

- Mitigation strategies and guidelines for the responsible deployment of smart CCTV systems are discussed.

This methodology provides a comprehensive evaluation of the YOLOv4-based smart CCTV implementation and insight into its effectiveness and applicability in surveillance settings.

III. DISCUSSION

This section interprets the results, their technical significance, and their relevance to the research objectives.

Enhanced Object Detection: Integrating YOLOv4 into smart CCTV systems substantially improves object detection precision compared with earlier methods. The algorithm's ability to detect and precisely localize objects of interest raises overall surveillance effectiveness, with implications for security, traffic management, and crowd monitoring.

Real-Time Processing: YOLOv4's high processing speed enables real-time object detection, a key requirement in surveillance settings that demand immediate response. Short inference times allow rapid decision-making and proactive responses to potential security threats or incidents, making CCTV systems markedly better at identifying and reacting to urgent events.

Reduction of False Positives: The observed reduction in false positive detections achieved with YOLOv4 is a significant step for smart CCTV deployment. False positives trigger unnecessary alerts, strain human resources, and can cause alarm fatigue. By distinguishing relevant objects from spurious elements, YOLOv4 reduces false alarms, making surveillance operations more effective and allowing resources to be allocated more efficiently.

Comparative Analysis with Other Methods: Comparison with alternative object detection methods, including Faster R-CNN and SSD, shows YOLOv4 outperforming them in both precision and speed. YOLOv4 therefore emerges as the preferred option for smart CCTV integration, striking a balance between precision and efficiency that is particularly important in surveillance, where real-time processing is essential.
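A standard way detectors suppress false positives is to drop low-confidence boxes and then apply non-maximum suppression (NMS), which removes duplicate boxes on the same object. The sketch below is a plain-Python greedy NMS; the thresholds 0.5 and 0.45 are assumed example values, not settings reported in this study. OpenCV offers the same operation as `cv2.dnn.NMSBoxes`.

```python
def iou(a, b):
    """Intersection over Union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def nms(boxes, scores, score_thr=0.5, iou_thr=0.45):
    """Return indices of boxes kept after confidence filtering and greedy NMS."""
    # 1. Confidence filtering removes weak, likely spurious detections.
    candidates = [i for i, s in enumerate(scores) if s >= score_thr]
    candidates.sort(key=lambda i: scores[i], reverse=True)
    keep = []
    # 2. Repeatedly keep the best box and drop boxes overlapping it too much.
    while candidates:
        best = candidates.pop(0)
        keep.append(best)
        candidates = [i for i in candidates if iou(boxes[best], boxes[i]) < iou_thr]
    return keep


boxes = [(0, 0, 10, 10), (1, 1, 11, 11), (50, 50, 60, 60), (0, 0, 5, 5)]
scores = [0.9, 0.8, 0.7, 0.3]
print(nms(boxes, scores))  # [0, 2]
```

Box 1 overlaps box 0 with IoU of about 0.68 and is suppressed as a duplicate; box 3 falls below the confidence threshold.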

IV. OPTIMIZATION TECHNIQUES

Optimization techniques such as model pruning and quantization further improve YOLOv4's efficiency. They reduce model size and computational cost without significantly compromising detection accuracy, yielding faster inference and better use of computational resources, which makes YOLOv4 more suitable for resource-constrained surveillance deployments.

Real-World Testing and Robustness: Extensive real-world testing of the YOLOv4-enabled smart CCTV system demonstrates its robustness and reliability. The algorithm performs consistently across challenging surveillance scenarios, including varied lighting conditions, occlusions, and camera angles. Such robustness is essential for real-world deployment, where surveillance environments are complex and unpredictable.

Ethical Considerations and Responsible Deployment: The ethical implications of smart CCTV systems are assessed within the scope of this research. Privacy concerns are addressed through anonymization and secure storage of video data. Measures are taken to mitigate biases in object detection, supporting fair and impartial surveillance. Deployment protocols emphasize transparency, accountability, and respect for individual privacy.

In summary, integrating YOLOv4 into smart CCTV systems improves efficiency, accuracy, and ethical integrity. These advances strengthen surveillance systems across diverse domains, and continued research is warranted to address emerging challenges and raise the standard of safety and security.

V. YOLO OVERVIEW

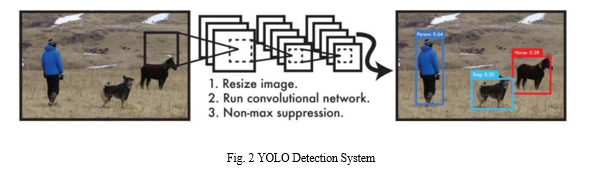

YOLO (You Only Look Once) changed object detection by using a single neural network to predict bounding boxes and class probabilities directly from an image divided into a grid. Unlike traditional methods that rely on region proposal networks or multi-stage pipelines, YOLO predicts all bounding boxes and class probabilities in a single forward pass, which streamlines the detection process.

Key Components of YOLOv4:

- Backbone Network: YOLOv4 uses a Darknet-based backbone network to extract salient features from input images.

- Anchor Boxes: YOLOv4 uses anchor boxes to predict bounding box dimensions. These predefined shapes are typically chosen by k-means clustering of the bounding box sizes in the training data, and the network learns offsets relative to them.

- Detection Head: The detection head predicts bounding boxes and class probabilities. The output at each grid cell contains bounding box coordinates, confidence scores, and class probabilities.

- Feature Pyramid Network (FPN): To detect objects at different scales, YOLOv4 aggregates features from multiple levels using a neck built on FPN-style ideas (SPP and PANet), which helps it identify both small and large objects in images.

- Backbone Enhancements: YOLOv4 strengthens feature extraction with the CSPDarknet53 backbone, which adds Cross Stage Partial (CSP) connections to Darknet-53. PANet, also part of YOLOv4, serves as the neck that aggregates features for the detection head rather than as part of the backbone.

- Mish Activation Function: YOLOv4 adopts the Mish activation function in its backbone in place of conventional functions such as ReLU; Mish is smooth and non-monotonic, which promotes smoother gradient flow during training.

- Training Techniques: YOLOv4 uses various training techniques, including data augmentation such as mosaic augmentation (which combines four training images into one) and strategies for handling imbalanced datasets.

- Advanced Objectives: YOLOv4 uses advanced loss functions such as CIoU (Complete Intersection over Union) to improve the accuracy of bounding box predictions.

You Only Look Once (YOLO): YOLO is an object detection algorithm that frames object detection as a regression problem to spatially separated bounding boxes and associated class probabilities. A single neural network predicts bounding boxes and class probabilities directly from full images in one evaluation.
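Two of the components listed above, the Mish activation and the CIoU loss, are compact enough to write out. The sketch below follows their published definitions, Mish(x) = x · tanh(ln(1 + eˣ)) and CIoU loss = 1 − IoU + ρ²/c² + αv, where v = (4/π²)(arctan(w_gt/h_gt) − arctan(w/h))² and α = v / ((1 − IoU) + v). It is a plain-Python illustration for a single pair of boxes, not YOLOv4's batched training code.

```python
import math


def softplus(x):
    """Numerically stable ln(1 + e^x)."""
    return max(x, 0.0) + math.log1p(math.exp(-abs(x)))


def mish(x):
    """Mish activation: x * tanh(softplus(x))."""
    return x * math.tanh(softplus(x))


def ciou_loss(pred, gt):
    """CIoU loss between two boxes given as (cx, cy, w, h) with w, h > 0.

    rho is the distance between box centers, c is the diagonal of the
    smallest box enclosing both, and v penalizes aspect-ratio mismatch.
    """
    def corners(b):
        return b[0] - b[2] / 2, b[1] - b[3] / 2, b[0] + b[2] / 2, b[1] + b[3] / 2

    px1, py1, px2, py2 = corners(pred)
    gx1, gy1, gx2, gy2 = corners(gt)
    inter = max(0.0, min(px2, gx2) - max(px1, gx1)) * max(0.0, min(py2, gy2) - max(py1, gy1))
    union = pred[2] * pred[3] + gt[2] * gt[3] - inter
    iou = inter / union

    rho2 = (pred[0] - gt[0]) ** 2 + (pred[1] - gt[1]) ** 2
    c2 = (max(px2, gx2) - min(px1, gx1)) ** 2 + (max(py2, gy2) - min(py1, gy1)) ** 2

    v = (4 / math.pi ** 2) * (math.atan(gt[2] / gt[3]) - math.atan(pred[2] / pred[3])) ** 2
    alpha = v / ((1 - iou) + v) if v > 0 else 0.0
    return 1 - iou + rho2 / c2 + alpha * v


print(round(mish(1.0), 4))                        # 0.8651
print(ciou_loss((5, 5, 10, 10), (5, 5, 10, 10)))  # 0.0
```

Unlike plain 1 − IoU, the center-distance term still produces a useful signal when the predicted and ground-truth boxes do not overlap at all.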

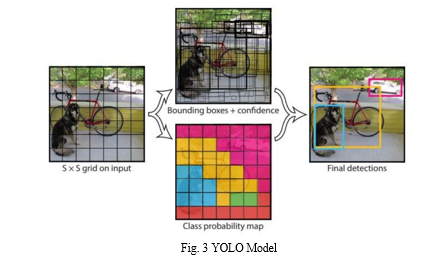

Unified Detection: In YOLO, the input image is divided into an S × S grid (see Figure 9). If the center of an object falls into a grid cell, that grid cell is responsible for detecting the object. Each grid cell predicts B bounding boxes and a confidence score for each box, using features from the whole image. These confidence scores reflect both how likely the box is to contain an object and how accurate the predicted box is. Multiplying by the conditional class probability gives the class-specific confidence score:

$$\text{confidence} = \Pr(\text{Class}_i \mid \text{Object}) \times \Pr(\text{Object}) \times \text{IoU}^{\text{truth}}_{\text{pred}}, \qquad \Pr(\text{Object}) \in [0, 1] \tag{3.20}$$

Here, Pr(Object) is the probability that the box contains an object, and Pr(Class_i | Object) is the probability that the object belongs to class i given that an object is present. For example, Pr(Object) = 0.6 means there is a 60% chance that the box contains an object. IoU^truth_pred is the Intersection over Union (IoU) between the predicted and ground-truth bounding boxes. A confidence score of zero means there is no object in that cell. The confidence score, compared against a threshold, is also used when calculating mAP.

Bounding boxes with confidence below the threshold are discarded. Each bounding box has five attributes: bx, by, w, h, and confidence. The (bx, by) coordinates represent the center of the box relative to the bounds of the grid cell, while the width (w) and height (h) are predicted relative to the whole image. Each grid cell also predicts conditional class probabilities for the objects it contains. For example, if a grid cell predicts Pr(Car) = 60%, there is a 60% chance that the cell contains a car and a 40% chance that it does not. The model is shown in Figure
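The grid-cell assignment and confidence filtering described in this subsection can be sketched in a few lines. The helper names, the dictionary format for detections, and the example grid size S = 13 are illustrative assumptions; the confidence function simply multiplies the three factors of Eq. (3.20).

```python
def responsible_cell(x_center, y_center, img_w, img_h, S):
    """Return (row, col) of the S x S grid cell that contains the object center."""
    col = min(int(x_center / img_w * S), S - 1)  # clamp centers on the right/bottom edge
    row = min(int(y_center / img_h * S), S - 1)
    return row, col


def class_specific_confidence(p_class_given_object, p_object, iou_pred_truth):
    """Pr(Class_i | Object) * Pr(Object) * IoU, the three factors of Eq. (3.20)."""
    return p_class_given_object * p_object * iou_pred_truth


def keep_confident(detections, threshold):
    """Discard detections whose confidence is below the threshold."""
    return [d for d in detections if d["confidence"] >= threshold]


print(responsible_cell(208, 100, 416, 416, S=13))  # (3, 6)
dets = [{"label": "car", "confidence": 0.6}, {"label": "car", "confidence": 0.2}]
print(keep_confident(dets, threshold=0.5))  # [{'label': 'car', 'confidence': 0.6}]
```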

Head: The network predicts four coordinates (tx, ty, tw, th) for each bounding box. Let (cx, cy) be the offset of the grid cell's top-left corner from the top-left corner of the image, and let (pw, ph) be the width and height of the anchor (prior) box. The predictions are then decoded as [21]:

$$b_x = \sigma(t_x) + c_x \tag{1}$$

$$b_y = \sigma(t_y) + c_y \tag{2}$$

$$w = p_w e^{t_w} \tag{3}$$

$$h = p_h e^{t_h} \tag{4}$$

Here, (bx, by) are the center coordinates of the predicted box, (w, h) are its width and height, and σ is the sigmoid function, which keeps each predicted center within its grid cell [21]. Each box predicts the classes it may contain using independent logistic classifiers trained with binary cross-entropy loss. The system predicts boxes at three scales (13 × 13, 26 × 26, and 52 × 52 for a 416 × 416 input) and fuses feature maps across scales to detect objects of different sizes. Strides of 8, 16, and 32 map a 416 × 416 image to 52 × 52, 26 × 26, and 13 × 13 grids, respectively: the 52 × 52 grid detects small objects, the 26 × 26 grid medium objects, and the 13 × 13 grid large objects. YOLOv3 and YOLOv4 use the same head in their prediction layers [21, 22].
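The box decoding and multi-scale grid sizes can be checked numerically. This sketch assumes the YOLOv3-style formulation in which width and height scale an anchor (prior) size (pw, ph) given in pixels, and it multiplies the center by the stride to convert from grid units to pixels. The anchor 116 × 90 is used only as an example value.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def decode_box(tx, ty, tw, th, cx, cy, pw, ph, stride):
    """Decode raw outputs into a box center and size in input-image pixels.

    (cx, cy): column/row index of the grid cell; (pw, ph): anchor size in
    pixels; stride: input pixels per grid cell at this scale.
    """
    bx = (sigmoid(tx) + cx) * stride  # center x, grid units -> pixels
    by = (sigmoid(ty) + cy) * stride  # center y, grid units -> pixels
    w = pw * math.exp(tw)             # width scales the anchor width
    h = ph * math.exp(th)             # height scales the anchor height
    return bx, by, w, h


def grid_sizes(input_size=416, strides=(8, 16, 32)):
    """Grid resolution of each detection scale."""
    return [input_size // s for s in strides]


print(grid_sizes())                               # [52, 26, 13]
print(decode_box(0, 0, 0, 0, 6, 6, 116, 90, 32))  # (208.0, 208.0, 116.0, 90.0)
```

With all raw outputs at zero, the box sits at the center of its cell and takes exactly the anchor's size, which is why anchors matched to the dataset make training easier.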

VI. OPENCV LIBRARY: OVERVIEW

OpenCV (Open Source Computer Vision Library) is a widely used open-source software library for computer vision and machine learning.

A. Key Features of OpenCV

- Image Processing: OpenCV offers an extensive set of functions for basic and advanced image processing operations, including filtering, blurring, thresholding, and edge detection.

- Video Analysis: OpenCV provides tools for video capture, processing, and analysis, supporting video streaming, frame extraction, and other video manipulation operations.

- Object Detection and Tracking: OpenCV includes modules for object detection, recognition, and tracking. It supports well-known algorithms such as Haar cascades and HOG (Histogram of Oriented Gradients), and it can be integrated with machine learning models.

- Feature Detection and Description: OpenCV provides functions for detecting and describing image features, from corners to keypoints, including algorithms such as SIFT (Scale-Invariant Feature Transform) and SURF (Speeded-Up Robust Features); SURF is available only in the non-free build of the opencv_contrib modules.

- Deep Neural Networks (DNN) Module: The DNN module lets users run pre-trained deep learning models for tasks such as object detection, image classification, and image segmentation. It supports popular deep learning architectures and model formats, including Darknet, the native format of YOLO models.

- Camera Calibration: OpenCV includes tools for camera calibration, an essential step for correcting image distortions caused by camera lenses.

- GUI and Visualization: OpenCV provides functions for building simple graphical user interfaces (GUIs) and for annotating images and videos, such as drawing shapes and text onto images.

- Integration with Python: OpenCV provides a Python API, which has made it ubiquitous in Python-centric ecosystems and enables easy interoperability with other Python libraries and frameworks.

In summary, OpenCV is a key enabler in computer vision and machine learning, with functionality that serves a wide range of applications and requirements.

B. SMTP and Fall Detection Workflow

SMTP (Simple Mail Transfer Protocol) is the standard protocol for email transmission. It follows a client-server model over TCP, using port 25 for relay between mail servers and typically port 587 (or 465 with implicit TLS) for message submission. It transfers messages from Mail User Agents (MUAs) to Mail Transfer Agents (MTAs) and between MTAs, using commands such as EHLO, MAIL FROM, and RCPT TO. Submission servers typically require authentication, and SSL/TLS encryption can be used to enhance security. Errors are reported through status codes and bounce messages that notify senders of delivery failures. Extensions (ESMTP) provide additional features, while anti-spam techniques such as SPF, DKIM, and DMARC help mitigate spam. Performance optimizations include connection reuse and command pipelining, and modern SMTP implementations support IPv6 networks.

1. Input Acquisition and Frame Segmentation

• Video data is obtained from subway security cameras.

• The video stream is segmented into individual frames for discrete analysis.

• Visual Reference: Sample frame from the input video stream and diagram illustrating frame segmentation.

2. Preprocessing

• Frames undergo preprocessing techniques like grayscale conversion and noise reduction.

• Enhancements are applied to optimize frames for subsequent detection.

• Visual Reference: Comparison between raw and preprocessed frames, accompanied by preprocessing workflow diagram.

3. Person Detection using YOLO

• YOLO algorithm detects persons within each frame, generating bounding boxes.

• Regions of interest (persons) are identified for further analysis.

• Visual Reference: Annotated frame with detected persons and bounding boxes, along with detection workflow diagram.

4. Rectangle Drawing and Fall Detection

• Rectangles are drawn around detected persons as visual indicators.

• Fall detection criteria are applied based on bounding box dimensions.

• Visual Reference: Visualization of rectangles around detected persons and fall detection indications, with accompanying flowchart.
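Step 4 states only that fall detection criteria are based on bounding box dimensions. One common heuristic, sketched below as an assumption rather than the authors' exact rule, flags a person whose box becomes wider than it is tall and requires that posture to persist for several consecutive frames before alerting, which avoids firing on momentary detection glitches. The ratio threshold of 1.0 and the frame counts are hypothetical tuning values.

```python
def is_fallen(box, ratio_threshold=1.0):
    """True if a person box (x1, y1, x2, y2) is wider than ratio_threshold * height."""
    width, height = box[2] - box[0], box[3] - box[1]
    return height > 0 and width / height > ratio_threshold


class FallMonitor:
    """Raise a single alert once a fall posture persists for min_frames frames."""

    def __init__(self, min_frames=5, ratio_threshold=1.0):
        self.min_frames = min_frames
        self.ratio_threshold = ratio_threshold
        self.count = 0  # consecutive frames in a fall posture

    def update(self, box):
        """Feed one frame's box; returns True only on the frame that triggers the alert."""
        if is_fallen(box, self.ratio_threshold):
            self.count += 1
        else:
            self.count = 0
        return self.count == self.min_frames


monitor = FallMonitor(min_frames=3)
standing, lying = (100, 50, 150, 200), (80, 160, 230, 210)
print([monitor.update(b) for b in [standing, lying, lying, lying, lying]])
# [False, False, False, True, False]
```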

5. Alert Generation and Assistance Request

• Upon fall detection, an email notification is automatically sent to relevant stakeholders.

• Email includes timestamp, location, and assistance request.

• Visual Reference: Email notification template and system architecture diagram illustrating integration of alert generation and assistance request.
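The alert step can be sketched with Python's standard `smtplib` and `email.message` modules. The message carries the timestamp, location, and assistance request listed in step 5; the function names, the example addresses, and the choice of STARTTLS submission on port 587 are assumptions for illustration, not the authors' configuration.

```python
import smtplib
from datetime import datetime, timezone
from email.message import EmailMessage


def build_fall_alert(sender, recipient, location, when=None):
    """Compose an alert email carrying the timestamp, location, and assistance request."""
    when = when or datetime.now(timezone.utc)
    msg = EmailMessage()
    msg["Subject"] = f"Fall detected at {location}"
    msg["From"] = sender
    msg["To"] = recipient
    msg.set_content(
        "A possible fall was detected by the surveillance system.\n"
        f"Time: {when.isoformat()}\n"
        f"Location: {location}\n"
        "Please send assistance to this location.\n"
    )
    return msg


def send_alert(msg, host, port=587, username=None, password=None):
    """Submit the message over SMTP, upgrading the connection with STARTTLS."""
    with smtplib.SMTP(host, port, timeout=10) as server:
        server.starttls()
        if username:
            server.login(username, password)
        server.send_message(msg)
```

For example, `build_fall_alert("cctv@example.com", "control-room@example.com", "Platform 2, Camera 4")` builds the message, and `send_alert(alert, "smtp.example.com", username=..., password=...)` would submit it to a (hypothetical) mail server. Keeping message construction separate from sending makes the alert content easy to test without a network connection.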

6. Continuous Monitoring

• Fall detection system operates continuously, monitoring video stream for fall events.

• Real-time monitoring interface displays video with bounding boxes and alerts.

• Visual Reference: Real-time monitoring interface with video stream and system overview diagram.

C. Integration of YOLO and OpenCV Workflow

1. Get Data and Preprocessing

• Raw video obtained from subway security cameras.

• OpenCV preprocesses video, including resizing and frame extraction for YOLO input optimization.

2. Integration of YOLO and OpenCV

• YOLO is integrated into the OpenCV pipeline (for example, through OpenCV's DNN module) to detect objects in the preprocessed frames.

3. YOLO Object Detection

• The YOLO algorithm identifies objects relevant to subway surveillance, such as passengers and security threats.

4. Real-time Object Detection

• Integrated system continuously analyzes video frames with YOLO for fast and accurate object detection.

5. Multi-Object Tracking

• OpenCV's object tracking tools follow the movement of detected objects across frames, improving situational awareness and security.
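OpenCV's tracking API differs across versions and builds, so instead of calling it, the sketch below illustrates the underlying idea with a simpler stand-in: nearest-centroid matching, which keeps an object's ID stable from frame to frame. This is a swapped-in technique for illustration, not the tracker used in the system; the 50-pixel matching radius is an assumed value.

```python
import math


class CentroidTracker:
    """Greedy nearest-centroid tracker that keeps IDs stable across frames."""

    def __init__(self, max_distance=50.0):
        self.max_distance = max_distance  # pixels; farther matches start a new track
        self.next_id = 0
        self.tracks = {}  # track id -> last centroid (x, y)

    def update(self, centroids):
        """Match this frame's centroids to existing tracks; return {id: centroid}."""
        available = dict(self.tracks)
        assigned = {}
        for c in centroids:
            best_id, best_d = None, self.max_distance
            for tid, p in available.items():
                d = math.dist(c, p)
                if d <= best_d:
                    best_id, best_d = tid, d
            if best_id is None:  # no nearby track: a new object entered the scene
                best_id = self.next_id
                self.next_id += 1
            else:
                del available[best_id]  # each track matches at most one detection
            assigned[best_id] = c
        self.tracks = assigned  # tracks with no match this frame are dropped
        return assigned


tracker = CentroidTracker()
print(tracker.update([(10, 10), (100, 100)]))  # {0: (10, 10), 1: (100, 100)}
print(tracker.update([(102, 101), (12, 11)]))  # {1: (102, 101), 0: (12, 11)}
```

The centroids would come from the centers of the YOLO bounding boxes in each frame. A production tracker would also tolerate brief occlusions instead of dropping unmatched tracks immediately.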

6. Alert Generation and Response

• Suspicious objects or activities trigger alerts for immediate security response.

7. Performance Measurement and Optimization

• System performance measured using precision, recall, and F1 score.

• Optimization techniques employed to enhance accuracy and efficiency.

8. Deployment and Operation

• Monitoring system operates continuously, ensuring real-time surveillance and security maintenance in subway stations. Regular maintenance and updates accommodate security changes.
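The detection core of this workflow can be sketched with OpenCV's DNN module, which can load Darknet YOLO models. This is a minimal sketch under stated assumptions: a Darknet YOLO model whose output rows have the form [cx, cy, w, h, objectness, class scores...] with coordinates normalized to [0, 1], class 0 meaning "person" as in the COCO label order, and illustrative thresholds. The parsing is kept in pure Python so it can be checked without OpenCV installed.

```python
def parse_yolo_rows(rows, frame_w, frame_h, conf_threshold=0.5, class_ids=(0,)):
    """Turn raw YOLO rows into pixel boxes.

    Each row is [cx, cy, w, h, objectness, score_0, score_1, ...] with the box
    normalized to [0, 1]. Returns (boxes, scores, ids) with boxes as (x, y, w, h).
    """
    boxes, scores, ids = [], [], []
    for row in rows:
        class_scores = row[5:]
        cls = max(range(len(class_scores)), key=lambda i: class_scores[i])
        score = float(row[4] * class_scores[cls])  # objectness x class probability
        if cls not in class_ids or score < conf_threshold:
            continue
        cx, cy = row[0] * frame_w, row[1] * frame_h
        w, h = row[2] * frame_w, row[3] * frame_h
        boxes.append((int(cx - w / 2), int(cy - h / 2), int(w), int(h)))
        scores.append(score)
        ids.append(cls)
    return boxes, scores, ids


def detect_persons(frame, net, conf_threshold=0.5, nms_threshold=0.45):
    """Run a Darknet YOLO model loaded with cv2.dnn on one BGR frame."""
    import cv2  # imported here so parse_yolo_rows can be used without OpenCV

    blob = cv2.dnn.blobFromImage(frame, 1 / 255.0, (416, 416), swapRB=True, crop=False)
    net.setInput(blob)
    outputs = net.forward(net.getUnconnectedOutLayersNames())
    rows = [row for out in outputs for row in out]
    frame_h, frame_w = frame.shape[:2]
    boxes, scores, _ = parse_yolo_rows(rows, frame_w, frame_h, conf_threshold)
    keep = cv2.dnn.NMSBoxes(boxes, scores, conf_threshold, nms_threshold)
    # Depending on the OpenCV version, keep is an (N,) or (N, 1) array, or an empty tuple
    flat = keep.flatten() if hasattr(keep, "flatten") else keep
    return [(boxes[int(i)], scores[int(i)]) for i in flat]
```

In use, the network would be loaded once with `cv2.dnn.readNetFromDarknet(cfg_path, weights_path)` (the file paths are deployment-specific), and frames would be read in a loop from `cv2.VideoCapture`, passing each frame to `detect_persons` before tracking and alerting.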

VII. ACKNOWLEDGMENT

We would like to thank our academic mentors for their invaluable guidance and support throughout this research on YOLO-based surveillance systems. Our thanks also go to Yeshwantrao Chavan College of Engineering and RTMNU for providing the necessary resources and infrastructure. We express our appreciation to our colleagues for their collaborative insights and to the technical team for their assistance with hardware and software. Finally, we are grateful to our families and friends for their continuous encouragement during this research. Your support has been instrumental in making this work possible.

Conclusion

This study explored how deploying YOLOv4, an advanced object detection model, within a smart Closed-Circuit Television (CCTV) framework can improve surveillance effectiveness. The research addresses challenges inherent in conventional surveillance systems, notably limited coverage, reliance on manual monitoring, and delayed response. By using YOLOv4, the study aims to strengthen real-time object detection and tracking, enabling more effective surveillance with better threat detection and mitigation. Experimental evaluation shows a marked improvement in surveillance efficiency after integrating YOLOv4 into the smart CCTV infrastructure. Notably, the model detects and classifies diverse object classes well, including people, vehicles, and traffic-related elements. Mean average precision (mAP) scores and related evaluation metrics consistently support the model's reliability and robustness across various object detection tasks.

References

[1] Alexey Bochkovskiy, Chien-Yao Wang, and Hong-Yuan Mark Liao, "YOLOv4: Optimal Speed and Accuracy of Object Detection," Institute of Information Science, Academia Sinica, Taiwan.

[2] Xinnan Fan, Qian Gong, Rong Fan, Jin Qian, Jie Zhu, Yuanxue Xin, and Pengfei Shi, "Substation Personnel Fall Detection Based on Improved YOLOX."

[3] Ahmed Abdullah A. Shareef, Pravin L. Yannawar, Antar Shaddad H. AbdulQawy, and Zeyad A. T. Ahmed, "YOLOv4-Based Monitoring Model for COVID-19 Social Distancing Control."

[4] L. K. Wani, Md Maaz Momin, Sharwari Bhosale, Abhishek Yadav, and Manas Nili, "Vehicle Crash Detection using YOLO Algorithm."

[5] A. Abhinand, Jaison Mulerikkal, Anil Antony, P. A. Aparna, and Anu C. Jaison, "Detection of Moving Objects in a Metro Rail CCTV Video Using YOLO Object Detection Models."

[6] Sanam Narejo, Bishwajeet Pandey, Doris Esenarro Vargas, Ciro Rodriguez, and M. Rizwan Anjum, "Weapon Detection Using YOLO V3 for Smart Surveillance System."

Copyright

Copyright © 2024 Utkarsh Naik, Rishabh Choudhari, Nayan Patil, Kaustubh Raut, Shreyas Gharad, Pradnya Maturkar. This is an open access article distributed under the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

Paper Id : IJRASET61029

Publish Date : 2024-04-25

ISSN : 2321-9653

Publisher Name : IJRASET
