Ijraset Journal For Research in Applied Science and Engineering Technology

YOLOv3 Based Smart Assistive Navigation System for Visually Impaired Individuals

Authors: Rakesh N, Shashank V, Sudeep B M, Mrs. Sushma K Sattigeri

DOI Link: https://doi.org/10.22214/ijraset.2024.63166

Abstract

Many people live with blindness or vision impairment, either from childhood or due to other causes. These individuals frequently find it difficult to move precisely from a source to a destination, which disrupts their daily routines. Currently, most rely on ordinary walking sticks, which are not always useful, especially in outdoor environments, and this leads to accidents and other difficulties. This paper focuses on improving the quality of life of blind and visually impaired individuals by providing a wearable device that enhances navigation and supports their needs in indoor and outdoor environments. The proposed solution uses voice assistant technology, which allows users to communicate easily and receive clear instructions. A Raspberry Pi camera module captures images and streams video to the Raspberry Pi, while an ultrasonic sensor measures distance to detect obstacles. Furthermore, a Neo 6M GPS module provides precise location data and navigation guidance, helping users reach their destination safely and efficiently.

Introduction

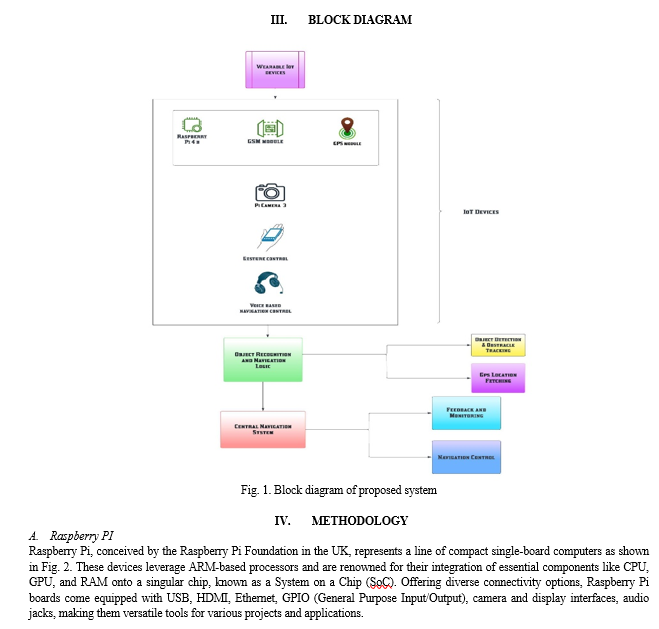

I. INTRODUCTION

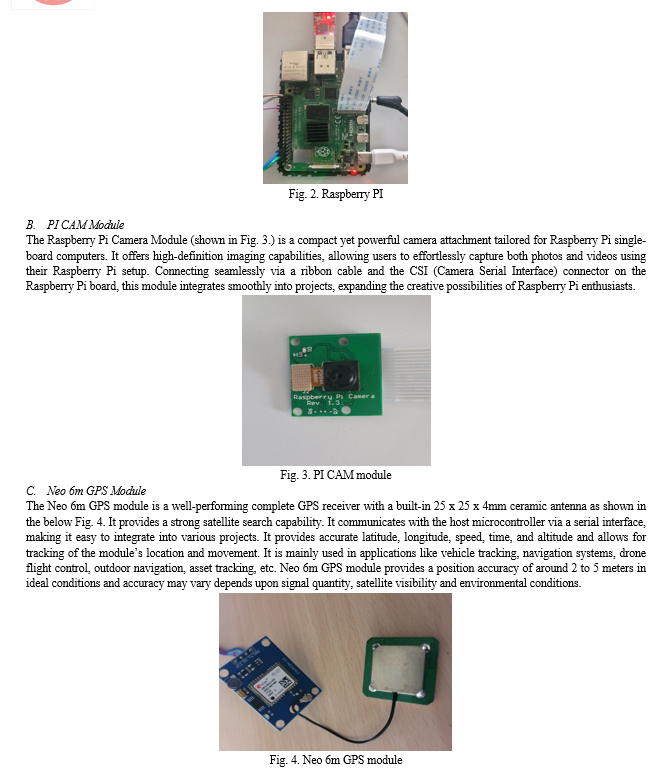

In the past, blind people depended on relatives and friends to help them move from one place to another, but such help was not available whenever they were alone. They faced challenges such as difficulty going outside, reading, and working independently. Accidents were common because they could not perceive obstacles and hazards. Although they could sometimes rely on strong smells to infer their surroundings, this technique is not always reliable. They can memorize indoor routes for daily use, but not outdoor routes, because the objects and obstacles in a given outdoor area change from day to day and cannot be predicted. Guide dogs can assist outdoors, but they are not always accessible or affordable. A wooden stick is practical indoors but far less effective outdoors. Some people find their way indoors by touching chairs, walls, and similar objects, but moving freely outdoors remains difficult.

According to the World Health Organization (WHO), around 2.2 billion people globally have a vision impairment, with common causes including refractive errors, cataracts, diabetic retinopathy, glaucoma, and age-related macular degeneration. With recent advances in technology, there has been significant research into electronic solutions for the visually impaired. However, these solutions are frequently expensive and not easily accessible.

To address these challenges, we propose a wearable device to aid visually impaired individuals in both indoor and outdoor navigation. The system integrates several components. A Pi Camera module captures images and streams video to the Raspberry Pi. An ultrasonic sensor measures distance and detects objects or obstacles without physical contact. A Neo 6M GPS module provides accurate positioning and navigation capabilities for outdoor use. A microphone and speaker support the voice assistant, enhancing the user experience and improving independence in daily activities. The main objectives of the proposed model are to develop a smart assistive navigation system that provides real-time object detection, navigation feedback, and gesture interaction; to enhance safety for blind people so that they can navigate more confidently; and to build a portable, lightweight, effective, and user-friendly system.

II. MOTIVATION

Developing a YOLOv3-based smart assistive navigation system for visually impaired individuals is motivated by the fundamental goal of improving their independence, safety, and overall quality of life. Blind people face many difficulties when walking in indoor and outdoor environments, and they depend on assistance such as canes, guide dogs, or guidance from other people.

These aids cannot give exact information about obstacles, and they provide no information about routes. The smart assistive navigation system addresses these limitations by using technologies such as sensors, GPS, and machine learning to help in real time.

With obstacle detection, blind people can move more freely, recognize important places, and use positioning to follow the safest path. The system also offers voice assistance, such as spoken instructions, and touch-based signals, which give the user clear, easily understood instructions. A resourceful navigation system can adapt to different places and situations, giving helpful guidance whether the user is indoors, on public transport, or outside. The main reason for creating a YOLOv3-based smart assistive navigation system for visually impaired people is that every person deserves to move freely and independently, no matter their abilities.

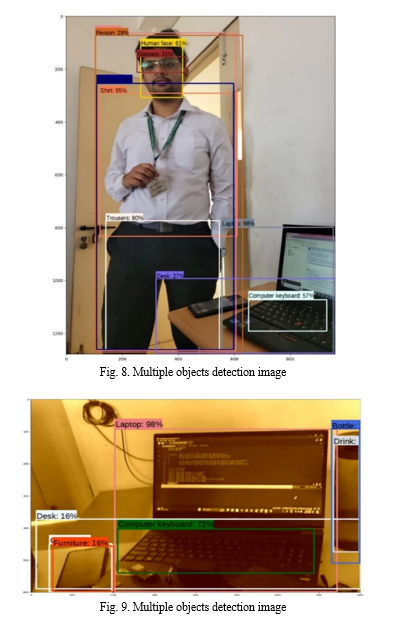

G. Software

The project's main focus lies in constructing a real-time object detection system utilizing the YOLOv3 architecture and OpenCV within the Python programming environment. The primary goal is to develop a robust system capable of identifying objects within live video streams captured from an IP camera. The approach involves leveraging functions like cv2.VideoCapture for video feed acquisition, loading the YOLOv3 model along with its configuration and weights using cv2.dnn.readNetFromDarknet, and preprocessing frames through blob conversion with cv2.dnn.blobFromImage to facilitate object detection. This process encompasses the detection of objects, applying confidence thresholds, and utilizing Non-Maximum Suppression (NMS) to precisely localize bounding boxes.
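The post-processing steps named above (confidence thresholding followed by Non-Maximum Suppression) can be sketched in plain Python. This is a minimal illustration, not the authors' implementation: it assumes each raw YOLOv3 output row has the layout `[cx, cy, w, h, objectness, class scores...]` with coordinates normalized to the frame, takes the best class score as the confidence, and uses hypothetical names (`decode_rows`, `iou`, `nms`) and threshold values (0.5 and 0.4). In an OpenCV pipeline the suppression step would typically be delegated to `cv2.dnn.NMSBoxes`.

```python
from typing import List, Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x, y, w, h): top-left corner, in pixels


def decode_rows(rows: Sequence[Sequence[float]], frame_w: int, frame_h: int,
                conf_threshold: float = 0.5) -> Tuple[List[Box], List[float], List[int]]:
    """Convert raw rows into pixel boxes, keeping only confident detections.

    Assumed row layout: [cx, cy, w, h, objectness, class_0, class_1, ...],
    with the first four values normalized to the range 0..1.
    """
    boxes: List[Box] = []
    scores: List[float] = []
    class_ids: List[int] = []
    for row in rows:
        class_scores = list(row[5:])
        if not class_scores:
            continue  # malformed row: no class scores present
        class_id = max(range(len(class_scores)), key=class_scores.__getitem__)
        score = class_scores[class_id]
        if score < conf_threshold:
            continue  # confidence threshold
        w, h = row[2] * frame_w, row[3] * frame_h
        cx, cy = row[0] * frame_w, row[1] * frame_h
        boxes.append((cx - w / 2, cy - h / 2, w, h))
        scores.append(score)
        class_ids.append(class_id)
    return boxes, scores, class_ids


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two (x, y, w, h) boxes."""
    inter_w = max(0.0, min(a[0] + a[2], b[0] + b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[1] + a[3], b[1] + b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = a[2] * a[3] + b[2] * b[3] - inter
    return inter / union if union > 0 else 0.0


def nms(boxes: Sequence[Box], scores: Sequence[float],
        iou_threshold: float = 0.4) -> List[int]:
    """Greedy NMS: visit boxes by descending score, drop heavy overlaps."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep: List[int] = []
    for i in order:
        if all(iou(boxes[i], boxes[k]) <= iou_threshold for k in keep):
            keep.append(i)
    return keep
```

With this sketch, two heavily overlapping boxes around the same object collapse into the single higher-scoring box, which is the one that would then be annotated on the frame.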

The system further annotates identified objects with bounding boxes and labels overlaid onto the video stream, offering real-time feedback. Challenges faced during implementation, including network latency and inference speed, were addressed. Future improvements were also proposed, such as optimizing processing speed and integrating cloud services for enhanced functionality. This project demonstrates a practical application of computer vision techniques in real-time object detection, holding promise for applications in the surveillance, automation, and security sectors.

V. WORKING

The object detection and navigation logic is a sophisticated system designed to enhance navigation safety through sensor fusion. It uses a dedicated sensor to detect obstacles or objects in the user's way, and it combines detection algorithms with camera data to detect obstacles and objects in real time. Users benefit because the system can change the route in real time when something blocks the way, so that they reach their destination safely. It acts as a guide that keeps the user on the right path and away from harm, and its object and obstacle detection component finds things nearby that could be dangerous. After detecting an object, the system keeps tracking it, continuously monitoring each detection to anticipate the object's future position. For monitoring, we use a Pi Camera module. If better image quality is needed, it is suggested to switch to a Wi-Fi camera module, since the current camera has a resolution limit. The camera size may also affect how the prototype is built, so the camera should be kept as small as possible to preserve portability.

The central navigation system uses maps and GPS to plan the best route to the chosen destination and gives clear instructions on how to get there. The position accuracy of the Neo 6M GPS module is within a few meters in ideal conditions and may vary depending on signal quality, satellite visibility, and environmental conditions. The navigation control part of the system follows the planned route and keeps the user on track by guiding adjustments to the user's movement, including changes of speed, turning, and stopping. Feedback from the sensors helps the user travel safely and smoothly.
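As an illustration of the GPS positioning step, the sketch below parses a position from an NMEA `GGA` sentence, the kind of text line that GPS receivers such as the Neo 6M commonly emit over a serial link, and estimates the straight-line distance to a waypoint with the haversine formula. It is a sketch under stated assumptions rather than the system's actual code: the function names (`parse_gga`, `haversine_m`) are hypothetical, the checksum is not verified, and reading from the serial port is left out.

```python
import math
from typing import Optional, Tuple

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius, an approximation


def _to_degrees(value: str, hemisphere: str) -> float:
    """Convert NMEA (d)ddmm.mmmm notation to signed decimal degrees."""
    raw = float(value)
    degrees = int(raw // 100)
    minutes = raw - degrees * 100
    decimal = degrees + minutes / 60.0
    return -decimal if hemisphere in ("S", "W") else decimal


def parse_gga(sentence: str) -> Optional[Tuple[float, float]]:
    """Return (latitude, longitude) from a GGA sentence, or None without a fix.

    Note: the trailing checksum is ignored in this sketch.
    """
    fields = sentence.strip().split("*")[0].split(",")
    if len(fields) < 7 or not fields[0].endswith("GGA"):
        return None
    if fields[6] in ("", "0"):  # fix-quality field: 0 means no fix
        return None
    if not fields[2] or not fields[4]:
        return None
    return _to_degrees(fields[2], fields[3]), _to_degrees(fields[4], fields[5])


def haversine_m(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance in meters between two latitude/longitude points."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    d_phi = phi2 - phi1
    d_lambda = math.radians(lon2 - lon1)
    a = (math.sin(d_phi / 2) ** 2
         + math.cos(phi1) * math.cos(phi2) * math.sin(d_lambda / 2) ** 2)
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(min(1.0, a)))
```

Because the receiver's accuracy is only within a few meters even in ideal conditions, a distance computed this way is better suited to announcing coarse progress toward a waypoint than to fine obstacle avoidance.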

For voice assistance, we use a microphone and a speaker. The microphone captures audio input and the speaker delivers the response. Speech recognition converts the spoken words into text, and natural language processing is used to understand the user's intent. Text-to-speech then converts the response into spoken words. The feedback and monitoring system tells the user what is happening during the journey: the route being taken, the turns coming up, and warnings about potential obstacles. This method provides reliable and adaptive navigation assistance, making travel safer and more convenient for users.
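One way the obstacle warnings could be composed before being handed to text-to-speech is sketched below. It combines the ultrasonic sensor's time-of-flight reading (distance = speed of sound × echo time / 2, since the pulse travels out and back) with the horizontal position of a detected object in the camera frame. Everything here is an assumption made for illustration: the function names, the 100 cm alert distance, the three-way split of the frame, and the message wording are not taken from the paper.

```python
from typing import Optional

SPEED_OF_SOUND_CM_PER_S = 34_300.0  # roughly 343 m/s in air at about 20 °C


def echo_to_distance_cm(echo_seconds: float) -> float:
    """Convert a round-trip ultrasonic echo time to a one-way distance."""
    return echo_seconds * SPEED_OF_SOUND_CM_PER_S / 2.0


def horizontal_position(box_center_x: float, frame_width: float) -> str:
    """Describe where a detection sits, splitting the frame into thirds."""
    third = frame_width / 3.0
    if box_center_x < third:
        return "on your left"
    if box_center_x > 2.0 * third:
        return "on your right"
    return "ahead"


def obstacle_warning(label: str, box_center_x: float, frame_width: float,
                     distance_cm: float, alert_cm: float = 100.0) -> Optional[str]:
    """Build the sentence to speak, or None if the obstacle is far away.

    alert_cm is a hypothetical threshold chosen for this sketch.
    """
    if distance_cm > alert_cm:
        return None
    position = horizontal_position(box_center_x, frame_width)
    return f"Caution, {label} {position}, about {round(distance_cm)} centimeters away"
```

The returned string would then be passed to whichever text-to-speech engine the system uses; returning `None` for distant objects keeps the assistant from speaking continuously.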

VI. RESULTS

In summary, the object recognition and navigation system developed in this project offers a comprehensive solution for enhancing travel safety and convenience. By combining sensor fusion techniques, GPS integration, and voice assistance, the system provides users with reliable navigation guidance while adapting to changing environmental conditions.

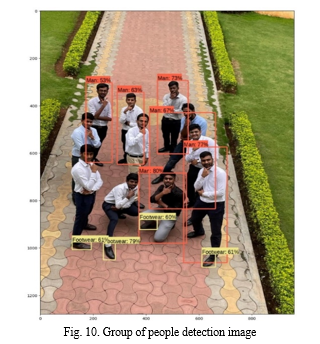

Fig. 8 and Fig. 9 show the detection of multiple objects.

This project represents a significant step forward in the advancement of intelligent navigation systems, with potential applications in fields such as autonomous vehicles, robotics, and smart transportation networks. Fig. 10 shows the detection of a group of people.

Conclusion

Visually impaired people face many problems, especially outdoors, when they need to move from one place to another. They are injured in accidents, and without guidance they struggle. Blind people often depend on family, relatives, and friends for assistance. We therefore created a smart assistive navigation system to help visually impaired people interact with others while travelling and carry out day-to-day tasks independently. The system addresses where objects are sensed, what the gap is between the user and the object or obstacle, and whether clear instructions are available to assist the user. To this end, we built a wearable device that visually impaired individuals can use freely in their daily routine and that reduces their dependence on others. The wearable device detects moving objects in the user's path. For monitoring, we use an ESP32 cam module. If better image quality is needed, it is suggested to switch the camera in the Wi-Fi module, since it has a resolution limit. The camera size may also affect how the prototype is built, so the camera should be kept as small as possible to preserve portability. The detected object is converted to an audio signal and played to the user through the speaker. The system helps blind people with navigation and positioning in their day-to-day lives.


Copyright

Copyright © 2024 Rakesh N, Shashank V, Sudeep B M, Mrs. Sushma K Sattigeri. This is an open access article distributed under the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

Paper Id : IJRASET63166

Publish Date : 2024-06-06

ISSN : 2321-9653

Publisher Name : IJRASET
